Ataccama 16.3.0 Release Notes

| Products | 16.3.0-patch20 |
|---|---|
| Release date | February 18, 2026 (patch20) |

ONE

Introducing Ataccama Data Quality Gates

Ataccama Data Quality Gates enables you to deploy and execute data quality rules directly within your data processing environments, validating data in motion as it flows through pipelines.

Define rules once in Ataccama ONE, then deploy them as native platform functions that execute locally within your data infrastructure.

This initial release supports Snowflake, deploying the DQ rules as User-Defined Functions (UDFs) callable directly in SQL.

For full release notes, see Ataccama Data Quality Gates Release Notes.

Databricks Pushdown Processing

Pushdown profiling is available on Databricks sources. Run profiling and data quality evaluation as an SQL pushdown workload directly in Databricks and benefit from enhanced security and performance.

For more details about how pushdown processing works and how to set it up for your connection, see Databricks Pushdown Processing.

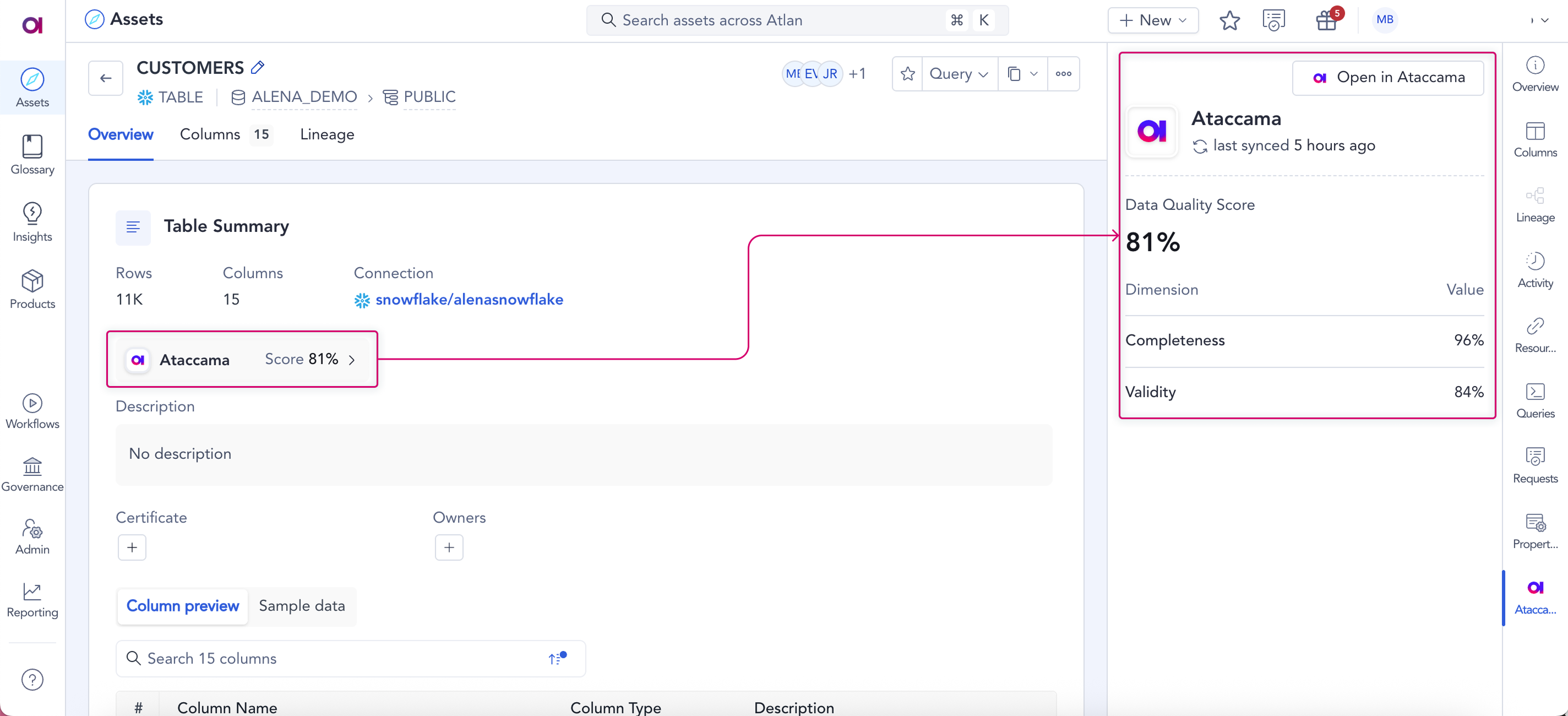

View ONE DQ Results in Atlan

Atlan can now retrieve data quality results directly from Ataccama ONE and display them in its catalog. This lets you perform quality-aware data discovery without switching between platforms.

Atlan displays overall data quality results at both the table (catalog item) and attribute levels, including their dimensional breakdowns. Additionally, you can click through from Atlan assets to corresponding catalog items in ONE for detailed context and analysis.

You have full control over which ONE data sources are included in the integration.

For more information and setup instructions, see Atlan Integration and Configure Atlan Integration.

ONE Data Available in Hybrid Environments

ONE Data is now fully supported in hybrid environments, where all of Ataccama ONE runs in the cloud except Data Processing Engines (DPEs).

This update allows you to take full advantage of ONE Data capabilities across both on-premise and cloud deployments.

For details about how to make ONE Data available in a hybrid setup, see ONE Data Setup for Hybrid Deployments.

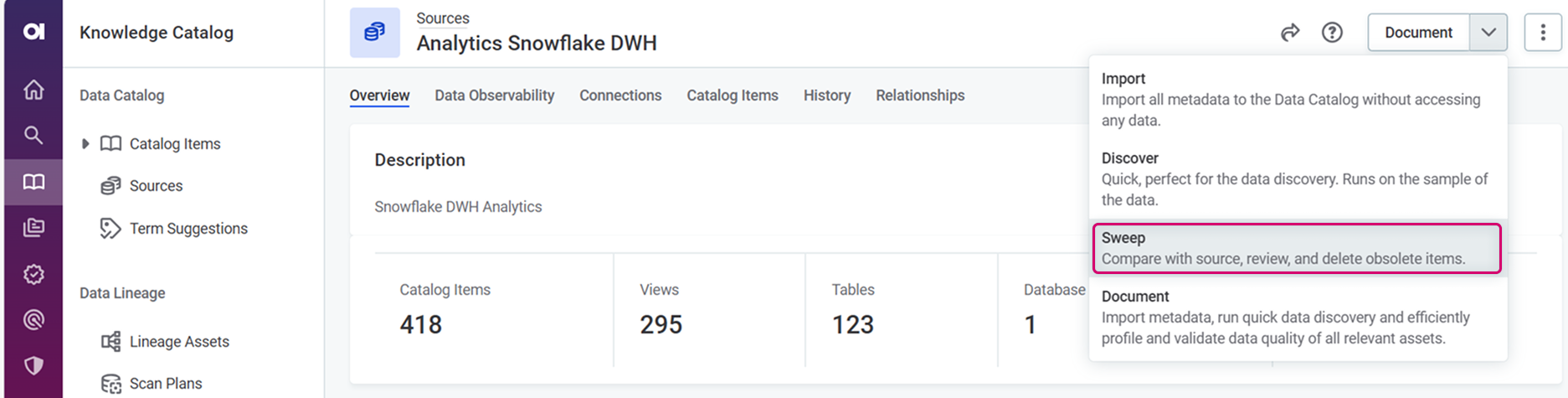

Remove Obsolete Catalog Items in Bulk

Keep your Data Catalog up to date with the new Sweep documentation flow.

Start by running the Sweep documentation flow to identify catalog items that no longer exist in the data source, either because they’ve been deleted or renamed. Then review and delete identified catalog items in bulk from the Notification Center.
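Conceptually, the sweep compares the catalog items documented for a source with the objects that the source currently exposes. The Python sketch below shows that comparison as a set difference. The function and sample names are hypothetical and do not reflect how the flow is implemented. The sketch also shows why a renamed table is flagged: its old name no longer exists in the source.

```python
def find_obsolete_items(catalog_items: set[str], source_objects: set[str]) -> set[str]:
    """Return catalog items whose underlying source object no longer exists.

    Hypothetical illustration: a renamed table is reported under its old
    name, because that name is no longer present in the source.
    """
    return catalog_items - source_objects


catalog = {"sales.orders", "sales.customers", "sales.orders_2023"}
# customers was renamed to clients; orders_2023 was dropped
source = {"sales.orders", "sales.clients"}

print(sorted(find_obsolete_items(catalog, source)))
# ['sales.customers', 'sales.orders_2023']
```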

Catalog Item Page Performance

The catalog item screen has been optimized to improve loading performance by reducing the amount of data fetched by default.

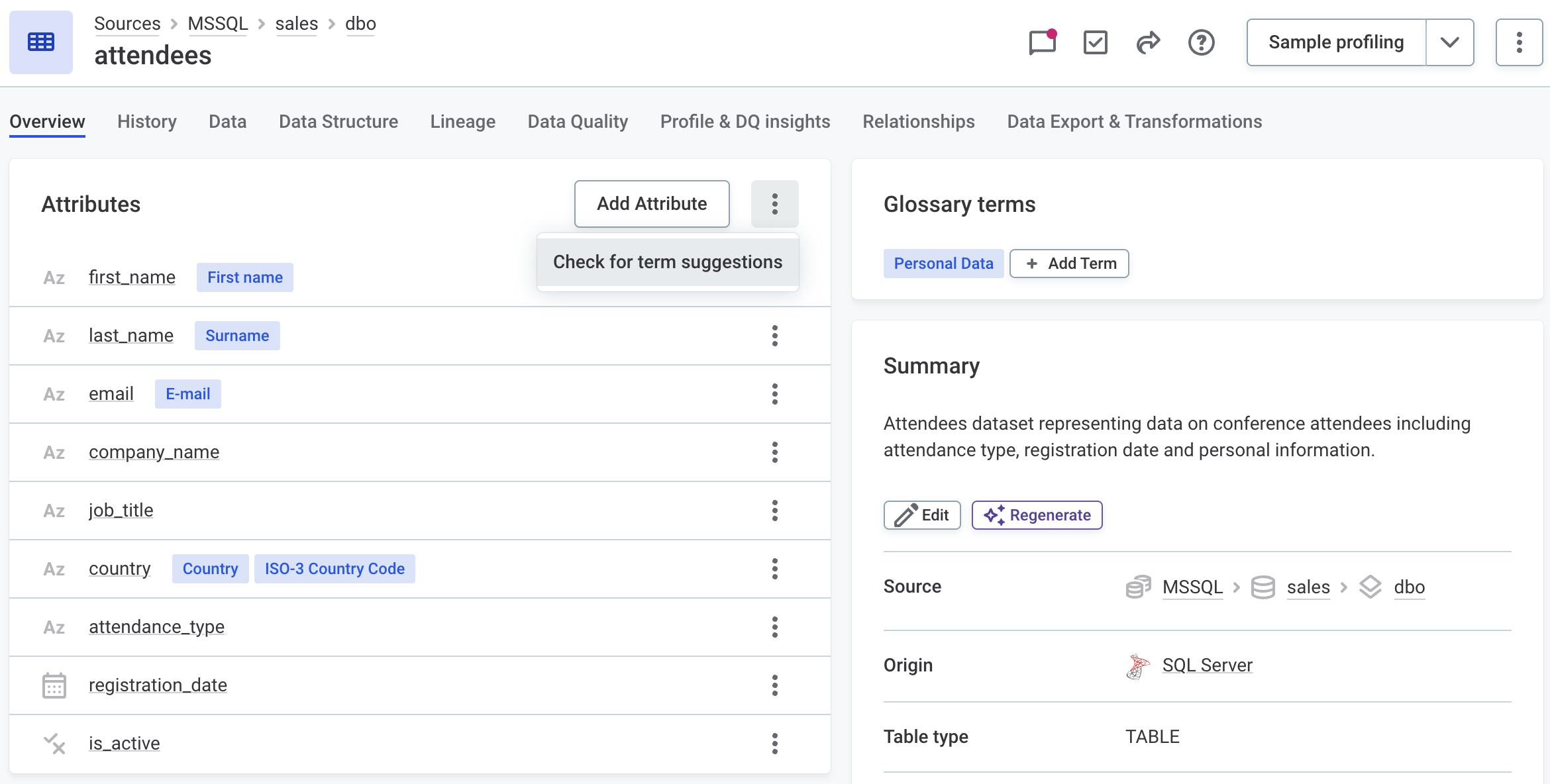

Attribute-Level Information Display

Term suggestions and anomaly icons are no longer displayed on the attribute listing on the catalog item Overview tab.

-

Term suggestions can be managed from Knowledge Catalog > Term Suggestions, or accessed for a specific catalog item via the three-dot menu in the Attributes section (Check for term suggestions).

Individual attribute term suggestions remain visible in the attribute details sidebar.

-

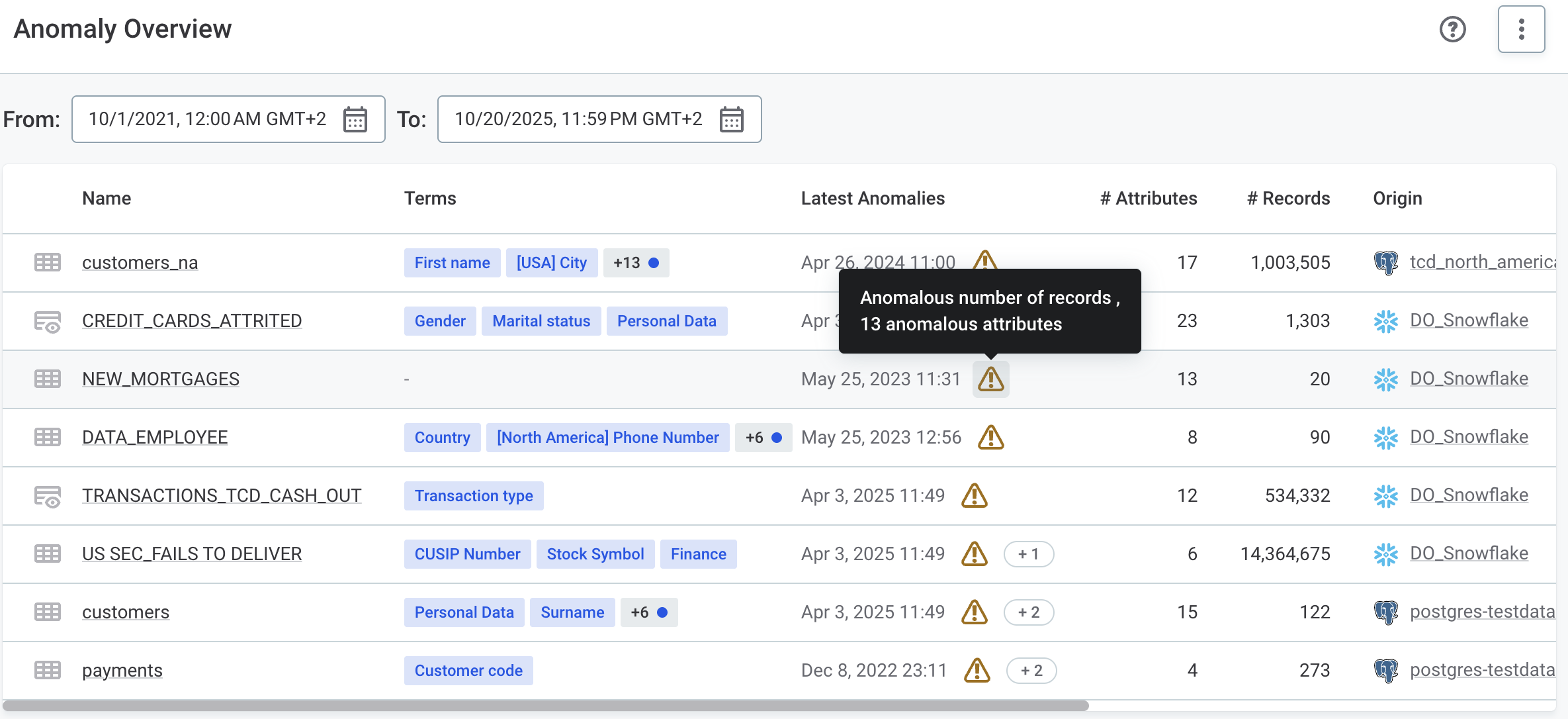

Anomalies are indicated by a banner at the catalog item level, where users can view details.

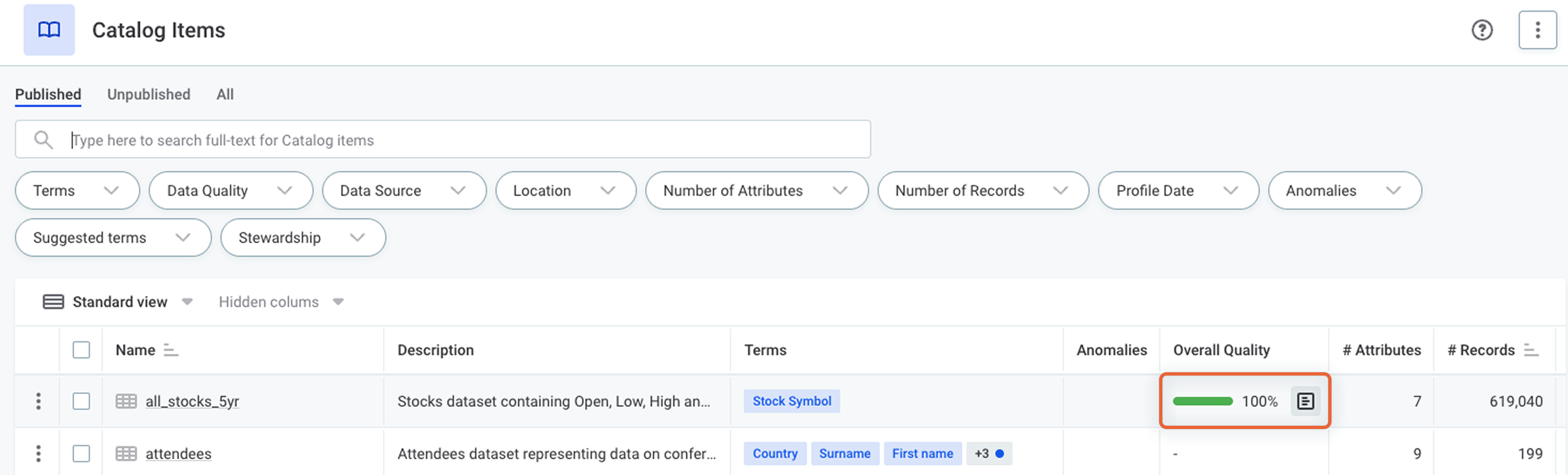

Overview Tab and Navigation

The following widgets have been removed from the Overview tab default layout:

-

Data Quality widget

-

Number of records widget

To maintain quick access to this information:

-

Navigating to a catalog item from monitoring projects now opens the Profile & DQ Insights tab directly.

-

A new shortcut from the quality column in the catalog item listing links to the Data Quality tab.

Re-enabling hidden features

Removed widgets and attribute-level information can be re-added if needed, though this can impact performance. See Entity Screen Customization.

New Authentication Options for Snowflake in Data Stories

Snowflake sources in Data Stories now support key-pair authentication and OAuth 2.0. For configuration details, see Data Stories Connection.

Support for S3-Compatible Data Sources

Connect to S3-compatible storage solutions like MinIO, expanding your object storage options beyond AWS S3.

For configuration details, see Amazon S3 Connection and follow the instructions for S3 compatible connectors.

New MS Teams Notifications Integration

MS Teams notifications for monitoring projects are now available again through a new integration method.

If you previously used MS Teams notifications in Ataccama ONE, generate a new incoming webhook in MS Teams using the Workflows app and update your notifications configuration in ONE accordingly. See MS Teams Integration.
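Before you update the configuration in ONE, you may want to confirm that the new Workflows webhook accepts messages. The Python sketch below posts a simple test message using only the standard library. It assumes the webhook was created from the Workflows template that posts an Adaptive Card to a channel; the payload shape follows that assumption. The helper names are hypothetical, and ONE builds its own notification payloads independently of this sketch.

```python
import json
import urllib.request


def build_test_card(text: str) -> dict:
    """Wrap plain text in an Adaptive Card message.

    Assumes the Workflows "post to a channel when a webhook request is
    received" template, which reads cards from the "attachments" list.
    """
    return {
        "type": "message",
        "attachments": [
            {
                "contentType": "application/vnd.microsoft.card.adaptive",
                "contentUrl": None,
                "content": {
                    "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
                    "type": "AdaptiveCard",
                    "version": "1.4",
                    "body": [{"type": "TextBlock", "text": text, "wrap": True}],
                },
            }
        ],
    }


def send_test_message(webhook_url: str, text: str) -> int:
    """POST the test card to the webhook URL and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(build_test_card(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

For example, `send_test_message("<your webhook URL>", "Test message before updating ONE")` should return a 2xx status code if the webhook accepts the request.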

Enhancements to Lineage Edge Processing

Edge processing for lineage scanners can now be managed directly in the web application:

-

Turn on edge processing from your scan plan - Turn on edge processing when creating or editing a scan plan and select from connected edge instances via dropdown. Previously, edge processing had to be configured within the scan plan.

-

Lineage Configuration screen - View and manage all connected and deactivated edge instances. You can refresh the list to see the latest status and deactivate instances as needed.

For details, see Lineage Edge Processing.

Schedule Lineage Scan Plans

Lineage scan plans can now run automatically at defined intervals using cron expressions. This keeps your lineage metadata current with minimal manual effort.

-

Automatic import - Import scan results automatically after successful scans. Review and publishing remain under your control.

-

Automatic publishing - Fully automate lineage updates using automatic publishing (applies the Expand write strategy).

-

Progressive approach - Start with manual imports, enable automatic import for semi-automation, then add automatic publishing for full automation as you build trust in your data.

For more details, see Schedule scan plan.
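These notes do not specify which cron dialect scan plans use. As a rough illustration of how a standard five-field cron expression (minute, hour, day of month, month, day of week) selects run times, here is a minimal Python matcher.

It is a simplified sketch:
- It supports `*`, single values, ranges, lists, and `/` steps.
- It combines all fields with AND. Classic cron uses OR for day of month and day of week when both are restricted.
- It does not handle names such as `MON`.

If ONE expects a different dialect, for example a Quartz-style expression with a seconds field, see Schedule scan plan before reusing these patterns.

```python
from datetime import datetime

# (min, max) for: minute, hour, day of month, month, day of week (0 = Sunday)
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def _field_matches(field: str, value: int, low: int, high: int) -> bool:
    """Check one cron field such as '*', '5', '1-5', '*/15', or '0,30'."""
    for part in field.split(","):
        step = 1
        if "/" in part:
            part, step_text = part.split("/", 1)
            step = int(step_text)
        if part == "*":
            start, end = low, high
        elif "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
        else:
            start = int(part)
            # '5/15' means "from 5, every 15"; a plain '5' means exactly 5
            end = high if step > 1 else start
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False


def cron_matches(expression: str, moment: datetime) -> bool:
    """Return True if the five-field expression selects this minute."""
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour day-of-month month day-of-week")
    cron_weekday = (moment.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
    values = [moment.minute, moment.hour, moment.day, moment.month, cron_weekday]
    return all(
        _field_matches(f, v, low, high)
        for f, v, (low, high) in zip(fields, values, FIELD_RANGES)
    )
```

For example, `0 2 * * *` selects 02:00 every day, and `*/15 * * * 1-5` selects every quarter hour on weekdays.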

MDM

New Event Handler and Publisher

A new Event Handler and Publisher implementation brings improved stability and performance across event storing, filtering, processing, and traversing operations.

With the new event scope filter, you can target events more efficiently. Event batching and streaming offer flexible publishing options: large transactions can be broken into smaller chunks for publishing (batching), while small transactions can be collected and published as one chunk (planned).

The new implementation supports only the plan publisher and has a simplified configuration for easier setup and maintenance. For more details, see Streaming Event Handler.
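The sketch below models the two publishing modes in Python to make them concrete. It is a hypothetical illustration of the idea, not the Streaming Event Handler's implementation or configuration. Events are plain strings, and `max_batch_size` is an invented parameter.
- Batching splits one large transaction into bounded chunks.
- Coalescing corresponds to the planned behavior. It groups several small transactions into one chunk without splitting any of them.

```python
from typing import Iterable, Iterator, List, Sequence

Event = str  # placeholder type for a single change event (hypothetical)


def split_into_batches(events: Sequence[Event], max_batch_size: int) -> List[List[Event]]:
    """Batching: break one large transaction into chunks of at most max_batch_size events."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be positive")
    return [list(events[i:i + max_batch_size]) for i in range(0, len(events), max_batch_size)]


def coalesce_transactions(
    transactions: Iterable[Sequence[Event]], max_batch_size: int
) -> Iterator[List[Event]]:
    """Coalescing: collect small transactions into shared chunks.

    Transactions are never split here. A chunk is emitted when adding the
    next transaction would exceed max_batch_size. A transaction larger than
    the limit would first go through split_into_batches in a combined design.
    """
    chunk: List[Event] = []
    for tx in transactions:
        if chunk and len(chunk) + len(tx) > max_batch_size:
            yield chunk
            chunk = []
        chunk.extend(tx)
    if chunk:
        yield chunk
```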

Improvements to Admin Center

The MDM Web App Admin Center now features enhanced task execution monitoring, new navigation and usability improvements, and the ability to monitor the Orchestration Server status, all in one place.

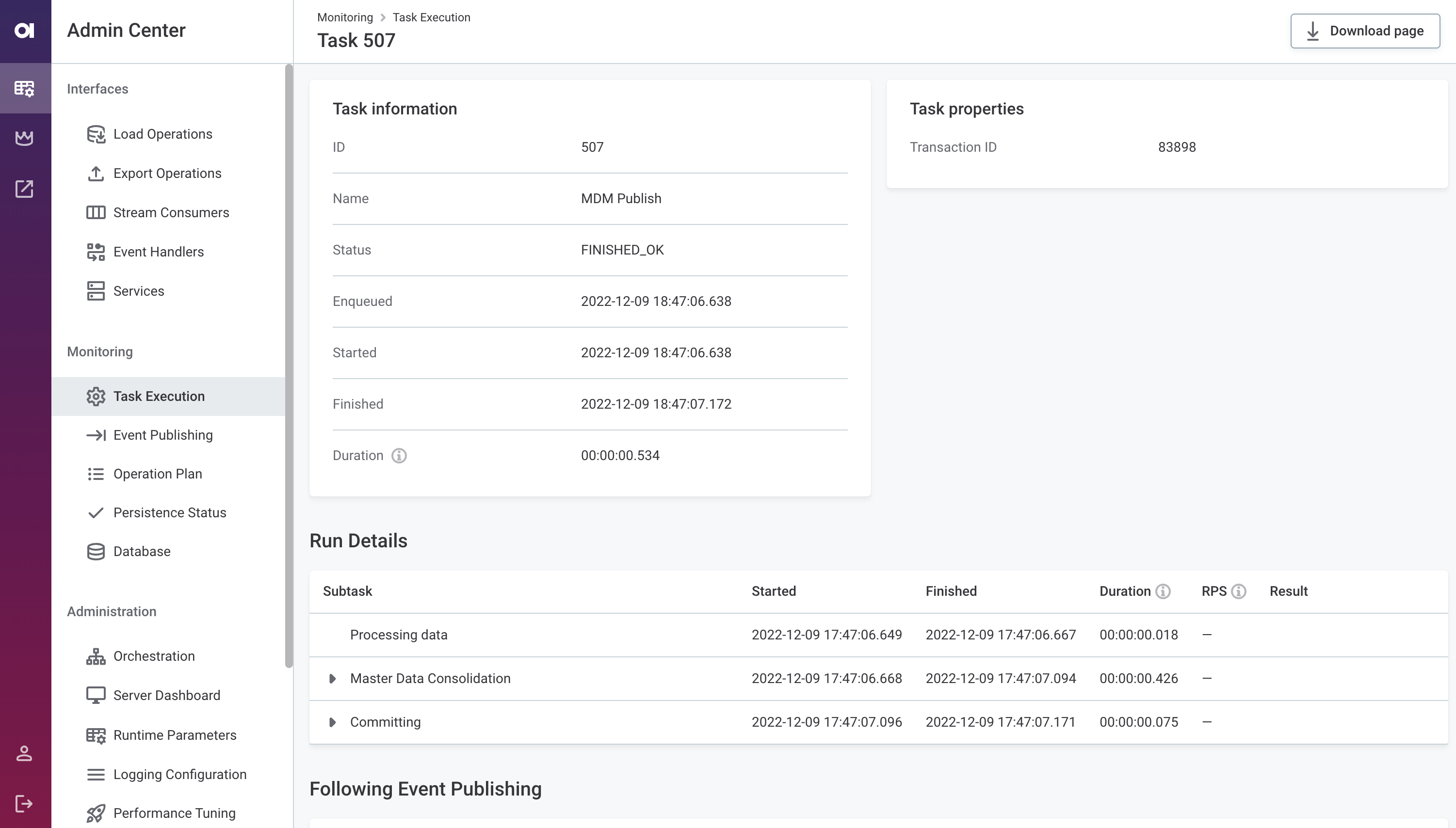

Enhanced Task Execution Monitoring

Key improvements include:

-

Millisecond-precision tracking - All job timestamps include milliseconds, making it easier to monitor and troubleshoot operations in high-workload environments where multiple transactions complete in parallel, sometimes within sub-second timeframes.

-

Timezone listed in execution reports - Execution reports indicate the server timezone for consistency across environments.

-

Better visibility of tasks in progress - Details and duration of currently running subtasks are expanded by default, including nested subtasks.

For quick status assessment, the task listing also shows one key metric per row: either Started at (while the task is running) or Finished at (once it has finished). In addition, the Tasks API now provides duration information even for unfinished subtasks, enabling better programmatic monitoring.

-

Running operations count on server stop - The Stop server action displays the count of currently running operations, helping you avoid interrupting critical processes. If these operations are not critical, you can now force stop them.

-

Persistence status monitoring - If defined, the VLDB persistence type can be monitored from the Persistence Status tab in the Admin Center.

To learn more, see Monitoring.
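Millisecond precision and an explicit timezone matter most when two jobs finish within the same second. This Python sketch formats timestamps in ISO 8601 with milliseconds and a UTC offset. It is only an illustration; the exact format used in MDM execution reports may differ. The UTC+01:00 server timezone is an assumption for the example.

```python
from datetime import datetime, timedelta, timezone


def format_execution_timestamp(moment: datetime) -> str:
    """Render a timezone-aware timestamp with millisecond precision and its UTC offset."""
    if moment.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return moment.isoformat(timespec="milliseconds")


server_tz = timezone(timedelta(hours=1))  # hypothetical server timezone (UTC+01:00)
first = datetime(2026, 2, 18, 9, 30, 15, 120000, tzinfo=server_tz)
second = first + timedelta(milliseconds=450)  # finishes within the same second

print(format_execution_timestamp(first))   # 2026-02-18T09:30:15.120+01:00
print(format_execution_timestamp(second))  # 2026-02-18T09:30:15.570+01:00
```

With second-level precision, both jobs would show `09:30:15` and their order would be lost.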

Orchestration Screen Available in Admin Center

Track the health status, resources, services, and various statistics of the Orchestration Server directly from the MDM Web App Admin Center. The new Orchestration screen also allows monitoring and running workflows and schedulers.

To learn more, see Administration.

Changes to Navigation

The navigation menu in MDM Web App Admin Center has been reordered for easier access to key functions.

For a full overview of changes, see MDM Web App Admin Center.

Store Lookup Files in MinIO

MDM now supports storing lookup files in MinIO for improved performance with large files and updates without server restarts.

Choose the right approach for your needs:

-

Static approach - Uses Git storage with server restarts for updates. Best for small files (under 5 MB) and infrequent changes.

-

Dynamic approach - Leverages MinIO and the Versioned File System (VFS) component for no-downtime updates. Best for large files or production environments requiring high availability.

Additionally, MinIO provides built-in backup and recovery capabilities, enhancing data integrity and resilience.

Both approaches work in cloud and self-managed deployments. You can combine both within the same environment or use the dynamic approach for all lookup files regardless of size.

For configuration details and migration steps from the static to the dynamic approach, see Lookups Management in MDM.
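The guidance above can be summarized as a small decision helper. This hypothetical Python sketch encodes only the criteria stated in these notes: file size around 5 MB, update frequency, and high-availability needs. The function and its parameters are not part of MDM, and treating 5 MB as 5 * 1024 * 1024 bytes is an assumption.

```python
STATIC_SIZE_LIMIT_BYTES = 5 * 1024 * 1024  # "under 5 MB" guideline, read as MiB (assumption)


def choose_lookup_approach(size_bytes: int, frequent_updates: bool, needs_high_availability: bool) -> str:
    """Suggest 'static' (Git storage, restart on update) or 'dynamic' (MinIO + VFS)."""
    if size_bytes >= STATIC_SIZE_LIMIT_BYTES or frequent_updates or needs_high_availability:
        return "dynamic"  # no-downtime updates, suited to large files and production
    return "static"       # small, rarely changing files
```

Because the dynamic approach works for lookup files of any size, returning `"dynamic"` is always a valid choice. The static approach is only ever an option, never a requirement.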

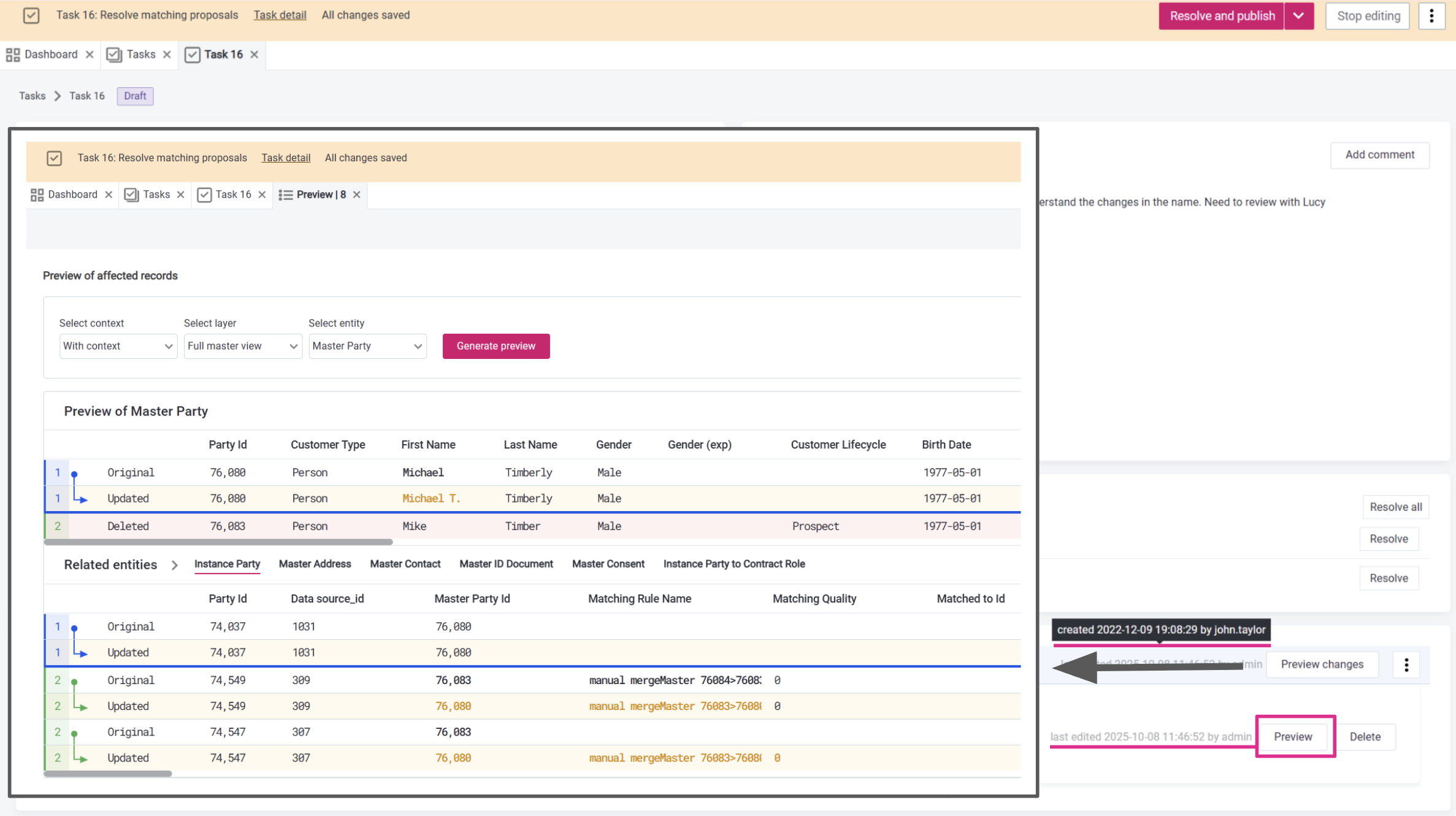

Enhanced Matching Proposal Management

Benefit from better visibility and control when working with matching proposals and manual match.

Review and modify merge operations before applying them, track who created or last edited each draft operation, and identify operation types at a glance with color-coded previews. To prevent accidental cross-task actions, task context warnings are now available.

Deprecation Notice

The following features will be deprecated starting from version 17. If you have any questions, contact Ataccama Support.

MS SQL and Oracle RDBMS

As a follow-up to the announcement in version 15.4.0, MS SQL and Oracle RDBMS will no longer be supported for MDM Storage starting in version 17. While these databases will continue to function during the 16.3.0 LTS support period (two years), we recommend migrating to PostgreSQL at your earliest convenience.

Alternatively, you can switch to Custom Ataccama Cloud, where we manage PostgreSQL on your behalf.

Async Event Handler

The Async Event Handler is obsolete from version 17 and will be removed in version 18.

If you are using the event handler traversing functionality, we recommend migrating to the new Streaming Event Handler. If migration is not immediately possible, the Async Event Handler can continue to be used for small data volumes until version 18.

Old Admin Center

The old Admin Center has been fully replaced with the new implementation, available since 13.8.0.

In 16.3.0 LTS, the old Admin Center is turned off by default. It is deprecated in version 17 and will be removed in version 18.

No user action is needed to migrate: you can start using the new Admin Center immediately. For details, see MDM 16.3.0 Upgrade Notes.

RDM

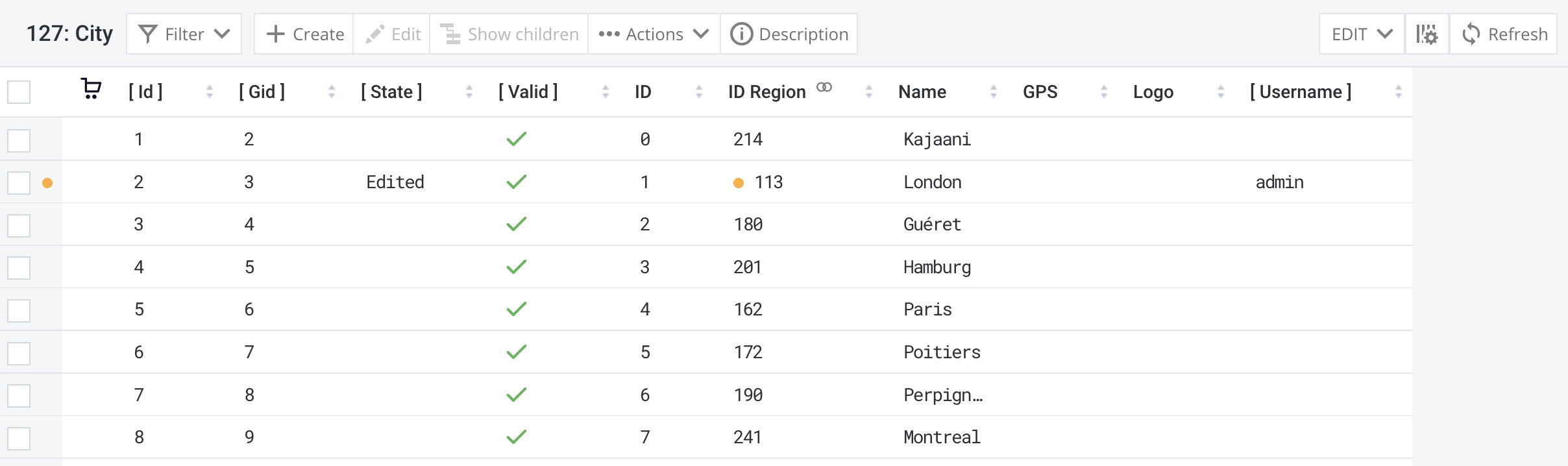

Edited Values Display in RDM Views

RDM Views now display edited values in create and edit dialogs and record detail views more clearly, allowing you to see at a glance which specific attributes were modified.

An orange dot marks updated attributes when the attribute value or a reference to a parent record changes. If the parent record’s values change instead, no indicator is displayed.

Value hovers in grid listings are no longer displayed, as published values cannot be loaded for multiple rows.
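The rule for the orange dot can be stated as a comparison between the published and edited versions of a single record. The Python sketch below uses a hypothetical data model, not RDM's internal one. Each record holds its own attribute values plus the IDs of referenced parent records. Changes inside a parent record are invisible to this comparison, which is why they produce no indicator.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class RecordVersion:
    values: Dict[str, object]  # the record's own attribute values
    parent_refs: Dict[str, Optional[str]] = field(default_factory=dict)  # reference attribute -> parent record ID


def changed_attributes(published: RecordVersion, edited: RecordVersion) -> Set[str]:
    """Return attributes that would be marked as edited.

    Only the record's own values and its parent references are compared,
    so edits made to the parent record itself never mark anything here.
    """
    changed = {
        name for name in published.values.keys() | edited.values.keys()
        if published.values.get(name) != edited.values.get(name)
    }
    changed |= {
        name for name in published.parent_refs.keys() | edited.parent_refs.keys()
        if published.parent_refs.get(name) != edited.parent_refs.get(name)
    }
    return changed
```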

Customize Logo and Environment Name

Brand your RDM environment with custom logos and labels. Upload your organization’s logo, set environment-specific text (such as "Production" or "Development"), and apply styling to adjust the logo’s appearance.

For details, see RDM Server Application Properties > Static configuration.

Platform Changes

PostgreSQL 17 Support

ONE components now support PostgreSQL 17 as the recommended database version across the platform (DQ&C, MDM, RDM). When upgrading self-managed, on-premise deployments to version 16.3.0, upgrade to PostgreSQL 17 for optimal performance and support.

PostgreSQL 15 remains available under limited support. This means the application should run on this platform but compatibility is not tested with each version.

For details, refer to Supported Third-Party Components.
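To check which PostgreSQL version a self-managed deployment runs, you can query `SHOW server_version_num;`. For PostgreSQL 10 and later, the value is the major version times 10000 plus the minor version, for example `170002` for 17.2. The hypothetical Python helper below maps that value to the support levels stated in these notes. Versions other than 17 and 15 are not covered here, so consult Supported Third-Party Components for them.

```python
def classify_postgres_version(server_version_num: str) -> str:
    """Map PostgreSQL's server_version_num (e.g. '170002') to the support level in these notes."""
    major = int(server_version_num) // 10000  # valid for PostgreSQL 10 and later
    if major == 17:
        return "recommended"
    if major == 15:
        return "limited support"
    return "check Supported Third-Party Components"
```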

Fixes

We’ve streamlined the lists of fixes by removing the internal Jira ticket references. In addition, we’ve organized ONE fixes by functional area, making it easier to find relevant updates.

ONE


- 16.3.0-patch20

-

-

Catalog & data processing

-

When creating a DQ firewall from a catalog item, attributes with unsupported data types are now skipped instead of blocking firewall creation entirely. A warning lists any skipped attributes.

-

Fixed intermittent `ConcurrentModificationException` failures in JDBC Reader and SQL Execute steps, sometimes surfacing as "Cannot connect to the database" errors despite the database being healthy. The issue was caused by a race condition when multiple jobs ran concurrently.

-

Metadata import jobs can now be assigned a custom priority in the processing queue via Metadata Management Module configuration.

-

-

Performance improvements

-

Faster loading on multiple screens:

-

Edit screens.

-

Catalog Items tab on source details.

-

Data Quality tab on catalog items.

-

Pushdown connection details, especially for environments with many connections.

-

Data Observability tab on sources.

-

-

-

Lineage

-

Scan plan schedule deletion is now immediately reflected without requiring a page refresh.

-

Improvements to SSIS lineage scanner:

-

Assets are now correctly resolved when multiple assets share the same external ID.

-

Empty packages, missing SQL statements, and invalid connection paths are now handled gracefully instead of failing.

-

Import no longer fails when a previously mapped connection has been deleted from the catalog.

-

-

MS SQL lineage assets using the MSSQL-NO-LOCKS technology type now display the correct icon on the lineage diagram.

-

Fixed an issue where duplicate entries could cause errors during lineage diagram calculation.

-

-

Chat with documentation now links to the correct documentation version.

-

- 16.3.0-patch19

-

-

Performance improvements

-

Faster loading of the Data Observability tab and related widgets on sources.

-

Faster loading of the Data Quality tab on catalog items.

-

Improved database access performance in DPM.

-

Reduced backend load during high-volume job processing.

-

Fixed long-running database transactions caused by slow OpenSearch responses, which could block other operations.

-

Turned off resource-intensive PostgreSQL custom metrics that could significantly slow down database performance.

-

Fixed an issue where hybrid DPE slots were incorrectly reported as fully utilized, causing queued jobs to stall indefinitely.

-

Improved reliability of search event processing.

-

-

Lineage

-

When importing Databricks or Dremio lineage, connections are correctly preselected in the import mapping settings.

-

Databricks lineage assets now correctly link to catalog items instead of appearing as generic database assets.

-

-

- 16.3.0-patch18

-

-

Lineage scans now correctly handle private keys with newline characters.

-

Improved AI Evolution service performance.

-

- 16.3.0-patch17

-

-

Fixed an issue where ADLS mounting would fail when using hybrid DPE with Spark pushdown and Azure Key Vault credentials.

-

Faster loading times for Source and Catalog screens through optimized metadata fetching.

-

Faster catalog item metadata imports through reduced database overhead.

-

Databricks lineage assets now correctly link to catalog items instead of appearing as generic database assets.

-

Fixed an upgrade issue where certain roles could end up with duplicate access levels, preventing modifications.

-

Improved hybrid DPE stability during high memory usage.

-

Optimized system validations to reduce resource usage on large environments.

-

Reduced log noise from analytics-related network errors.

-

- 16.3.0-patch16

-

-

Power BI reports with errors now show "No preview available" in ONE instead of a TypeError.

-

Audit records in the `audit_affected_asset` table now show only the unique user ID in the `user` field and no longer include user roles.

-

Data observability overview metrics now load on demand, improving page load times for large sources.

-

Fixed an issue where MS SQL lineage scans could fail when logging certain parsing errors.

-

Attribute IDs on SQL catalog items imported from content packs are now preserved when editing the query.

-

Fixed 'user is null' messages flooding logs on some environments.

-

The PostgreSQL `application_name` connection parameter in logs no longer contains correlation and transaction IDs, resulting in more useful query statistics.

-

Security fixes for Lineage Scanning service: CVE-2025-48976, CVE-2025-55163.

-

- 16.3.0-patch15

-

-

Set the default logging level for hybrid DPEs to `DEBUG`.

-

Lineage

-

When importing lineage from a ZIP file, you can now import up to 50 connections at once, preventing incomplete diagram imports.

-

MS SQL scan plans no longer fail due to datetime parsing issues. If parsing fails, the current time is used instead (or null if allowed) and a warning is logged.

-

Scan plans screen now refreshes automatically when updates are available.

-

Null values in lineage data no longer cause parsing errors when loading diagrams.

-

Updated documentation link to the correct version.

-

-

- 16.3.0-patch14

-

-

Monitoring project results in email notifications are now rounded to two decimal places.

-

Loading to ONE Data works correctly for data with null or empty values of DateTime data type.

-

Lineage:

-

Databricks lineage is now correctly labeled and mapped to catalog items, preserving the lineage hierarchy after import.

-

When importing Databricks or Dremio lineage, connections are correctly preselected in the import mapping settings.

-

When providing a private PEM key for a scan plan, newlines are no longer replaced by spaces for values spanning multiple lines.

-

-

- 16.3.0-patch13

-

-

In Data Observability, attributes are no longer individually logged when calculating domain statistics, improving platform performance.

-

Changes in terms applied to schema views are now reflected in search immediately.

-

In Ataccama Cloud environments, uploading large lineage scan results into ONE no longer gets stuck or fails.

-

- 16.3.0-patch12

-

-

After upgrading to 16.3.0, Sweep documentation flow now appears in the documentation flow menu.

-

Rule suggestions load correctly in monitoring projects for catalog items with assigned rules or terms you don’t have access to.

-

Term Suggestions services no longer overload application logs with zero-confidence term suggestions.

-

Term Suggestions metrics are no longer collected when the services are not configured.

-

BigQuery pushdown processing now displays the correct source name in error messages.

-

Improved monitoring project notification logging for faster troubleshooting.

-

Upgraded OpenSearch for enhanced security.

-

- 16.3.0-patch11

-

-

Rule suggestions now correctly respect your access permissions when using the Check for rule suggestions option. You’ll see suggestions for catalog items you have access to (even without term access), while rules requiring higher permissions are excluded.

-

Improved error messaging when creating an ADLS Gen 2 connection, making it easier to troubleshoot connection failures.

-

Lineage

-

Power BI reports are now correctly matched to reports in Data Catalog instead of datasets.

-

Scan plans executed on an edge instance are no longer shown as `Finished` if artifacts failed to upload.

-

When running MS SQL scan plans, empty statements are removed during preprocessing.

-

When running SQL scan plans, synonyms are now correctly recognized and processed for assets already in Data Catalog.

-

When a connection name is referenced through a function in a scan plan, a fallback name is now used instead of causing the scan to fail.

-

Assets with long concatenated names now appear correctly on the lineage diagram after import.

-

Lineage imports can now be canceled regardless of their status, including stuck or failed imports.

-

- 16.3.0-patch10

-

-

When reimporting the same monitoring project configuration but with a post-processing plan attached, related catalog items are no longer duplicated and the project runs as expected.

-

When importing monitoring project configuration containing filters, filter references to catalog items and attributes are correctly updated after remapping. Previously, filters would appear invisible after remapping but were still submitted to DQ evaluation as duplicates, causing exponential growth in filter combinations and processing failures.

-

Data quality rules containing regular expressions now produce consistent results between rule debugging and DQ evaluation.

-

Pausing an Ataccama Cloud environment running ONE Data with PrivateLink configured works as expected.

-

- 16.3.0-patch9

-

-

Updating individual data quality checks using the Update rule option in the DQ check modal works as expected. Previously, the update would fail to be propagated.

-

Editing an asset as a non-admin user now correctly creates a review task instead of returning a `GraphQlTransport` error.

-

AI-generated transcription of the lineage transformation context is correctly cleared after opening a different flow.

-

Resolved the issue with failing health checks for system validations when upgrading to 16.3.0-patch5.

-

Updated MS SQL JDBC driver to 13.2.1.

-

- 16.3.0-patch6

-

-

Profile inspector no longer shows mixed results when monitoring projects target the same catalog item with and without data slices. Results now correctly reflect whether the project uses the full dataset or a specific data slice.

-

Searching to add a data steward doesn’t remove all previously assigned stewards. New stewards are now added without affecting existing assignments.

-

Databricks pushdown processing works as expected when operational credentials are used.

-

Keycloak now starts successfully when using a custom monitoring port.

-

- 16.3.0-patch5

-

-

Lineage

-

Lineage diagram correctly highlights all connected assets after selecting an attribute.

-

The Transformation snippet panel stays closed when dismissed and does not reopen on other lineage diagrams. The panel also no longer displays outdated content from previous diagrams.

-

MSSQL lineage scanner extracts downstream lineage from Table Valued Functions (TVFs).

-

Tableau lineage scanner skips unsupported connection types and logs them instead of failing the entire scan.

-

Power BI lineage scanner correctly extracts lineage when Power Query transformations reference other sources or variables within the same dataset.

-

-

Catalog & data processing

-

Profiling no longer fails on Databricks catalog items when data masking is applied.

-

Databricks jobs execute successfully when the ADLS path contains spaces in folder names.

-

-

Authentication & security

-

Security fixes for Task service and Workflow service: CVE-2025-55752, CVE-2025-41249.

-

SSO login works reliably without intermittent failures.

-

-

Other fixes

-

Component rules on Databricks support complex transformations, including joins and aggregations.

-

Databricks JDBC connections using integrated credentials work correctly after upgrade to version 16.3.0.

-

-

- 16.3.0-patch4

-

-

Lineage

-

Power BI lineage scanner no longer fails on certain workspaces.

-

Power BI Lineage scanner processes report parameters correctly.

-

Azure Data Factory lineage scanner supports CSV files from Azure Data Lake Storage.

-

Standalone lineage scanner registers correctly with edge manager version 16.2.0.

-

Power BI lineage scanner supports Direct SQL QUERY clause for Databricks, Teradata, Oracle, and BigQuery.

-

SSIS lineage imports show fewer connections in the matching screen as connections with identical properties are automatically merged.

-

Secrets are correctly synchronized from Ataccama ONE to edge instances (standalone lineage scanners).

-

-

Other fixes

-

Filter in the Select data steward dialog works as expected.

-

-

- 16.3.0-patch3

-

-

Workflows no longer fail when runtime configuration contains empty passwords or other secrets.

-

- 16.3.0-patch2

-

-

Long term names do not overflow the Relationships widget.

-

When using Snowflake OAuth credentials, the token validity is derived from the OAuth response.

-

On the lineage diagram, the transformation context panel now stays closed when dismissed and won’t show outdated content from previous diagrams.

-

Using the Start lineage from here option on attribute-level lineage correctly shows all edge assets.

-

- 16.3.0-patch1

-

-

Lineage

-

Lineage diagram metadata loads as expected on first load.

-

Lineage import publish logs are automatically refreshed instead of requiring a manual refresh.

-

Lineage import ZIP files now support up to 50 connections (up from 20).

-

CSV file uploads now support up to 50M rows per file (up from 5M).

-

XLS sources are now correctly displayed on the lineage diagram.

-

Creating custom SQL context for PowerQuery works as expected.

-

GraphQL validation errors no longer appear when running a scan plan in setups with edge management turned off.

-

When using NativeQuery SQL statements with MSSQL, source tables are shown as expected on the lineage diagram.

-

The SSIS lineage scanner now correctly identifies target tables when source components have both table name and SQL command defined.

-

-

Other fixes

-

When using transformation plans to export monitoring project results to a database or a CSV file, invalid and valid columns are correctly populated for failing rules instead of containing only null values.

-

Resolved performance issues when running the `comparemonitoringProject` GraphQL query in Custom Ataccama Cloud environments.

-

Increased Audit module connection pool size for better query performance.

-

-

- Initial release

-

-

Monitoring projects

-

Fixed a performance issue in the filter validator that determines suitable attributes for filters.

-

Failed projects no longer leave behind temporary files that take up extra storage space.

-

When running on BigQuery using pushdown processing, column names in generated SQL are no longer duplicated.

-

Fixed inconsistent results in monitoring projects that reference multiple aggregation rules with variables on Databricks.

-

When applying rules to Snowflake catalog items, only published rules are available for selection.

-

The Report tab displays correctly after adding a notification.

-

Notifications are now sent correctly when overall data quality is not available for some catalog items but others meet the notification threshold.

-

Anomaly checks applied on a data slice no longer process the entire catalog item.

-

The Catalog Items screen and catalog items load significantly faster.

-

Fixed the issue with anomaly detection jobs repeatedly failing.

-

Email notifications display values of data quality filters.

-

Extensive filtering operations are handled reliably without crashing, using improved file size and threshold management.

-

When importing configuration from one project to another, all rules from the source project are correctly imported and mapped.

-

When editing anomaly configuration, the value of Sensitivity of anomaly detection is displayed.

-

It is possible to add events and channels to notifications.

-

Publishing works as expected.

-

A warning about the number of possible value combinations no longer appears when unselecting attributes to be used as filters.

-

The Invalid Samples screen shows the correct overall quality value when accessed from a historical run.

-

The profile inspector only shows anomalies for attributes with anomaly detection enabled, preventing confusion caused by unrelated anomalies.

-

Email notifications no longer show some data quality results as negative if DQ results are missing.

-

Submission no longer fails when a relevant Data Processing Engine is temporarily disconnected.

-

Monitoring project configuration UI indicates if referenced catalog items have been deleted from Data Catalog.

-

-

Rules

-

Detection rule inputs are validated, no longer causing job failure because of invalid input names.

-

When assigning a term to an attribute, the rules applied to that term are now displayed in the Applied rules column.

-

In DQ rule creation, the AI prompt used to generate logic and inputs now persists after testing.

-

Sidebar with details of ONE Data table attribute displays the correct number of applied rules.

-

A correct dialog is displayed when changing dimension in the quick creation workflow.

-

Duplicate names are no longer allowed when creating inputs.

-

The Add rule option on the attribute Overview tab is no longer deactivated.

-

When viewing rule occurrences, the Attributes tab also displays rules applied to ONE Data attributes.

-

Rule debug improvements:

-

Deleting conditions is correctly reflected in the debug results.

-

Testing individual conditions shows correct results.

-

Debug shows consistent results for friendly and advanced expressions.

-

-

-

Transformation plans

-

Sharing plans is now possible.

-

When creating plans, ONE Operator, Data Owner, and Data Steward have Full access by default.

-

DB writer step now defaults to the Append data write strategy, instead of Replace.

-

Only users with corresponding permissions for a catalog item can select Create new catalog item in the Database Output step.

-

A warning is displayed after selecting Validate plan if incompatible data types are detected.

-

Fixed data preview for valid steps preceding a step with a validation issue.

-

Data preview jobs are correctly routed to the proper DPE.

-

Transformation plans now gracefully handle attributes with unsupported binary data types by skipping them during execution and displaying a warning in the UI.

-

-

Catalog & data processing

-

BigQuery, Synapse, and Databricks pushdown processing support additional SQL functions including aggregate, conditional, and mathematical operations:

`countDistinct`, `iif`, `math.abs`, `math.acos`, `math.asin`, `math.atan`, `math.e`, `math.exp`, `math.log10`, `math.log`, `math.pi`, `math.pow`, `math.sin`, `math.sqrt`, `math.tan`.

-

Snowflake pushdown processing supports VARIANT, VECTOR, and GEOMETRY column types by converting them to strings.

-

Okta Single Sign-On (SSO) can now be used with Spark processing. Previously, impersonation could be used only for interactive actions and local Data Processing Engine (DPE) jobs.

-

In Microsoft Excel files, empty columns are correctly resolved as String instead of Date and Integer columns are no longer treated as Boolean.

-

Data slices created from a BigQuery source use the correct data type when comparing data in a Timestamp column.

-

When sorting catalog items by # Records, unprofiled items are treated as having `0` records and appear at the bottom when sorting by most records first.

-

Documentation flow is no longer shown as running if all related jobs are finished (completed or canceled).

-

Databricks job clusters are correctly located during job execution.

-

-

Data source connections

- OneLake connections now support folder and file names containing spaces. Spaces in workspace names are still not supported.
- The Power BI Report Server connector now supports paginated reports.
- The S3 connection configuration screen displays the Endpoint and Region fields based on the provider type, and the MINIO provider is now labeled S3 Compatible.
- The Spark enabled option has been removed from JDBC connections.
- Databricks connections now support the `checkMountPoint` parameter to verify mount point accessibility before job execution.
- Snowflake JDBC connections no longer time out during network or SSL handshake failures.
- Database export operations work correctly when using write credentials only, without requiring default credentials for listing schemas and tables.
- The Test Connection feature works correctly for ONE Data connections.
- The Client ID field is now available in the SAP RFC connection creation form.
- Secret Management Service requests are correctly routed to the appropriate DPE in hybrid cloud environments.
- In Ataccama Cloud, Keycloak monitoring endpoints are no longer accessible from public domains. When upgrading, adjust your hybrid DPE configuration accordingly. See DQ&C 16.3.0 Upgrade Notes.
- Invalid JDBC connections no longer block gRPC executor threads for other connections.

ONE Data

- Adding rules or terms to the technical attribute `dmm_record_id` is no longer possible.
- Values in `DateTime` columns can be edited manually.
- Added new date-time formats to the import wizard.
- Tables no longer appear deleted while still being referenced by a transformation plan.
- When you export a table with an edited name, it is correctly exported using the updated name.

Data Admin Console

- Create Thread Dump and Kill Job actions are available on the DPM job detail page in DPM Admin Console. Previously, they were available only from the job listing menu.
- Job logs and related files can be downloaded as a ZIP file directly from the console.
- You can open jobs in a new tab, and the console remembers the previous page position when navigating back.
- Strict version compatibility rules prevent connections with unsupported DPE versions.

Performance & stability

- Addressed high CPU usage on environments using AWS RDS/Amazon Aurora database.
- DQ jobs now run more efficiently with optimized memory usage, addressing previous `OutOfMemory` failures. This improvement includes moving resource-intensive result processing from DPM to DPE and fixing a faulty filter combination limit check.
- Improvements to Anomaly Detector monitoring.

Usability & display

- Text in Custom Term Property no longer overflows adjacent elements.
- Filters on pages with a custom layout work as expected.
- Long explanation texts in monitoring project detailed results no longer overlap other fields.
- Selecting sources in the Create SQL Catalog Item dialog works as expected.
- Selecting a graph with data quality results displays a detailed graph.
- Icons in email notifications display correctly.
- Adding terms to comments works as expected.
- All localization files are correctly applied in self-managed deployments.

Upgradability

- The Task service can be upgraded from 15.4.1 to 16.2.0 without issues.
- Fixed the issue where ONE sometimes remained in Maintenance mode after upgrading to or deploying version 16.2.0.
- Fixed the issue where tasks could not be successfully approved or canceled after upgrading to 15.4.0.

MDM

16.3.0-patch15

- Improved MDM server startup time through database optimization.
- Filtering works as expected on fields using the `WINDOW` lookup type.
- Usernames are correctly displayed in MDM Web App Admin Center instead of appearing hashed.

16.3.0-patch14

- Transition names in custom workflow configuration must now be unique.
- The MDM server no longer fails on startup with the `WorkflowServerComponent not found` error when `WorkflowTaskListener` is configured in `nme-executor.xml`.
- Fixed database connection leaks in event handlers.

16.3.0-patch12

- Updated the Kafka client library in the Kafka Provider Component to prevent compatibility errors.

16.3.0-patch11

- MDM Web App widgets no longer show a blank screen on load.
- Resolved memory issues in the Matching step with parallelism configured that could cause the application to crash.

16.3.0-patch9

- Infrastructure improvements to Ataccama Cloud environments.

16.3.0-patch6

- Users with the `MDM_Viewer` role correctly see only attributes of `cmo_type` when querying the MDM REST API.
- During publishing, the streaming event handler now correctly populates the `meta_origin` column as a string instead of incorrectly treating it as a number.

16.3.0-patch5

- `IgnoredComparisonColumns` ignores columns only on the selected entities.
- Batch load and workflow operations no longer fail when the parallel write strategy is enabled and matching is added to an entity after the initial data load.

16.3.0-patch3

- Selecting Details in the Orchestration section of MDM Admin Center expands rows correctly.
- The MDM server logs events related to the setup of the PostgreSQL database.
- The MDM server logs events related to Lookup items and VFS configuration.
- Security fix (CVE-2025-59250).

16.3.0-patch2

- The virtual file system (VFS) is used as expected in MDM cleansing plans. Previously, lookup files in these plans were incorrectly loaded from a temporary local folder and the VFS was ignored, leading to `recent unsafe memory access operation` error messages.

16.3.0-patch1

- Improved error messages for missing autocorrect configuration when using enrichments.
- When rejecting a matching proposal with the Exclude this decision from AI matching learning option selected, the web application now clearly reports an error if there are no records related to the proposal. If the linked entity exists, the proposal is rejected without issues.
- MDM Web App Admin Center correctly reports event handler execution times (started and finished), allowing you to more accurately assess the handler status.
- In HA setups, scheduled tasks use a dedicated thread pool, allowing the second processing node to remain stable.
- The default workflow and scheduler ID in MDM Server components is set to `MDM`.
- Streamlined the web application upgrade template in ONE Desktop: redundant workflow step checkboxes were removed, and group names are automatically converted to valid technical formats.

Initial release

- Record activity status can now be updated programmatically via REST API. You can change the activity on both master and instance entities. See Activate and deactivate records.
- When inserting and updating records through REST API, the `source_timestamp` parameter supports milliseconds according to the ISO 8601 date and time format standard. See Source timestamp formatting.
- In MDM REST API, using multiple values in preload filters works as expected.
- MDM Swagger UI now uses OAuth 2.0 authentication.
- Reading related records via REST API is correctly audited. Previously, only one read request was logged.
- You can now audit custom actions. See Configuring Audit Log > Other actions.
- Filtering by date in the MDM Web Application now works as expected.
- MDM Server can access S3 buckets using `AWS_POD_IDENTITY_TOKEN` authentication.
- History Plugin now tracks who last modified each record, the most recent transaction, and whether master records were authored or consolidated.
- Improved error messaging for relationship misconfigurations.
- Record details can be accessed by users without edit permissions.
- Editing a task pending approval no longer creates a new task.
- Tasks cannot be created without a draft if earlier tasks are closed in a failed state.
- Filtering tasks by ID behaves consistently regardless of whether the filter was added manually or using the Add value to filter option.
- Load and export operations in MDM Web App Admin Center display correctly even with large numbers of tasks (over 1M).
- When using the COMBO lookup type, active and inactive lookup values are easier to distinguish in the MDM Web Application.
- The WINDOW lookup type no longer allows custom values.
- Restarting the server through MDM Web App Admin Center is more robust. Previously, it would occasionally interfere with the metrics collector, cause connection leaks, or break batch loads.
- Improved performance when resolving record conflicts in Kafka streaming setups.
- Connecting to Kafka works as expected.
- MDM REST API no longer returns a server error when attempting to start an already active Kafka consumer.
- The history plugin generates the `nme-history.gen.xml` file without syntax errors.
- Initial MDM data load jobs no longer get stuck during the final commit phase.
- Improved performance when running the `processPurge` native service.
- Setting filters in MDM Web App no longer throws errors due to duplicate entries in the user settings database.
- In MDM Matching, `match_related_id` remains consistently linked with the given record ID after rematching, even if the order of input records changes.
- Fixed random failures when using parallelism in matching with statistics tracking enabled.
- Improved connection pooling in MDM, preventing the application from getting stuck due to the `idle-in-transaction` timeout.
- Improved the observability of long-running matching jobs.
- The `MasterDataConsolidation` process gracefully shuts down when encountering an `OutOfMemory` exception.
- A read misconfiguration in MDM Server no longer breaks the initialization order and prevents the server from starting.
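
The `source_timestamp` item in the MDM initial release list refers to ISO 8601 timestamps with millisecond precision. The Python sketch below shows one way a client could produce such a value before sending it to the MDM REST API. It assumes that naive datetimes represent UTC. The `format_source_timestamp` helper and the payload shape are hypothetical. Check Source timestamp formatting for the exact patterns MDM accepts, for example whether an offset such as `+00:00` or a `Z` suffix is expected.

```python
from datetime import datetime, timezone


def format_source_timestamp(ts: datetime) -> str:
    """Return an ISO 8601 string with millisecond precision (hypothetical helper)."""
    if ts.tzinfo is None:
        # Assumption: naive datetimes represent UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    # timespec="milliseconds" truncates microseconds to three fractional digits.
    return ts.isoformat(timespec="milliseconds")


# Illustrative payload only; the real request body is defined by the MDM REST API.
example = datetime(2026, 2, 18, 9, 30, 15, 123456, tzinfo=timezone.utc)
payload = {"source_timestamp": format_source_timestamp(example)}
print(payload["source_timestamp"])  # 2026-02-18T09:30:15.123+00:00
```

Note that `isoformat` truncates the extra digits instead of rounding them, so `.123456` becomes `.123`.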

RDM

16.3.0-patch14

- Improved support for long-running requests in Ataccama Cloud, preventing large data imports from timing out.
- Fixed connection leaks in RDM that were causing sessions to remain idle, potentially leading to lock or wait issues.

16.3.0-patch12

- The Save option now appears alongside Publish and Discard when creating or updating records with direct publishing configured.

16.3.0-patch11

- Email notification links now work correctly in Ataccama Cloud environments. Previously, the `$detail_href$` variable was incorrectly resolved, causing `HTTP 401 Unauthorized` errors when opening links.

16.3.0-patch6

- In the RDM Server runtime configuration (`server.runtimeConfig`), predefined connections now use OpenID authentication instead of Basic authentication to enforce 2FA-only access to the application. If needed, predefined connections can be overridden by custom connections of the same name.

16.3.0-patch3

- Security fix (CVE-2025-59250).

16.3.0-patch1

- Improved table loading performance for non-admin users in environments with extensive role configurations.
- Added a confirmation prompt (type "delete") to prevent accidental deletion of the active RDM configuration in the Admin Center.
- In Ataccama Cloud environments, some properties (such as `spring.mail.*`) from the `application.properties` configuration file can be successfully overridden using the `extraProperties` key in Helm charts.

Initial release

- Edit and Publish actions in the web app are available only after editing a record.
- The Enrich operation now works on entities of VIEW type.
- In RDM Views, publishing a record correctly updates the record status instead of moving it to Waiting for publishing.
- Improved stacktrace messaging when an invalid JSON payload is submitted.
- Optimized comparison queries to prevent MS SQL databases from getting stuck.
- In the RDM web app, tables load within seconds regardless of table count and permissions setup.
- Added an app variable to the RDM Model Explorer to control whether documentation is generated.
- RDM Server now consistently establishes connections to the RDM Web Application without Connection refused errors.

ONE Runtime Server

16.3.0-patch15

- Removed an incorrect `ssh://` prefix from the `GIT_CLIENT_URL` field, which was preventing SSH connections to repositories in Ataccama Cloud environments.

16.3.0-patch9

- Increased the default `client_max_body_size` in Ataccama Cloud environments, enabling larger bulk record imports in RDM without application timeouts.

Initial release

- Workflows page no longer incorrectly displays resumed workflows as running.

ONE Desktop

16.3.0-patch14

- Improvements to SAP RFC connections:
  - Added support for UTCLONG data type.
  - Added support for gateway host parameter in the connection configuration, resolving connectivity issues when connecting over SAP JCo.
  - SAP client no longer skips importing columns where metadata hasn't been updated in a while.
  - SAP metadata discovery now uses business types instead of storage types, so columns display their correct data type (for example, integer instead of binary).
- Notification handler now resubscribes automatically after five minutes of inactivity, preventing high-traffic environments from getting stuck.
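
The ONE Desktop patch14 note about the notification handler describes a resubscription that is triggered by inactivity. The Python sketch below illustrates that general pattern. It is not ONE Desktop's actual implementation. The sketch records when the last notification arrived and calls a resubscribe callback once five minutes (300 seconds) pass without another one. The `InactivityWatchdog` class, its methods, and the injectable clock are hypothetical.

```python
import time
from typing import Callable

FIVE_MINUTES = 300.0  # inactivity window from the release note, in seconds


class InactivityWatchdog:
    """Hypothetical helper: resubscribes when no notification arrives within `timeout`."""

    def __init__(
        self,
        resubscribe: Callable[[], None],
        timeout: float = FIVE_MINUTES,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._resubscribe = resubscribe
        self._timeout = timeout
        self._clock = clock
        self._last_activity = clock()

    def on_notification(self) -> None:
        # Any incoming notification resets the inactivity timer.
        self._last_activity = self._clock()

    def check(self) -> bool:
        """Call periodically; returns True if a resubscription was triggered."""
        if self._clock() - self._last_activity >= self._timeout:
            self._resubscribe()
            # Restart the window so the next check does not resubscribe again immediately.
            self._last_activity = self._clock()
            return True
        return False
```

In a real handler, `check()` would run on a timer or scheduler thread. Injecting `clock` lets you test the logic without waiting five minutes.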

16.3.0-patch13

- ONE Metadata Reader step correctly reads properties of Referenced Object Array type.
- Collibra Writer step now properly groups relations to prevent overwrites in REPLACE mode and allows specifying Source or Target asset as the relationship identifier.
- Added a JSON Assigner step for creating and modifying JSON data, outputting valid JSON payloads as STRING or JSON type.

16.3.0-patch9

- Plans using Lookup step no longer fail with a `NoSuchMethodError` message.

Initial release

- ONE Desktop job results are no longer retained in the Executor Bucket (MinIO). The Delete MinIO Job result cleanup option is applied by default.
- Unity Catalog column and data type mapping works correctly with Map to Query functionality in Databricks.
- ONE Desktop successfully connects to MinIO instances behind a proxy.
- SQL Commands are available in the table context menu.
- Metadata Reader step correctly filters embedded object array data by specific properties instead of returning all values.
- Metadata Reader step correctly retrieves `projectStats` data for monitoring projects.
- CSV viewer displays a message when encountering lines exceeding the maximum record length (65,536 characters) instead of silently skipping or truncating them.
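
The CSV viewer note mentions a maximum record length of 65,536 characters. To find lines that exceed this limit before you open a file, you could use a small Python check like the one below. It is a sketch with hypothetical helper names and several assumptions. It measures physical lines, excluding line terminators, rather than parsed CSV records, so a quoted field that contains newlines is counted line by line. It also assumes UTF-8 encoding.

```python
from typing import Iterable, List

MAX_RECORD_LENGTH = 65_536  # limit stated in the release note, in characters


def find_long_lines(lines: Iterable[str], limit: int = MAX_RECORD_LENGTH) -> List[int]:
    """Return 1-based numbers of lines longer than `limit` characters.

    Line terminators are excluded from the length (an assumption about how the
    viewer counts characters).
    """
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.rstrip("\r\n")) > limit
    ]


def scan_csv_file(path: str) -> List[int]:
    # newline="" keeps original line terminators, which find_long_lines strips before measuring.
    with open(path, encoding="utf-8", newline="") as handle:
        return find_long_lines(handle)
```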
