dbt Lineage Scanner

Scanned and supported objects

While we strive for comprehensive lineage capture, certain dataflows and transformations might be incomplete or unavailable due to technical constraints. We continuously work to expand coverage and accuracy.

Resources scanned

The dbt scanner works together with the scanner for the underlying database technology. To determine the supported scope, see the documentation for that database technology scanner.

Limitations

The following dbt features are not supported:

- dbt Semantic Layer using MetricFlow (introduced in dbt v1.6).
- Cross-project references, as part of dbt Mesh (introduced in dbt v1.6).
- dbt artifacts (projects, models) are not available as catalog items.
- Enriching catalog items with metadata defined in dbt. For instance, custom metadata or tags defined on a table or column level in dbt aren’t available on catalog items.
- dbt tests are currently not supported.

Supported connectivity

- Cloud API-based dbt scanner: See Cloud API-based dbt scanner.
  - Connector type: API.
  - Authentication method: Service Account Token.
- File-based scanner: See File-based dbt scanner.
  - Local folder with extracted metadata.

Cloud API-based dbt scanner

The Cloud API-based scanner extracts metadata and lineage from all dbt projects that the supplied dbt API token can access.

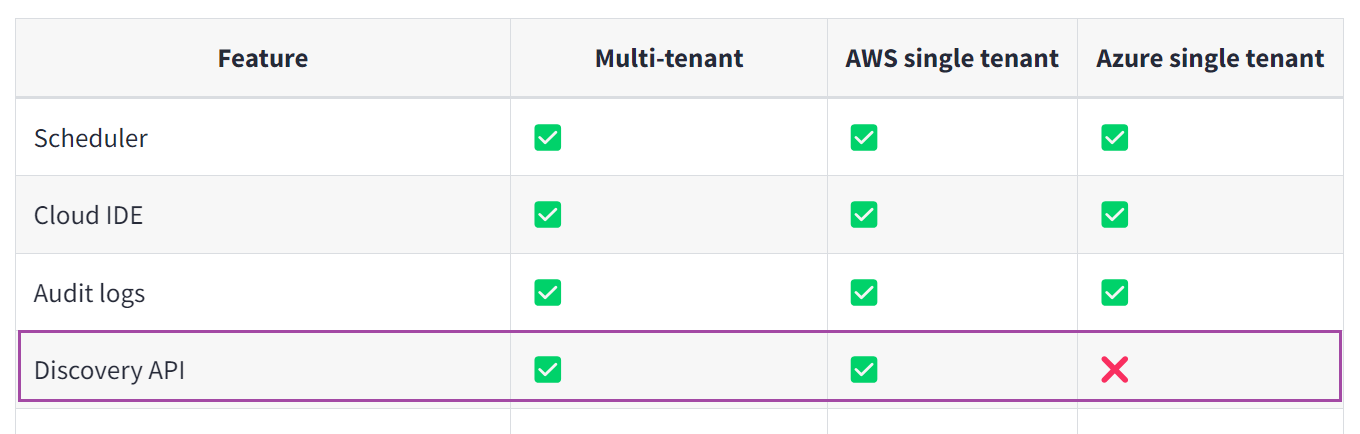

Discovery vs Admin API

The choice between `DISCOVERY_API` and `ADMIN_API` depends on the available dbt Cloud deployments and their respective features, as detailed below.

For the most current information, see the dbt Cloud documentation.

Scanner Configuration

| Property | Description |
|---|---|
| `name` | Unique name for the scanner job. |
| `sourceType` | Specifies the source type to be scanned. Must contain `DBT`. |
| `description` | A human-readable description of the scan. |
| | List of Ataccama ONE connection names for future automatic pairing. |
| `connection.type` | Specifies which API to use. Possible values: `DISCOVERY_API`, `ADMIN_API`. |
| `connection.adminApiUrl` | dbt Cloud Admin (REST) API URL. See the official dbt documentation: Administrative API. |
| `connection.discoveryApiUrl` | Your dbt Discovery (GraphQL) API URL endpoint. Required only if you are using `DISCOVERY_API`. See the official dbt documentation: Query the Discovery API. |
| `connection.accessToken` | dbt service account token. To create a token, see Service account tokens. |
| `onlyProductionEnvironments` | If set to `true`, only production environments are scanned. See the official dbt documentation: Set as production environment. |
| `includeProjects` | Restricts lineage extraction to the specified list of dbt projects. |
| `excludeProjects` | List of excluded dbt projects. |
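The constraints described for these properties can be checked before a scan is started. The following is a minimal Python sketch and not part of the scanner itself: the function name `validate_dbt_cloud_config` is hypothetical, and treating `name` and `accessToken` as mandatory is an assumption that this page does not state explicitly. The property names are taken from the JSON example in this section.

```python
from __future__ import annotations

from typing import Any

# API types named in this section.
ALLOWED_API_TYPES = {"DISCOVERY_API", "ADMIN_API"}


def validate_dbt_cloud_config(config: dict[str, Any]) -> list[str]:
    """Return human-readable problems found in one scannerConfigs entry."""
    problems: list[str] = []
    # Assumption: a scanner job always needs a name.
    if not config.get("name"):
        problems.append("name is missing")
    if config.get("sourceType") != "DBT":
        problems.append("sourceType must be DBT")

    connection = config.get("connection") or {}
    api_type = connection.get("type")
    if api_type not in ALLOWED_API_TYPES:
        problems.append("connection.type must be DISCOVERY_API or ADMIN_API")
    # The Discovery API URL is only needed when the Discovery API is used.
    if api_type == "DISCOVERY_API" and not connection.get("discoveryApiUrl"):
        problems.append("connection.discoveryApiUrl is required for DISCOVERY_API")
    # Assumption: a token is needed whichever API is selected.
    if not connection.get("accessToken"):
        problems.append("connection.accessToken is missing")
    return problems


example = {
    "name": "dbt ATA prod - Discovery API",
    "sourceType": "DBT",
    "connection": {
        "type": "DISCOVERY_API",
        "adminApiUrl": "https://cloud.getdbt.com",
        "discoveryApiUrl": "https://metadata.cloud.getdbt.com/graphql",
        "accessToken": "@@ref:ata:[DBT_TOKEN]",
    },
}
print(validate_dbt_cloud_config(example))  # prints []
```

Returning a list of problems rather than raising on the first one lets all configuration mistakes be reported in a single pass.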

    {
      "scannerConfigs": [
        {
          "name": "dbt ATA prod - Discovery API",
          "sourceType": "DBT",
          "description": "Scan dbt sources",
          "onlyProductionEnvironments": true,
          "includeProjects": [
            "Dwh",
            "Customer Mart"
          ],
          "excludeProjects": [],
          "connection": {
            "type": "DISCOVERY_API",
            "adminApiUrl": "https://cloud.getdbt.com",
            "discoveryApiUrl": "https://metadata.cloud.getdbt.com/graphql",
            "accessToken": "@@ref:ata:[DBT_TOKEN]"
          }
        }
      ]
    }

File-based dbt scanner

The file-based dbt scanner caters primarily to dbt Core but can also be used for dbt Cloud. This method is particularly useful for environments where direct API access is limited or unavailable.

Limitations

The file-based integration is limited in certain capabilities compared to the Cloud API integration, including:

- Separate configuration requirements for each dbt project.
- No support for runtime metadata extraction.

File-based scanner configuration

| Property | Description |
|---|---|
| `name` | Unique name for the scanner job. |
| `sourceType` | Specifies the source type to be scanned. Must contain `DBT`. |
| `description` | A human-readable description of the scan. |
| | List of Ataccama ONE connection names for future automatic pairing. |
| `file.path` | Path to dbt artifacts. |
| `file.manifest` | dbt manifest file. Contains model, source, tests, and lineage data. |
| `file.catalog` | dbt catalog file (optional). Contains schema data. |
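Before pointing the scanner at a folder, it can help to confirm that the manifest is readable and contains the expected lineage data. The sketch below is an illustration only, not the scanner's own logic: the function name `read_model_dependencies` and the sample project name `shop` are hypothetical. It relies on the standard layout of dbt's `manifest.json`, where each entry under `nodes` carries a `resource_type` and a `depends_on.nodes` list.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List, Optional


def read_model_dependencies(
    folder: str, manifest: str = "manifest.json", catalog: Optional[str] = None
) -> Dict[str, List[str]]:
    """Map each dbt model's unique ID to the IDs of the nodes it depends on."""
    manifest_path = Path(folder) / manifest
    if not manifest_path.is_file():
        raise FileNotFoundError(f"manifest not found: {manifest_path}")
    # The catalog file is optional, so a missing one is only reported.
    if catalog is not None and not (Path(folder) / catalog).is_file():
        print(f"note: catalog not found: {Path(folder) / catalog}")

    with manifest_path.open(encoding="utf-8") as handle:
        data = json.load(handle)

    dependencies: Dict[str, List[str]] = {}
    for unique_id, node in data.get("nodes", {}).items():
        # Tests, seeds, and snapshots also live under "nodes"; keep models only.
        if node.get("resource_type") == "model":
            dependencies[unique_id] = node.get("depends_on", {}).get("nodes", [])
    return dependencies


# Demonstration with a tiny, made-up manifest written to a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    sample = {
        "nodes": {
            "model.shop.orders": {
                "resource_type": "model",
                "depends_on": {"nodes": ["source.shop.raw.orders"]},
            }
        }
    }
    (Path(tmp) / "manifest.json").write_text(json.dumps(sample), encoding="utf-8")
    dependencies = read_model_dependencies(tmp)

print(dependencies)  # prints {'model.shop.orders': ['source.shop.raw.orders']}
```

An empty result for a project that is known to contain models usually means the wrong folder or an outdated artifact was supplied.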

    {
      "scannerConfigs": [
        {
          "name": "My dbt on-prem project",
          "sourceType": "DBT",
          "description": "Scan dbt on-prem project",
          "file": {
            "path": "c:\\dbt_input_files",
            "manifest": "manifest.json",
            "catalog": "catalog.json"
          }
        }
      ]
    }

Metadata extraction details

Metadata extracted from dbt projects is critical for maintaining up-to-date and accurate data landscapes. The following table outlines the specific types of metadata available for extraction from dbt projects, particularly when using dbt Cloud:

| Metadata type | Supported in dbt version | Description |
|---|---|---|
| Runtime metadata | dbt Cloud only | Includes dynamic metadata viewable in diagrams, such as dbt model descriptions, job names, and execution times. |
| dbt model description | All versions | Detailed descriptions of each model, which can include information like the purpose of the model, its design, and key considerations. |
| dbt job name | All versions | Name of the dbt job in which the model was last executed, which is crucial for tracking and managing dbt jobs. |
| Last run timestamp | All versions | Timestamp indicating when the model was last run, useful for monitoring model freshness and scheduling updates. |
| Last successful run timestamp | Optional | Timestamp of the last successful run, providing insights into the reliability and stability of the dbt processes. |
| Last run status | All versions | Current status of the last run, which helps in identifying successes or failures in recent executions. |
| Last run duration | All versions | Duration of the last run, important for performance analysis and optimization. |
| Last run processed rows | Certain databases | Number of rows processed in the last run, applicable only to specific databases and providing a measure of the volume of data handled. |
| Identification of hardcoded source object names | Backend only | Identifies dbt models where source object names are hardcoded, which is a development anti-pattern. |
| Identification of orphan CTEs | Backend only | Identifies dbt models with unreferenced CTEs. |

This detailed metadata extraction is instrumental in maintaining an efficient, transparent, and optimized data management environment. The information enables teams to monitor dbt project health, track changes, and plan improvements based on empirical data.

Supported database platforms

Attribute-level lineage is supported as follows:

- Yes*: For dbt Python models, only datastore-level lineage is supported. Column lineage for Python models is in development.
- Partially*: Attribute lineage is available only when:
  - dbt documentation generation is enabled (so `catalog.json` is available).
  - The dbt model is an SQL model, not Python, and it uses ANSI SQL syntax.
- Other database technologies using ANSI SQL syntax can be supported with minimal effort (such as Oracle, MySQL).
- Once a specific internal database technology scanner is available (for example, for Azure Synapse), full attribute-level lineage will be available as well.

| Database platform | dbt Cloud support | Datastore level lineage | Attribute level lineage | Python models support in dbt |
|---|---|---|---|---|
| Snowflake | Yes | Yes | Yes* | Yes |
| PostgreSQL | Yes | Yes | Partially* | No |
| Amazon Redshift | Yes | Yes | Partially* | No |
| Microsoft Fabric | Yes | Yes | Partially* | Yes |
| Databricks | Yes | Yes | Partially* | Yes |
| Google BigQuery | Yes | Yes | Planned | Yes |
| Apache Spark | Yes | No | No | No |
| Starburst or Trino | Yes | No | No | No |
| Azure Synapse | No | Yes | Partially* | No |
| Other | No | No | No | No |
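The support levels in this section can be read as a simple decision rule. The sketch below encodes the attribute-level lineage values from the table together with the Yes* and Partially* conditions described earlier in this section. It is an illustration of how to read the table, not the scanner's implementation, and the function name `attribute_lineage_available` and its parameters are hypothetical.

```python
# Attribute-level lineage support per platform, copied from the table.
ATTRIBUTE_LINEAGE = {
    "Snowflake": "Yes*",
    "PostgreSQL": "Partially*",
    "Amazon Redshift": "Partially*",
    "Microsoft Fabric": "Partially*",
    "Databricks": "Partially*",
    "Google BigQuery": "Planned",
    "Apache Spark": "No",
    "Starburst or Trino": "No",
    "Azure Synapse": "Partially*",
}


def attribute_lineage_available(
    platform: str, model_language: str, has_catalog: bool, uses_ansi_sql: bool
) -> bool:
    """Return True when attribute-level lineage is expected for a dbt model."""
    # Platforms not listed fall under the "Other" row.
    level = ATTRIBUTE_LINEAGE.get(platform, "No")
    # Python models get datastore-level lineage only, on every platform.
    if model_language.lower() != "sql":
        return False
    if level == "Yes*":
        return True
    if level == "Partially*":
        # Requires catalog.json and ANSI SQL syntax.
        return has_catalog and uses_ansi_sql
    return False  # "No" and "Planned"


print(attribute_lineage_available("PostgreSQL", "sql", has_catalog=True, uses_ansi_sql=True))
print(attribute_lineage_available("Snowflake", "python", has_catalog=True, uses_ansi_sql=True))
```

The first call prints `True` and the second prints `False`, because Python models are limited to datastore-level lineage.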

Additional features

The dbt scanner supports the same SQL anomaly detection checks as the Snowflake scanner. See SQL DQ (anomaly) detection for more details.

FAQ

- How much time does it take to scan lineage from dbt with around 500 models?

  It depends on the connection method used. Here are some estimates:

  - File input: 1 min
  - Discovery API: 2 min
  - Admin API: 6 min
