Snowflake Pushdown Processing

Processing for your Snowflake connection can be handled in two ways:

-

Use Ataccama ONE Data Processing Engines (DPEs) for all data quality processing (DQ evaluation jobs, profiling, monitoring projects, and data observability), which may require multiple engines and data transfer depending on the project’s scale.

-

Run processing directly in Snowflake by enabling pushdown.

This article outlines the benefits and limitations of Snowflake pushdown processing in ONE and provides setup instructions.

| Get in touch with your Customer Success Manager to enable this feature. |

| Pushdown processing is not available in ONE Desktop. |

Why use pushdown processing?

Using pushdown processing can lead to improvements in performance, security and infrastructure, and subsequently, scalability.

-

Performance. Evaluate millions of records in seconds.

-

Security. Data processing is executed directly in Snowflake, and results (and invalid samples, if configured) are returned. This means there is no transfer of data required or any external services or servers; all processes run on secured and governed Snowflake warehouses.

From a user perspective, the results are stored and appear the same as when DPE handles processing. -

Infrastructure. Utilize your existing infrastructure to evaluate data—no need to set up and manage new processing servers.

Prerequisites

There are two key parts when it comes to enabling pushdown processing. You must:

-

Configure Snowflake to allow Ataccama to transfer functions. Snowflake configuration is via worksheet which defines the working database and grants access to defined user roles.

-

Enable pushdown processing on the connection.

It is also necessary to make sure that DPE allows for automatic synchronization of user-defined functions. See DPE Configuration.

Configure Snowflake pushdown processing

Pushdown processing is enabled on the connection level.

To create a new Snowflake connection, follow the instructions in Snowflake Connection. To enable pushdown profiling on an existing connection:

-

In Knowledge Catalog > Sources, select your Snowflake source.

-

Select the Connections tab.

-

For the required connection, use the three dots menu and select Edit.

-

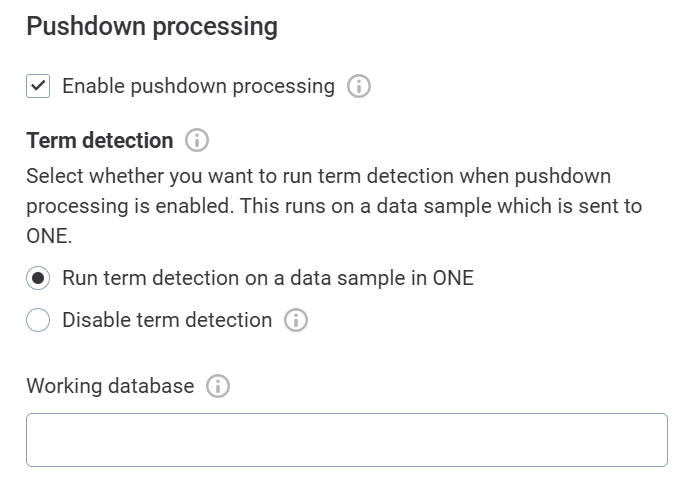

In the Pushdown processing section, select Enable pushdown processing.

-

You now need to choose whether you want to run term detection when pushdown processing is enabled, or disable term detection.

Term detection jobs (domain detection and fingerprints for AI term suggestions) can’t run in pushdown, so if you run term detection with pushdown enabled it runs on a data sample which is sent to ONE. -

Select Run term detection on a data sample in ONE to trigger detection jobs alongside profiling. Otherwise, select Disable term detection.

If you disable term detection, no terms are applied during profiling or documentation flows, and no term suggestions are shown for assets from this connection.

-

After enabling pushdown processing on a new or existing source:

-

In Working database, provide the name of the database you want to use for storing the stage, functions, and temporary tables created during profiling. You have two options:

-

Use the database that is being processed (default).

-

Create a custom database (recommended).

Additional setup in Snowflake is required. See Create the working database in Snowflake.

-

-

In Working schema optionally specify a schema of your choice. If you use a custom schema, change the schema name in Step 6 of Create the working database in Snowflake. If you leave this blank, the

publicschema is used as default. -

Select Add JDBC role to add a JDBC role if you need to share the Snowflake pushdown setting across different credentials (for example, to a common underlying role).

Create the working database in Snowflake

In Snowflake, you need to execute the following SQL commands to create a working database and grant access to defined user roles.

Make sure you run these commands from a SYSADMIN or ACCOUNTADMIN Snowflake account.

You can change role using, for example, USE ROLE ACCOUNTADMIN; or in the user interface, by selecting your profile name in the upper-right corner and choosing ACCOUNTADMIN from the role dropdown.

|

In Snowflake, select + Worksheet, and follow these instructions.

Step 1: Create a database

To create a new database, execute the following SQL command. Use the name you provided in the connection settings.

CREATE DATABASE IF NOT EXISTS <working_db>;

| The database can be deleted if you no longer want to use pushdown in ONE. Multiple users can reuse the same database if they have access to it. |

Step 2: Create a stage

Using the following command, create a stage called _ATC_ONE_STAGE.

CREATE STAGE IF NOT EXISTS _ATC_ONE_STAGE;

It is possible to use a custom stage name but if you do you need to additionally run GRANT CREATE STAGE ON SCHEMA public TO ROLE <pushdown_role>; in step 6, and use your custom name rather than _ATC_ONE_STAGE in step 7.

|

Step 3: Create a role for Ataccama

This role gives ONE permission to load data into your database: this role needs to have read permissions on your data, and write permissions for the working database. We don’t recommended reusing this role for other operations.

CREATE ROLE IF NOT EXISTS <pushdown_role>; GRANT ROLE <read_data_role> TO ROLE <pushdown_role>;

| This role needs to be specified in the JDBC connection string, for example: |

jdbc:snowflake//<snowflake_url>?db=<database_name>&warehouse=<warehouse_name>&role=<pushdown_role>&<other_param>=<other_param_value>`

Step 4: Assign role to users

Assign your newly-created role to the relevant Snowflake users.

GRANT ROLE <pushdown_role> TO USER <sample_user>; GRANT ROLE <pushdown_role> TO USER <another_user>

Step 5: Grant access to database

Grant access on the database you created in step 1 to the role you created in step 3. If no roles are specified here, all users have the access to the database.

GRANT USAGE ON DATABASE <working_db> TO ROLE <pushdown_role>;

Step 6: Grant access to schema

Grant access on the public schema of the database to the role you created in step 3.

GRANT USAGE ON SCHEMA public TO ROLE <pushdown_role>; GRANT CREATE TABLE ON SCHEMA public TO ROLE <pushdown_role>; GRANT CREATE SEQUENCE ON SCHEMA public TO ROLE <pushdown_role>; GRANT CREATE FUNCTION ON SCHEMA public TO ROLE <pushdown_role>;

If you specified a schema in Working schema make sure to update the schema name here accordingly.

If you left it blank, public is used by default and no changes are required.

|

Step 7: Grant access to stage

Grant access on the stage of the database to the role you created in step 3.

GRANT READ ON STAGE _ATC_ONE_STAGE TO ROLE <pushdown_role>; GRANT WRITE ON STAGE _ATC_ONE_STAGE TO ROLE <pushdown_role>;

If you are using a custom stage name and not _ATC_ONE_STAGE make sure to modify these commands accordingly.

|

Processing in Snowflake

The data quality processing configurations available in ONE are translated to Snowflake SQL language, either by utilizing native SQL functions or creating new functions. This is done by DPE so at least one DPE is still required to handle the translation of these configurations to Snowflake functions.

Pushdown uses Snowflake warehouse for computation, giving you easy control of resources used for computation. The size of Snowflake warehouse used determines the performance, so processing could be impacted if a small warehouse is used.

When can you use pushdown?

Pushdown processing in Snowflake can be used when running:

-

Profiling.

To optimize performance, some of the most infrequently used profiling stats such as frequency groups and quantiles for non-numeric attributes aren’t calculated. -

DQ evaluation using supported rules.

-

Monitoring projects.

-

Data observability (excluding term detection and AI-powered anomaly detection).

| For optimized performance and better cost efficiency, sample profiling always runs on Ataccama DPEs. Processing of very small data sets is better suited for non-pushdown operations because of SQL overhead. |

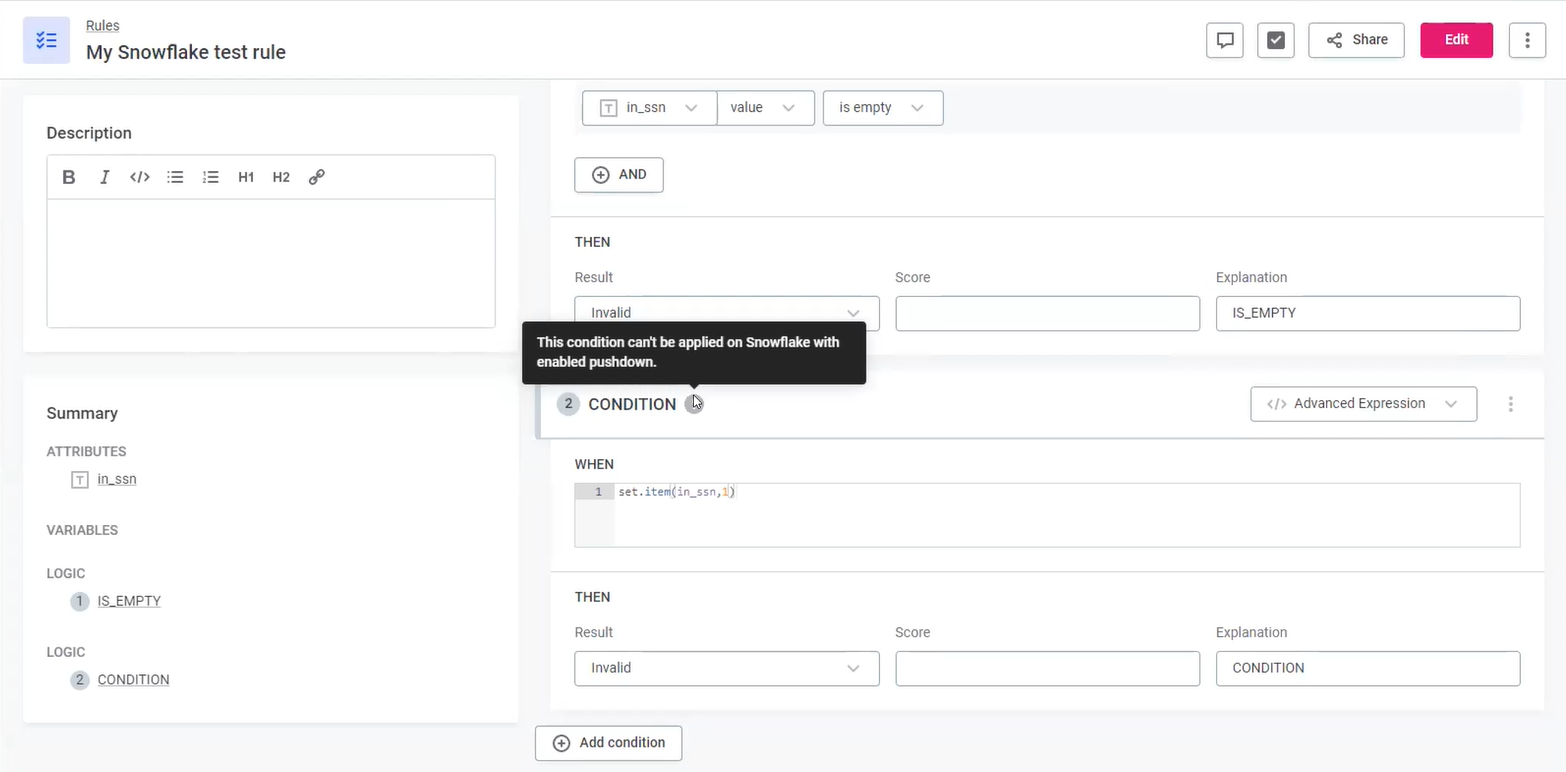

Processing is typically executed externally without a visual indication on the platform, except when an unsupported configuration is used. In such cases you see the following information: This condition can’t be applied on Snowflake with enabled pushdown. Equally, in monitoring projects, if you try and apply an incompatible rule to a catalog item, you see a warning.

To use pushdown processing, you need to remove the rule or define an alternative configuration. For more information, see Supported rules.

Supported rules

The following rules are supported in Snowflake pushdown:

-

Rules created using the Condition Builder, as expression functions available in the condition builder are supported by pushdown.

-

Parametric rules.

-

Advanced Expression rules and rules using variables, as long as they include only Supported functions and operators.

| Aggregation rules and component rules are not currently supported. |

Supported functions and operators

Supported operators

-

+(concatenate) -

AND -

NOT -

OR -

XOR -

< -

⇐ -

<>,!= -

=,== -

> -

>= -

case -

in -

is -

is in -

is not -

is not in -

is not null -

is null

Supported functions

-

addition -

avg -

avgif -

bitor -

bitand -

bitneg -

bitxor -

capitalize -

capitalizeWithException -

coding.fromBase64 -

coding.md5,encode.md5 -

coding.toBase64,encode.base64 -

concatenateif -

containsWord -

count -

countDistinct -

countDistinctIf -

countNonAsciiLetters -

countUnique -

countUniqueif -

countif -

dateAdd -

dateDiff -

datePart -

dateTrunc -

decode -

diceCoefficient -

distinct -

division -

doubleMetaphone -

editDistance -

eraseSpacesInNames -

find -

first -

firstif -

frequency -

geoDistance -

getDate -

getMilliseconds -

getParameterValue -

getRuntimeVersion -

hamming -

iif -

indexOf -

isInFile -

isNotInFile -

isNumber -

jaccardCoefficient -

jaroWinkler -

last -

lastIndexOf -

lastif -

left -

length -

levenshtein -

like -

lower -

math.abs -

math.acos -

math.asin -

math.atan -

math.ceil, math.ceiling -

math.cos -

math.e -

math.exp -

math.floor -

math.log -

math.log10 -

math.longCiel,math.longCeiling -

math.longFloor -

math.pi -

math.pow -

math.round -

math.sin -

math.sqrt -

math.srq -

math.tan -

matches -

maximum -

maximumif -

max -

metaphone -

min -

minimum -

minimumif -

modus -

modusif -

multiplication -

ngram -

now -

nvl -

preserveCase -

random -

randomUUID -

removeAccents -

replace -

replicate -

right -

safeMax -

safeMin -

set.contains -

soundex -

sortWords -

squeezeSpaces -

substituteAll -

substituteAll -

substituteMany -

subtraction -

sum -

sumif -

toDate -

toDateTime -

toDateTime -

toFloat -

toInteger -

toLong -

toString -

today -

transliterate -

trashConsonants -

trashDiacritics -

trashNonDigits -

trashNonLetters -

trashNonLetters -

trashVowels -

trim -

trimLeft -

trimRight -

upper -

word -

wordCombinations -

wordCount

Current limitations

-

Profiling is not optimized to run against complicated Snowflake views built with large tables that are not materialized. Data quality computation should not be affected as much by this.

-

Post-processing plans and load components are not supported.

-

Not all rules are supported. For full details, see Supported rules.

Troubleshooting

Processing fails with message Processing aborted due to error 300010:2765523699

- Problem

-

Snowflake pushdown processing fails with the following error

Processing aborted due to error 300010:2765523699; incident [incident_number]. - Cause

-

This issue has to do with internal Snowflake processing and typically occurs when the table contains a column of type

MAP(STRING, OBJECT). Some other complex object types might also result in the same error. - Possible solution

-

Such columns cannot be detected in advance as all

MAPandOBJECTtypes are reported as the generalOBJECTtype. As a workaround, the following options are available:-

Process the table in DPE instead of using pushdown processing.

-

Exclude all OBJECT types from pushdown processing.

To do this, set the JDBC driver property

ata.pushdown.ignore.object.typetotrueand remove any DQ rules from columns ofOBJECTtype. With this setting, only non-OBJECTtypes will be processed.The property is configured on the source connection. For details, see Add Driver Properties.

-

Was this page useful?