Snowflake Connection

Create a source

To connect to Snowflake:

- Navigate to Data Catalog > Sources.
- Select Create.
- Provide the following:
  - Name: The source name.
  - Description: A description of the source.
  - Deployment (Optional): Choose the deployment type. You can add new values if needed. See Lists of Values.
  - Stewardship: The source owner and roles. For more information, see Stewardship.

| Alternatively, add a connection to an existing data source. See Connect to a Source. |

Add a connection

- Select Add Connection.
- In Select connection type, choose Relational Database > Snowflake.
- Provide the following:
  - Name: A meaningful name for your connection. This is used to indicate the location of catalog items.
  - Description (Optional): A short description of the connection.
  - DPE label (Optional): Assign the processing of a data source to a particular data processing engine (DPE) by entering the DPE label assigned to the engine. For more information, see DPM and DPE Configuration in DPM Admin Console.
  - JDBC: A JDBC connection string pointing to the IP address or the URL where the data source can be reached. For a list of supported sources and JDBC drivers, see Supported Data Sources.

    Depending on the data source and the authentication method used, additional properties might be required for a successful connection. See Add Driver Properties.
- In Snowflake pushdown processing, select Pushdown processing enabled if you want to run profiling entirely in the Snowflake data warehouse.

  During query pushdown, profiling results and data samples are sent to Ataccama ONE. To learn more, see Snowflake Pushdown Processing.

  Full profiling does not include domain detection, which is always executed locally by Data Processing Engine (DPE).

  For pushdown processing to function, the credentials added for this connection need to be for a Snowflake user with write permissions, as pushdown processing involves writing into the working database (including creating tables).
  - Follow the instructions found in Snowflake Pushdown Processing to configure pushdown processing.
- In Additional settings:
  - Select Enable exporting and loading of data if you want to export data from this connection and use it in ONE Data or outside of ONE.

    If you want to export data to this source, you also need to configure write credentials. See Connection credentials.

    Consider the security and privacy risks of allowing the export of data to other locations.
  - Select Enable analytical queries if you want to create data visualizations in Data Stories based on catalog items from this connection.
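As a rough illustration of the JDBC field described above, the following sketch assembles a connection string in the general form accepted by the Snowflake JDBC driver. The account identifier, warehouse, and database names are placeholders invented for this example, and `build_jdbc_url` is a hypothetical helper, not part of Ataccama ONE or Snowflake.

```shell
#!/bin/sh
# Hypothetical helper: prints a Snowflake JDBC URL for the given
# account identifier, warehouse, and database (all placeholder values).
build_jdbc_url() {
  printf 'jdbc:snowflake://%s.snowflakecomputing.com/?warehouse=%s&db=%s\n' "$1" "$2" "$3"
}

# Example with made-up values; replace them with your own.
build_jdbc_url "myorg-myaccount" "COMPUTE_WH" "SALES_DB"
```

The warehouse named in this string is the one used for processing, so it is worth deciding on it before saving the connection.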

Add credentials

Different sets of credentials can be used for different tasks. One set of credentials must be set as default for each connection.

To determine whether you need to configure more than a single set of credentials, see Connection credentials.

- Select Add Credentials.
- Choose an authentication method and proceed with the corresponding step:
  - Username and password: Basic authentication using your username and a password.
  - Key-pair authentication: Enhanced security authentication as an alternative to username and password authentication.
  - OAuth credentials: Use OAuth 2.0 tokens to provide secure delegated access.
  - OAuth user SSO credentials: Use an external identity provider Single Sign-On (SSO).

| These sections include references to Azure AD, which you might also know as Microsoft Entra ID. |

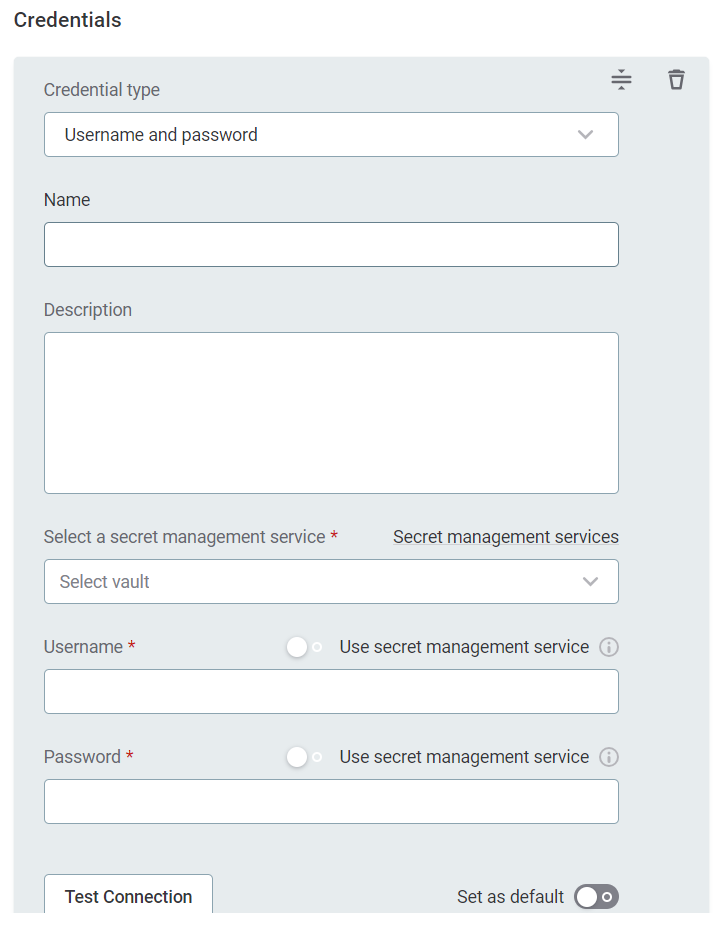

Username and password

- Select Username and password.
- Provide the following:
  - Name (Optional): A name for this set of credentials.
  - Description (Optional): A description for this set of credentials.
  - Select a secret management service (optional): If you want to use a secret management service to provide values for the following fields, specify which secret management service should be used. After you select the service, you can enable the Use secret management service toggle and instead provide the names under which the values are stored in your key vault. For more information, see Secret Management Service.
  - Username: The username for the data source. Alternatively, enable Use secret management service and provide the name this value is stored under in your selected secret management service.
  - Password: The password for the data source. Alternatively, enable Use secret management service and provide the name this value is stored under in your selected secret management service.
- If you want to use this set of credentials by default when connecting to the data source, select Set as default.

  See also Connection credentials.
- Proceed with Test the connection.

| Snowflake’s “secure by default” policy means that password-only authentication is not supported for new accounts (from September 30th, 2024): key-pair authentication is required, as per the official Snowflake documentation. Proceed to Key-pair authentication. |

Key-pair authentication

Before configuring key-pair authentication, you need to generate a key pair and configure it in Snowflake.

Prerequisites: Generate and configure keys

1. Generate your private key using the openssl tool:

   openssl genrsa 2048 | openssl pkcs8 -topk8 -v1 PBE-SHA1-3DES -inform PEM -out rsa_key.p8

   When prompted, provide a password of your choosing.
2. Extract the public key from your private key:

   openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub

   Enter the password you created in the previous step.
3. Configure the public key in Snowflake:
   - Go to Worksheets and run the following SQL command:

     ALTER USER <user> SET RSA_PUBLIC_KEY = '<public key value>'

     - You must run this command from the ACCOUNTADMIN role or another role with appropriate privileges.
     - Replace <user> with the actual Snowflake username.
     - Replace <public key value> with the content from your rsa_key.pub file, excluding the header and footer lines. The value should be a single line of characters.

     Alternatively, you can set the public key through the UI: Go to Admin → Users & Roles → Users → Select user → Edit → Advanced → RSA Public Key.
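Preparing the single-line key value by hand is error-prone, so here is a minimal sketch of one way to do it. It assumes rsa_key.pub is a standard PEM file whose header and footer lines start with five dashes. The helper names `flatten_public_key` and `alter_user_sql` and the user name JSMITH are hypothetical and used only for illustration.

```shell
#!/bin/sh
# Print the body of a PEM public key as one line, dropping the
# "-----BEGIN/END PUBLIC KEY-----" header and footer lines.
flatten_public_key() {
  grep -v '^-----' "$1" | tr -d '\r\n'
}

# Print the ALTER USER statement for a user name and a flattened key.
alter_user_sql() {
  printf "ALTER USER %s SET RSA_PUBLIC_KEY = '%s';\n" "$1" "$2"
}

# Only runs when rsa_key.pub from the previous step is present.
# JSMITH is a placeholder user name.
if [ -f rsa_key.pub ]; then
  alter_user_sql "JSMITH" "$(flatten_public_key rsa_key.pub)"
fi
```

The printed statement can then be pasted into a Snowflake worksheet and run with a suitably privileged role.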

Configure the connection

-

Select Username and password.

-

In Username, add the username associated with your Snowflake database. This should be the same user you configured in the prerequisites, i.e., in the

ALTER USERcommand in step 3. -

In Password, enter any value. While the password isn’t used for key-pair authentication, the field cannot be left empty.

-

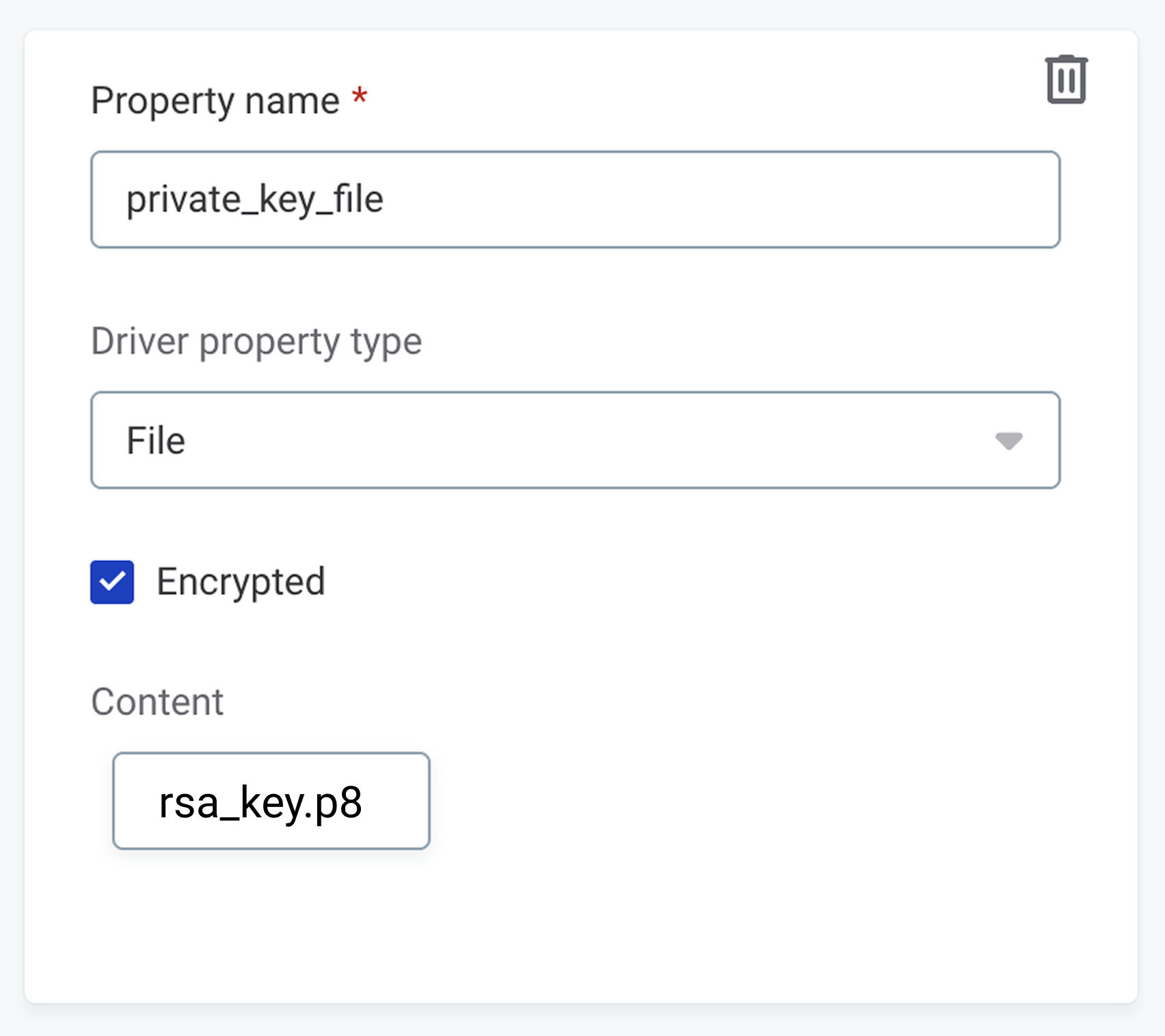

Use Add Driver property to add the private key file:

-

Driver property name:

private_key_file -

Driver property type:

File -

Encrypted: True

-

In Content, upload your

rsa_key.p8file

-

-

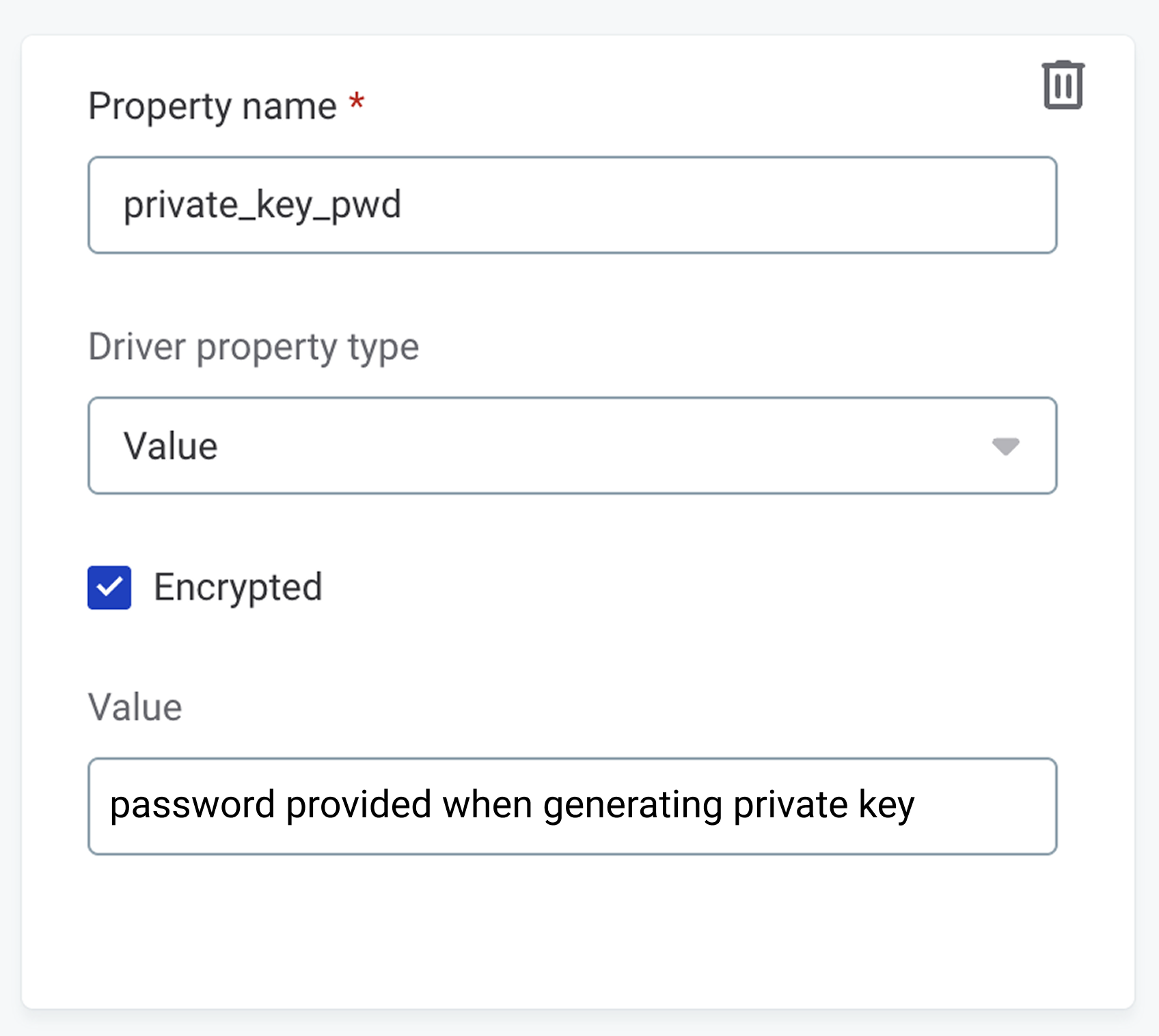

Use Add Driver property to add the private key password:

-

Driver property name:

private_key_pwd -

Driver property type:

Value -

Encrypted: True

-

In Value, provide the password you used when generating the private key

-

Key-pair authentication with JWT authenticator

This method uses Snowflake’s JWT authenticator with key-pair authentication. It follows the same principle as standard key-pair authentication but explicitly specifies the JWT authenticator in the driver properties.

Prerequisites: Generate and configure keys

Follow the same key generation and configuration steps as described in the Key-pair authentication section above.

Configure the connection

- Select Username and password.
- Provide the following:
  - Name (Optional): A name for this set of credentials.
  - Description (Optional): A description for this set of credentials.
  - Username: The username for the data source. This should be the same user you configured with the public key in Snowflake, that is, in the ALTER USER command in step 3 of the prerequisites.
  - Password: Enter any value. While the password isn’t used to connect to Snowflake, the credentials cannot be saved if the field is left empty.
- Use Add Driver property to add the authenticator:
  - Driver property name: authenticator
  - Driver property type: Value
  - Value: snowflake_jwt
- Use Add Driver property to add the private key file:
  - Driver property name: private_key_file
  - Driver property type: File
  - Encrypted: Select this option.
  - In Content, upload your private key.

|

Alternatively, you can set the authenticator as a parameter in the JDBC connection string when setting up the connection.

For example: |
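A minimal sketch of what such a connection string could look like, assuming the authenticator is passed as a URL parameter named authenticator with the value snowflake_jwt used in the driver property above. The account identifier and warehouse are placeholders, and `add_jdbc_param` is a hypothetical helper written only for this illustration.

```shell
#!/bin/sh
# Hypothetical helper: appends name=value to a JDBC URL, choosing
# the separator based on whether the URL already has parameters.
add_jdbc_param() {
  case "$1" in
    *\?)  sep='' ;;   # URL already ends with '?'
    *\?*) sep='&' ;;  # URL already has at least one parameter
    *)    sep='?' ;;  # URL has no query string yet
  esac
  printf '%s%s%s=%s\n' "$1" "$sep" "$2" "$3"
}

# Placeholder account identifier and warehouse; replace with your own.
base_url='jdbc:snowflake://myorg-myaccount.snowflakecomputing.com/?warehouse=COMPUTE_WH'
add_jdbc_param "$base_url" authenticator snowflake_jwt
```

With the authenticator set in the connection string, the separate authenticator driver property should not be needed, although the private key file still has to be provided.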

If you encounter the error Test connection has failed: Private key provided is invalid or not supported, please see Troubleshooting.

OAuth credentials

| If you are using OAuth 2.0 tokens, you also need to supply the Redirect URL to the data source you’re connecting to. This information is available when configuring the connection. |

Note that only OAuth flows where the programmatic client acts on behalf of a user are supported. If your programmatic client is configured to request an access token for itself, the connection will fail. For details, see the Configure Microsoft Entra ID for External OAuth article in the Snowflake official documentation, specifically steps 10 and 11.

- Select OAuth Credentials.
- Provide the following:
  - Name (Optional): A name for this set of credentials.
  - Description (Optional): A description for this set of credentials.
  - Select a secret management service (optional): If you want to use a secret management service to provide values for the following fields, specify which secret management service should be used. After you select the service, you can enable the Use secret management service toggle and instead provide the names under which the values are stored in your key vault. For more information, see Secret Management Service.
  - Redirect URL: This field is predefined and read-only. This URL is required to receive the refresh token and must be provided to the data source you’re integrating with.
  - Client ID: The OAuth 2.0 client ID.
  - Client secret: The client secret used to authenticate to the authorization server. Alternatively, enable Use secret management service and provide the name this value is stored under in your selected secret management service.
  - Authorization endpoint: The OAuth 2.0 authorization endpoint of the data source. It is required only if you need to generate a new refresh token.
  - Token endpoint: The OAuth 2.0 token endpoint of the data source. Used to get an access token or a refresh token.
  - Refresh token: The OAuth 2.0 refresh token. Allows the application to authenticate after the access token has expired without having to prompt the user for credentials.

    Select Generate to create a new token. Once you do this, the expiration date of the refresh token is updated in Refresh token valid till.
- If you want to use this set of credentials by default when connecting to the data source, select Set as default.

  See also Connection credentials.
- Proceed with Test the connection.

OAuth user SSO credentials

Authenticating through an external identity provider SSO involves users logging into ONE through an external OIDC identity provider (for example, Okta) and using their identity when accessing a data source.

| As a prerequisite, you need to set up the integration with the external identity provider. See Okta OIDC Integration with Impersonation. |

- Provide the following:
  - Name (Optional): A name for this set of credentials.
  - Description (Optional): A description for this set of credentials.
- If you want to use this set of credentials by default when connecting to the data source, select Set as default.

  See also Connection credentials.
- Proceed with Test the connection.

Azure AD Service Principal via driver properties

Snowflake on Azure can be integrated with Azure AD for authentication.

- Select Integrated credentials and provide the following:
  - Name (Optional): A name for this set of credentials.
  - Description (Optional): A description for this set of credentials.
- If you want to use this set of credentials by default when connecting to the data source, select Set as default.

  See also Connection credentials.
- Use Add Driver property to add the following driver properties:

  | Driver property name | Driver property type | Encrypted | Value | Content |
  | --- | --- | --- | --- | --- |
  | authenticator | Value | False | OAUTH | n/a |
  | token | Value | False | SECRET:your-secret-name | n/a |
  | ata.jdbc.aad.authType | Value | False | AAD_CLIENT_CREDENTIAL | n/a |
  | ata.jdbc.aad.tenantUrl | Value | False | login.microsoftonline.com/<your-tenant-id>/oauth2/v2.0/token | n/a |
  | ata.jdbc.aad.tenantId | Value | False | <tenant ID of your subscription (UUID format)> | n/a |
  | ata.jdbc.aad.clientId | Value | False | <service principal client ID (UUID format)> | n/a |
  | ata.jdbc.aad.clientSecret | Value | True | <service principal client secret> | n/a |
  | ata.jdbc.aad.resource | Value | False | <resource ID> | n/a |

  ata.jdbc.aad.resource is the Resource ID of Snowflake in Azure and takes the following form (GUID): ab12c456-789d-01ef-gg22-3h44i5jkl67m
- Proceed with Test the connection.
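Before entering the Azure AD driver properties listed above, it can help to check that the IDs have the expected shape. The sketch below assumes that a tenant or client ID is a standard 8-4-4-4-12 hexadecimal UUID. The helper names `is_uuid` and `aad_tenant_url` are hypothetical, and the tenant ID is made up.

```shell
#!/bin/sh
# Returns success when the argument looks like a UUID (8-4-4-4-12 hex digits).
is_uuid() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}-([0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12}$'
}

# Prints the ata.jdbc.aad.tenantUrl value for a tenant ID,
# in the form listed in the driver properties above.
aad_tenant_url() {
  printf 'login.microsoftonline.com/%s/oauth2/v2.0/token\n' "$1"
}

# Made-up tenant ID used only for illustration.
tenant_id="123e4567-e89b-12d3-a456-426614174000"
if is_uuid "$tenant_id"; then
  aad_tenant_url "$tenant_id"
else
  echo "tenant ID is not in UUID format" >&2
fi
```

This only checks formatting. Whether the IDs and the secret are valid is confirmed when you test the connection.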

Test the connection

To test and verify whether the data source connection has been correctly configured, select Test Connection.

If the connection is successful, continue with the following step. Otherwise, verify that your configuration is correct and that the data source is running.

Save and publish

Once you have configured your connection, save and publish your changes. If you provided all the required information, the connection is now available for other users in the application.

In case your configuration is missing required fields, you can view a list of detected errors instead. Review your configuration and resolve the issues before continuing.

Next steps

You can now browse and profile assets from your connection.

In Data Catalog > Sources, find and open the source you just configured. Switch to the Connections tab and select Document. Alternatively, opt for the Import or Discover documentation flow.

Or, to import or profile only some assets, select Browse on the Connections tab. Choose the assets you want to analyze and then the appropriate profiling option.

Troubleshooting

Test connection has failed: Private key provided is invalid or not supported

If you see this error, the key might have been generated using OpenSSL v3. A possible solution is to enable the following property for the Snowflake JDBC driver in DPE.

Add the following parameter to the DPE start script /etc/systemd/system/dpe.service.d/dpe.conf:

Environment="JAVA_OPTS=[...] -Dnet.snowflake.jdbc.enableBouncyCastle=true"

Error when browsing Snowflake data source

If an error is being thrown, or the app is unresponsive when you try to browse your Snowflake data source, the Snowflake warehouse might be overloaded.

By default, Ataccama uses the Snowflake warehouse specified in the JDBC connection string for both browsing and processing. A single Snowflake warehouse can run up to eight concurrent SQL queries. If you run multiple Snowflake processing jobs in parallel, DPE might overload the warehouse with concurrent queries. If this happens, ONE can’t run interactive queries, such as data source browsing, data preview, or metadata import, until the processing jobs finish.

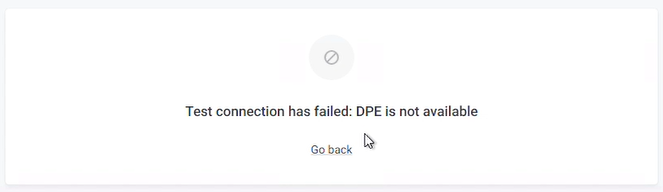

Several different error messages can be shown in this case. Two examples are:

- Test connection has failed: DPE is not available.
- DEADLINE_EXCEEDED.

To overcome this limitation, you can create a separate Snowflake warehouse of XS size and specify it in the driver properties. This warehouse can then be used for the interactive capabilities, while the job processing will be done by the warehouse specified in the connection string.

To do this, add the property browsingWarehouseOverride to your Snowflake connection, as follows:

- In Sources, select your Snowflake data source.
- Navigate to the Connections tab.
- On the connection for which you want to add the property, use the three dots menu and select Show details.
- You are now on the Overview tab for that connection. In the Driver properties section, select Add Driver Property and add the following details:
  - Property name: browsingWarehouseOverride.
  - Driver property type: Value.
  - Value: The name of your XS Snowflake warehouse.

    This warehouse needs to be created beforehand in Snowflake.
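Because the browsing warehouse has to exist before you reference it, here is a minimal sketch that prints a statement for creating one. The warehouse name BROWSE_WH and the helper `browsing_warehouse_sql` are hypothetical. The AUTO_SUSPEND and AUTO_RESUME settings are assumptions added to keep an idle warehouse from consuming credits, not something this page prescribes.

```shell
#!/bin/sh
# Hypothetical helper: prints a CREATE WAREHOUSE statement for an
# XS warehouse with the given name (suspend and resume settings are assumed).
browsing_warehouse_sql() {
  printf "CREATE WAREHOUSE IF NOT EXISTS %s WITH WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND = 60 AUTO_RESUME = TRUE;\n" "$1"
}

# BROWSE_WH is a placeholder; use the same name in browsingWarehouseOverride.
browsing_warehouse_sql "BROWSE_WH"
```

Run the printed statement in a Snowflake worksheet, and make sure the user configured for the connection is allowed to use the new warehouse.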
