Power BI Scanner Supported Features and Limitations

Overview of supported features

The following table shows the high-level features supported by the scanner:

| Item | Notes |

|---|---|

Dashboard |

|

Dataflows |

Dataflows within dataflows are not supported. Dataflows Gen2 are not supported. |

Dataset Table |

|

External Source |

See Supported Power Query functions, type Source. |

External Source Columns |

Only available for supported external sources. |

Power Query Expressions |

|

Report |

|

Report Calculated Column |

|

Report Column |

|

Report Measure |

|

Semantic Model (Dataset) |

|

Workspace |

Supported Power Query functions

The scanner recognizes the following Power Query functions when creating lineage information:

| Power Query function | Category | Power Query function | Category |

|---|---|---|---|

AmazonRedshift.Database |

Source |

Table.FillDown |

Table function |

AzureDataExplorer.Contents |

Source |

Table.FillUp |

Table function |

Csv.Document |

Source |

Table.FirstN |

Table function |

Databricks.Catalogs |

Source |

Table.FromRows |

Table function |

Excel.Workbook |

Source |

Table.Group |

Table function |

GoogleBigQuery.Database |

Source |

Table.GroupColumns |

Table function |

Json.Document |

Source |

Table.NestedJoin |

Table function |

OData.Feed |

Source |

Table.PivotColumns |

Table function |

Oracle.Database |

Source |

Table.PromoteHeaders |

Table function |

PowerPlatform.Dataflows |

Source |

Table.RemoveColumns |

Table function |

Snowflake.Databases |

Source |

Table.RemoveLastN |

Table function |

Sql.Database |

Source |

Table.RemoveRowsWithErrors |

Table function |

Sql.Databases |

Source |

Table.RenameColumns |

Table function |

Teradata.Database |

Source |

Table.ReorderColumns |

Table function |

Value.NativeQuery |

Source |

Table.ReplaceErrorValues |

Table function |

Table.AddColumn |

Table function |

Table.ReplaceValue |

Table function |

Table.AddIndexColumn |

Table function |

Table.SelectColumns |

Table function |

Table.Buffer |

Table function |

Table.SelectRows |

Table function |

Table.Combine |

Table function |

Table.Skip |

Table function |

Table.Distinct |

Table function |

Table.Sort |

Table function |

Table.DuplicateColumn |

Table function |

Table.TransformColumns |

Table function |

Table.ExpandColumn |

Table function |

Table.TransformColumnTypes |

Table function |

Table.ExpandListColumn |

Table function |

Table.UnpivotColumns |

Table function |

Supported Power Query patterns

The scanner generates lineage between dataset tables and their sources only when Power Queries use specific syntax patterns. These patterns vary by source technology and query construction.

Supported syntax by source technology

Microsoft SQL Server

Option 1: Using Sql.Database with direct table reference:

let

// Connect to SQL

Source = Sql.Database("ataccama.windows.com", "core"),

// Select the table

TableData = Source{[Schema="landing",Item="customer"]}[Data]

in

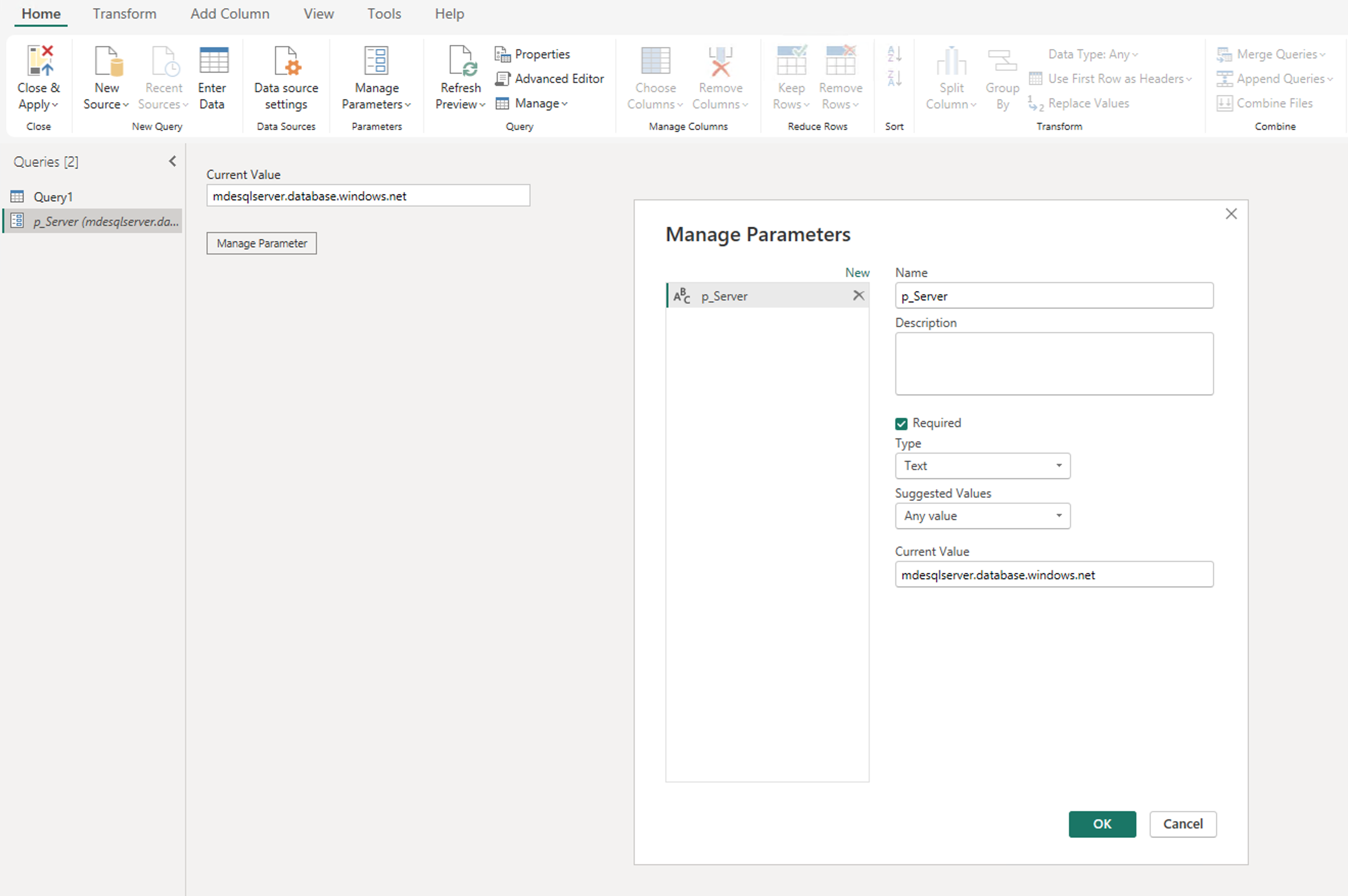

TableDataExample using parameters:

let

// Connect to SQL

Source = Sql.Database(p_Server, p_DB),

// Select the table

TableData = Source{[Schema="landing",Item="customer"]}[Data]

in

TableDataOption 2: Filtering for a specific schema and table directly after specifying the database:

let

TableData = Sql.Database("ataccama.windows.com", "core"){[Schema="landing",

Item="customer"]}[Data]

in

TableDataOption 3: Using Sql.Database with a custom query:

let

Source = Sql.Database(p_Server, "MDETestLineageDB", [Query="SELECT AddressID, AddressLine1, AddressLine2, City, StateProvince, CountryRegion, PostalCode, rowguid, ModifiedDate#(lf)FROM MDETestLineageDB.SalesLT.Address;"])

in

SourceOption 4: Using NativeQuery:

let

QueryResult =

Value.NativeQuery(

Sql.Database("mdesqlserver.database.windows.net", "MDETestLineageDB"),

"SELECT AddressID, AddressLine1, AddressLine2, City, StateProvince, CountryRegion, PostalCode, rowguid, ModifiedDate#(lf)FROM MDETestLineageDB.SalesLT.Address"

)

in

QueryResultAlternative syntax for native queries:

let

DBs = Sql.Databases(p_Server),

SelectedDB = DBs{[Name = "MDETestLineageDB"]}[Data],

QueryResult = Value.NativeQuery(SelectedDB, "SELECT * FROM SalesLT.Address")

in

QueryResultSnowflake

Option 1: Using Snowflake.Database with direct table reference:

let

TableData =

Snowflake.Database("myaccount.snowflakecomputing.com", "db1")

{[Schema = "stage", Item = "customer"]}[Data]

in

TableDataOption 2: Using NativeQuery:

let

Result =

Value.NativeQuery(

Snowflake.Databases("myaccount.snowflakecomputing.com"){[Name = "db1"]}[Data],

"SELECT cust_name

FROM stage.customer

WHERE cust_is is not null"

)

in

ResultOption 3: Multi-step filtering using Snowflake.Database:

let

// Connect to Snowflake

Source = Snowflake.Databases(

"mycompany.region.azure.snowflakecomputing.com",

"WAREHOUSE_NAME",

[Role = "ROLE_NAME"]

),

// Navigate to the target database, schema, and view

TargetDatabase = Source{[Name = "MY_DATABASE", Kind = "Database"]}[Data],

TargetSchema = TargetDatabase{[Name = "MY_SCHEMA", Kind = "Schema"]}[Data],

TargetView = TargetSchema{[Name = "MY_VIEW", Kind = "View"]}[Data],

// Example filter

FilteredRows = Table.SelectRows(TargetView, each true),

in

FilteredRowsOracle

Option 1: Using Oracle.Database with a TNS name (Oracle_Demo in this example):

let

Source = Oracle.Database("Oracle_Demo", [HierarchicalNavigation=true]),

contentwriter = Source{[Schema="contentwriter"]}[Data],

vw_customer_details = contentwriter{[Name="vw_customer_details"]}[Data]

in

vw_customer_detailOption 2: Using Oracle.Database with a full TNS entry:

let

Source = Oracle.Database("(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=oracle.ataccama.com)(PORT=1521))(CONNECT_DATA=(SERVER=DEDICATED)(SERVICE_NAME=dev)))", [HierarchicalNavigation=true]),

contentwriter = Source{[Schema="contentwriter"]}[Data],

vw_customer_details = contentwriter{[Name="vw_customer_details"]}[Data]

in

vw_customer_detailDatabricks

Option 1: Using NativeQuery:

let

Result =

Value.NativeQuery(

Databricks.Databases("adb-1234567890123456.7.azuredatabricks.net", "https"),

"SELECT cust_name

FROM stage.customer

WHERE cust_id IS NOT NULL"

)

in

ResultDataflows

Option 1: Using PowerPlatform.Dataflows:

let

Source = PowerPlatform.Dataflows(null),

Workspaces = Source{[Id="Workspaces"]}[Data],

#"1de33d3b-2846-4634-9ab8-f83513213a63" = Workspaces{[workspaceId="1de33d3b-2846-4634-9ab8-f83513213a63"]}[Data],

#"55e33c65-0f97-49d8-be41-3e3f8d343291" = #"1de33d3b-2846-4634-9ab8-f83513213a63"{[dataflowId="55e33c65-0f97-49d8-be41-3e3f8d343291"]}[Data],

SapXLS_ = #"55e33c65-0f97-49d8-be41-3e3f8d343291"{[entity="SapXLS",version=""]}[Data]

in

SapXLS_Excel Workbooks

Option 1: Using Excel.Workbook:

let

Source = Excel.Workbook(Web.Contents("https://azureataccama-my.sharepoint.com/personal/com/Documents/Apps/Microsoft Power Query/Uploaded Files/sap_hana_db_lineage_questionnaire.xlsx"), null, true),

#"Navigation 1" = Source{[Item = "Sheet1", Kind = "Sheet"]}[Data],

#"Changed column type" = Table.TransformColumnTypes(#"Navigation 1", {{"Column1", type text}, {"Column2", type text}, {"Column3", type text}, {"Column4", type text}})

in

#"Changed column type"CSV Files

Option 1: Using Csv.Document with Web.Contents:

let

Source = Csv.Document(Web.Contents("https://azureataccama-my.sharepoint.com/personal/com/Documents/Apps/Microsoft Power Query/Uploaded Files/flows.csv"), [Delimiter = ",", Columns = 6, QuoteStyle = QuoteStyle.None]),

#"Changed column type" = Table.TransformColumnTypes(Source, {{"Column1", type text}, {"Column2", type text}, {"Column3", type text}, {"Column4", type text}, {"Column5", type text}, {"Column6", type text}}),

#"Renamed columns" = Table.RenameColumns(#"Changed column type", {{"Column5", "id_join"}})

in

#"Renamed columns"Option 2: Using Csv.Document with File.Contents:

let

Source = Csv.Document(File.Contents("c:\personal\com\flows.csv"), [Delimiter = ",", Columns = 6, QuoteStyle = QuoteStyle.None]),

#"Changed column type" = Table.TransformColumnTypes(Source, {{"Column1", type text}, {"Column2", type text}, {"Column3", type text}, {"Column4", type text}, {"Column5", type text}, {"Column6", type text}}),

#"Renamed columns" = Table.RenameColumns(#"Changed column type", {{"Column5", "id_join"}})

in

#"Renamed columns"Joining sources

Table.NestedJoin

Option 1: Joining standard sources:

let

Source = Csv.Document(File.Contents("C:\Users\frantisek.budias\Documents\file1.csv"),[Delimiter=",", Columns=2, Encoding=1252, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(Source, [PromoteAllScalars=true]),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"mycol1", Int64.Type}, {" ""mycol2""", Int64.Type}}),

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"mycol1"}, file2, {"mycol3"}, "file2", JoinKind.LeftOuter),

#"Merged Queries1" = Table.NestedJoin(#"Merged Queries", {"mycol1"}, file3, {"mycol4"}, "file3", JoinKind.LeftOuter)

in

#"Merged Queries1"Option 2: Joining sources from a Dataflow:

let

Source = PowerPlatform.Dataflows(null),

Workspaces = Source{[Id="Workspaces"]}[Data],

#"1de33d3b-2846-4634-9ab8-f83513213a63" = Workspaces{[workspaceId="1de33d3b-2846-4634-9ab8-f83513213a63"]}[Data],

#"55e33c65-0f97-49d8-be41-3e3f8d343291" = #"1de33d3b-2846-4634-9ab8-f83513213a63"{[dataflowId="55e33c65-0f97-49d8-be41-3e3f8d343291"]}[Data],

assets_ = #"55e33c65-0f97-49d8-be41-3e3f8d343291"{[entity="assets",version=""]}[Data],

#"Merged Queries" = Table.NestedJoin(assets_, {"Column1"}, flows, {"id_join"}, "flows", JoinKind.LeftOuter),

#"Expanded flows" = Table.ExpandTableColumn(#"Merged Queries", "flows", {"Column1", "Column2", "Column3", "Column4", "id_join", "Column6"}, {"flows.Column1", "flows.Column2", "flows.Column3", "flows.Column4", "flows.id_join", "flows.Column6"})

in

#"Expanded flows"Known limitations

Variables declared within Power Query

Variables declared and referenced within the same Power Query are not supported:

let

MyQuery = "SELECT AddressID, AddressLine1, AddressLine2, City, StateProvince, CountryRegion, PostalCode, rowguid, ModifiedDate#(lf)FROM SalesLT.Address;",

Source = Sql.Database(p_Server, db, [Query=MyQuery])

in

SourceParameter concatenation in queries

Parameters combined with other parameters or strings (.." & p_Schema & "..) are not supported by default:

let

Source = Sql.Database(p_Server, "MDETestLineageDB", [Query="SELECT AddressID, AddressLine1, AddressLine2, City, StateProvince, CountryRegion, PostalCode, rowguid, ModifiedDate#(lf)FROM " & p_Schema & ".Address;"])

in

SourceAs a workaround, configure the substituteExpressions parameter in your scan plan JSON file to resolve concatenated expressions:

"substituteExpressions": [

{

"toExpression": "SalesLT",

"fromExpression": "\" & p_Schema & \""

}

]Cross-references within the same dataset

Dataset tables that reference other tables within the same dataset are not supported:

let

Source = Sales

in

SourceAs a workaround, create a new dataset table named "Sales" that duplicates the Power Query from the "Source" table.

Loop-based filtering for server, database, or schema

Using loops to identify servers, databases, or schemas is not supported. Note that using loops to filter data does not affect lineage.

let

Source = Sql.Database(p_Server, "MDETestLineageDB"),

SchemaFiltered = Table.SelectRows(Source, each [Schema] = "SalesLT")

in

SchemaFilteredMicrosoft Power BI limitations

To harvest lineage from a Power BI report, both of the following conditions must be met:

-

The report must be downloadable.

-

The semantic model used by the report must also be downloadable.

Microsoft imposes certain restrictions that can prevent downloading. For details, refer to the official Microsoft documentation:

API rate limits

Power BI allows 500 API requests per hour. For more information, see Admin - WorkspaceInfo GetScanResult.

Was this page useful?