Explore Your Data

Data discovery helps you quickly find, explore, and understand the data available across your organization.

It enables you to confidently identify the right datasets, assess their relevance and quality, make informed data-driven decisions, and ensure regulatory compliance.

The Agentic Data Trust platform offers a comprehensive set of features to support data exploration, including search and filtering capabilities, data quality insights, glossary, data lineage visualization, and data profiling.

If your catalog is already populated and enriched through actions such as profiling, metadata extraction, and business term detection, you can go directly to "How to search for the data you need" and start working with your data right away.

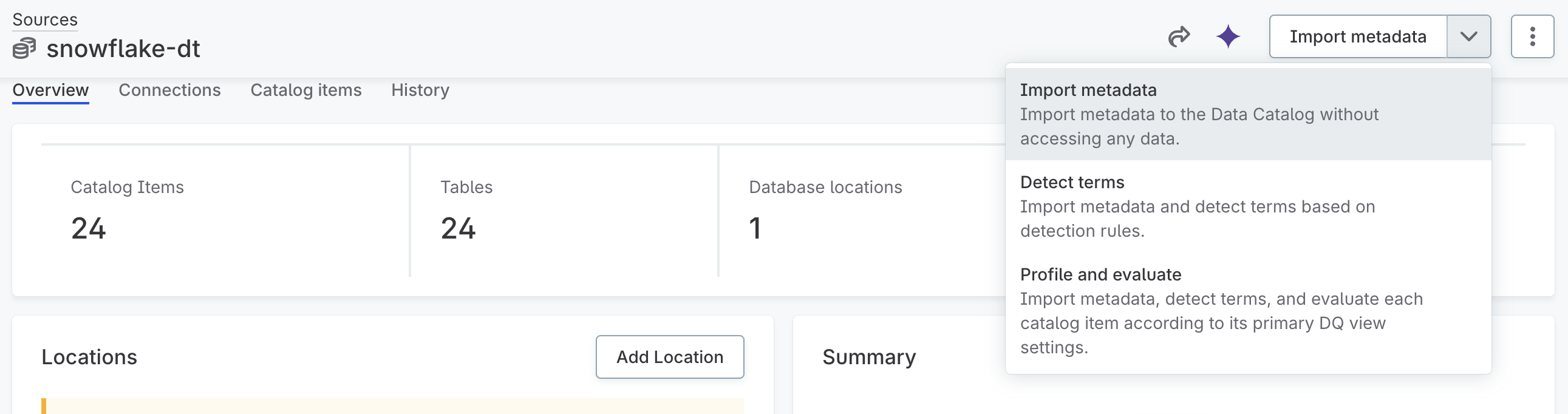

Discovery actions in Catalog

Once you connect to a data source, there are three default actions you can run to populate your data catalog, classify your data, and evaluate it. These actions are available at multiple levels:

- Source level: All catalog items in a data source.
- Connection level: All or selected items in a connection, such as schemas.
- Catalog item level: Individual items, once they’ve been imported.

Import metadata

This action imports catalog items from a source and analyzes their metadata without directly accessing the data. It is the fastest discovery option.

Detect terms

Note: Detection rules must be configured before you can run this action in Catalog.

In addition to importing metadata, this action runs term detection to automatically link glossary terms to catalog items and their attributes. This results in better metadata classification, improved discoverability, and stronger data governance.

Profile and evaluate

Note: Profiling and DQ evaluation use the configuration in your DQ view. This configuration defines which rules apply and how and when the processing runs. You can change it in the DQ view.

This is the most comprehensive discovery action available. It imports metadata, runs term detection, and initiates DQ evaluation based on each catalog item’s primary DQ view settings, providing the most complete information about the data source.
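The three actions are cumulative: each one includes the steps of the one before it. The short Python sketch below models only that relationship. The step labels and the helper names (`steps_for`, `cheapest_action_covering`) are illustrative assumptions, not part of the platform or any API it exposes.

```python
# Illustrative model only: action names follow the text above,
# step labels and helpers are assumptions made for this sketch.
DISCOVERY_ACTIONS = {
    "Import metadata": ["import metadata"],
    "Detect terms": ["import metadata", "term detection"],
    "Profile and evaluate": [
        "import metadata",
        "term detection",
        "profiling and DQ evaluation",
    ],
}


def steps_for(action: str) -> list[str]:
    """Return the processing steps a discovery action performs, in order."""
    return DISCOVERY_ACTIONS[action]


def cheapest_action_covering(required_step: str) -> str:
    """Pick the action with the fewest steps that still includes required_step."""
    candidates = [
        action for action, steps in DISCOVERY_ACTIONS.items()
        if required_step in steps
    ]
    return min(candidates, key=lambda action: len(DISCOVERY_ACTIONS[action]))


print(steps_for("Detect terms"))
print(cheapest_action_covering("term detection"))
```

Read this way, choosing an action is a trade-off between speed and completeness: pick the lightest action that still includes the step you need.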

Note: You can see the progress and details of the jobs in the Processing center, accessible from the main navigation.

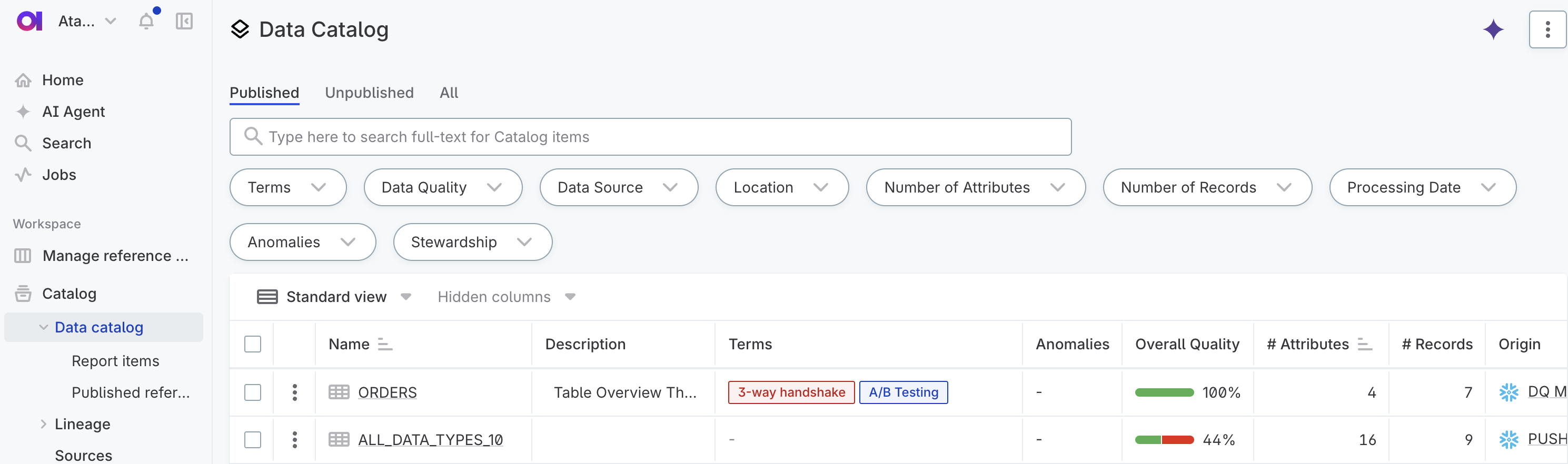

How to search for the data you need

Finding the right data for your analysis, reporting, or business needs is crucial for making informed decisions. There are several ways to discover data in the platform, depending on your use case:

- Looking for a specific asset: See targeted search.
- Exploring broadly: See exploratory search.
- Validating data: See validation.

Search options

There are two ways to search in the platform:

- Search across all datasets: Accessible from the main navigation, the homepage search bar, or via keyboard shortcuts:
  - Mac: ⌘+K
  - Windows/Linux: Ctrl+K
- Search within a specific entity: Available directly on each listing, for example, catalog items, detection rules, or terms.

Targeted search: When you know what you’re looking for

Use full-text search when you know the name of a catalog item, attribute, term, or another asset.


How to search

- Use the search bar with specific keywords (for example, "Customer", "Sales").
- Try variations of keywords.
- Apply filters to narrow down results (for example, by data source, stewardship, or data quality).

Example

You’re a financial analyst looking for the catalog item "Customer_transactions_Q2" which you previously used in a monthly revenue dashboard.

- Go to Data catalog, accessible from the main navigation.
- Use the search bar at the top.
- Enter the exact catalog item name "Customer_transactions_Q2".
- Alternatively, search by known attribute name or business term (for example, "Transaction_amount").
- Apply filters to narrow down by data source, stewardship, or processing date.
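To make the idea of keyword search combined with filters concrete, here is a minimal Python sketch over an in-memory list. It is not how the platform implements search. The `CatalogItem` fields, the `search` helper, and all sample values are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class CatalogItem:
    # Hypothetical fields chosen for this illustration.
    name: str
    attributes: list[str]
    data_source: str
    steward: str


def search(items, keyword, **filters):
    """Case-insensitive match on item or attribute names, then exact-match filters."""
    needle = keyword.lower()
    hits = [
        item for item in items
        if needle in item.name.lower()
        or any(needle in attr.lower() for attr in item.attributes)
    ]
    # Each filter narrows the keyword hits further (for example, by data source).
    for field_name, wanted in filters.items():
        hits = [item for item in hits if getattr(item, field_name) == wanted]
    return hits


# Made-up sample catalog.
catalog = [
    CatalogItem("Customer_transactions_Q2", ["Transaction_amount", "Customer_id"], "finance_dwh", "A. Analyst"),
    CatalogItem("Customer_transactions_Q1", ["Transaction_amount", "Customer_id"], "finance_dwh", "A. Analyst"),
    CatalogItem("Web_sessions", ["Session_id"], "clickstream", "B. Steward"),
]

by_name = search(catalog, "Customer_transactions_Q2")
by_attribute = search(catalog, "transaction_amount", data_source="finance_dwh")
print([item.name for item in by_name])
print([item.name for item in by_attribute])
```

The exact name returns a single item, while the attribute search returns every item that carries that attribute. That is why filters matter more when you search by attribute or business term.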

Exploratory search: When you’re not sure what you’re looking for

If you don’t have exact names or sources, guided methods can be more effective than keyword search alone. Use this approach when you need to discover data based on business concepts, compliance requirements, or domain expertise.


How to search

Option 1: Business terms and glossary

- Navigate to Glossary.
- Search for business concepts (for example, "Personal data", "Revenue").
- From each term, open the Occurrence tab to find related datasets.
- Apply filters to narrow down results.

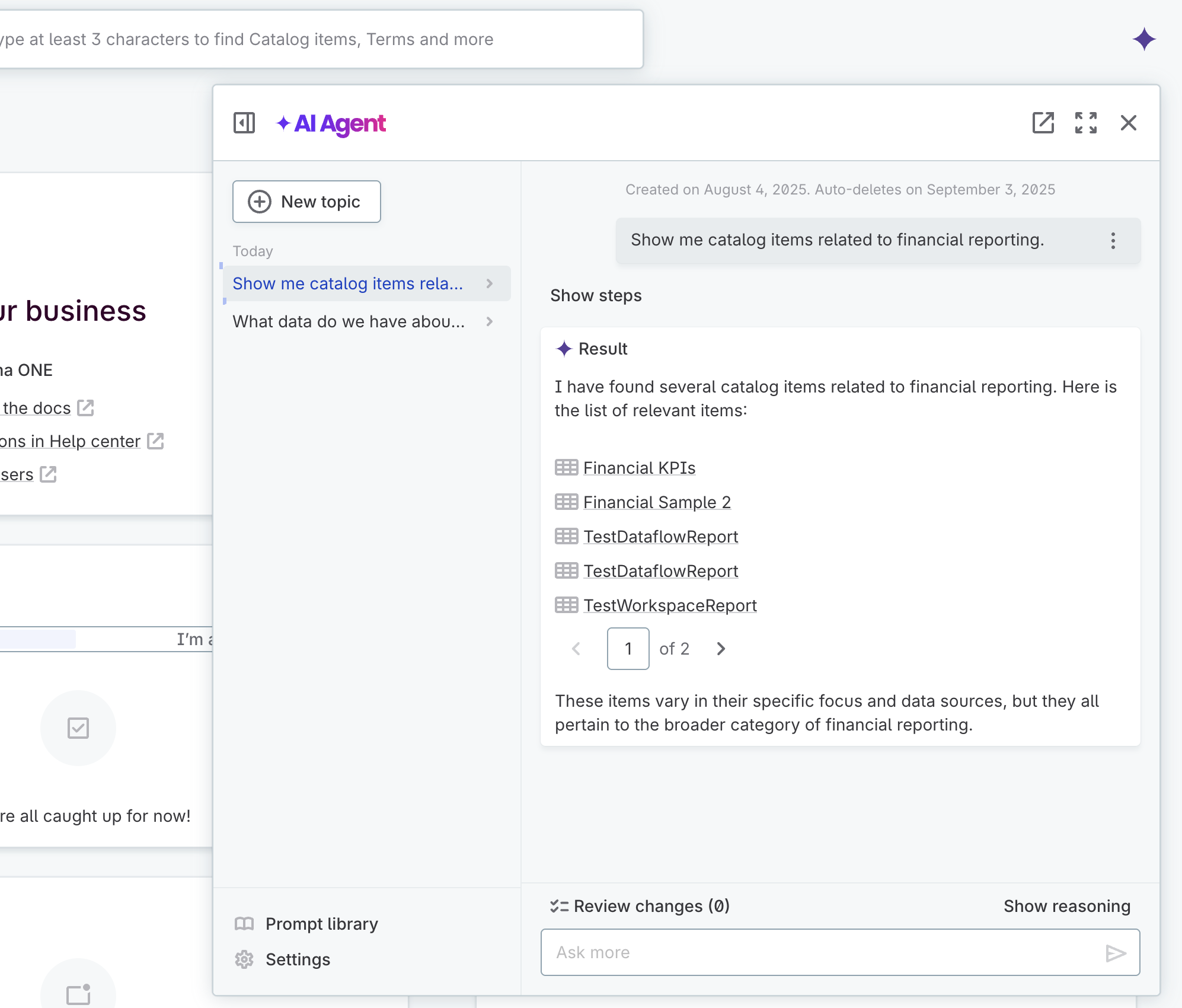

Option 2: AI Agent assistance

- Open AI Agent.
- Use natural language:
  - "Find data about customer purchasing behavior."
  - "Show me catalog items related to financial reporting."
- Review suggestions and ask follow-up questions.

Example

You’re part of a compliance team tasked with locating all data related to customer addresses for GDPR audit readiness, but you don’t know the exact dataset names or where they are.

Option 1: Use the glossary

- Open Glossary.
- Search for the business term "Customer address".
- View associated catalog items linked to that term.
- Browse related terms like "Postal code", "Billing address", "PII data".

Option 2: Use the AI Agent

- Open AI Agent.
- Ask: "Show me catalog items that contain customer address information."
- Review the results and open the relevant items.

Validation: Make sure it’s the right data

Once you find the data you were looking for, you can validate that it meets your needs in several ways before using it.


How to validate

- Inspect the data: Check the preview and read asset descriptions.
- Assess data quality: Review data quality metrics and DQ rules.
- Understand the context: Check lineage and view data usage.
- Ask the data owner or data steward.
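When you review DQ rules and metrics, it helps to remember what a rule result usually represents: the share of records that pass a check. The Python sketch below illustrates that idea with three hypothetical rules. The rule definitions, the age bounds, the tiny country list, and the sample records are all assumptions made for this example, not the platform's rule engine or its scoring formula.

```python
import re

# Hypothetical rules for illustration; each returns True when the value passes.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
ISO_COUNTRIES = {"US", "DE", "CZ", "FR"}  # deliberately tiny subset of ISO 3166-1 alpha-2

RULES = {
    "email": lambda v: isinstance(v, str) and EMAIL_PATTERN.match(v) is not None,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,  # assumed bounds
    "country": lambda v: v in ISO_COUNTRIES,
}


def pass_rates(records):
    """Return the share of records passing each rule, keyed by attribute."""
    rates = {}
    for attribute, rule in RULES.items():
        passed = sum(1 for record in records if rule(record.get(attribute)))
        rates[attribute] = passed / len(records)
    return rates


# Made-up sample records.
sample = [
    {"email": "ann@example.com", "age": 34, "country": "US"},
    {"email": "not-an-email", "age": 29, "country": "DE"},
    {"email": "bob@example.com", "age": 150, "country": "Germany"},
    {"email": None, "age": 41, "country": "CZ"},
]

rates = pass_rates(sample)
print(rates)
```

A low pass rate on an attribute you depend on is a signal to talk to the data owner or steward before using the dataset.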

Example

You found a dataset named "Client_profile_data" and want to confirm it’s accurate, up to date, and appropriate for your analysis.

- Preview the data:
  - Select the dataset from Catalog.
  - View sample records and attribute-level statistics.
- Check the profiling results:
  - Review null values, uniqueness, and format distributions.
  - Confirm that key attributes are populated and consistent.
- Assess the data quality:
  - Look at the data quality score.
  - Review the DQ rule results, for example, "Email format valid?", "Age within bounds?", "Country is ISO standard?".
- Trace the data lineage and check:
  - Where the data originated (for example, Salesforce or CRM).
  - Whether it’s transformed through ETL jobs.
  - Who else is consuming it (dashboards, APIs).
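The profiling figures mentioned in the example (null values, uniqueness, and format distributions) can be illustrated for a single attribute. This Python sketch is a rough approximation, not the platform's profiler. The `profile` and `mask_format` helpers, the choice to treat empty strings as nulls, and the sample values are all assumptions.

```python
import re
from collections import Counter


def mask_format(value: str) -> str:
    """Reduce a value to its shape: digits become 9, letters become A."""
    masked = re.sub(r"[0-9]", "9", value)
    return re.sub(r"[A-Za-z]", "A", masked)


def profile(values):
    """Summarize one attribute: null count, uniqueness ratio, format distribution."""
    # Assumption: None and empty strings both count as nulls.
    non_null = [v for v in values if v is not None and v != ""]
    return {
        "null_count": len(values) - len(non_null),
        "uniqueness": len(set(non_null)) / len(non_null) if non_null else 0.0,
        "formats": Counter(mask_format(v) for v in non_null),
    }


# Made-up sample values for a postal code attribute.
postal_codes = ["90210", "10001", None, "SW1A 1AA", "10001", ""]
result = profile(postal_codes)
print(result)
```

In this sample, one value has a different shape from the rest. That kind of outlier is what a format distribution is meant to surface when you confirm that key attributes are consistent.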
