Databricks Unity Catalog Connection

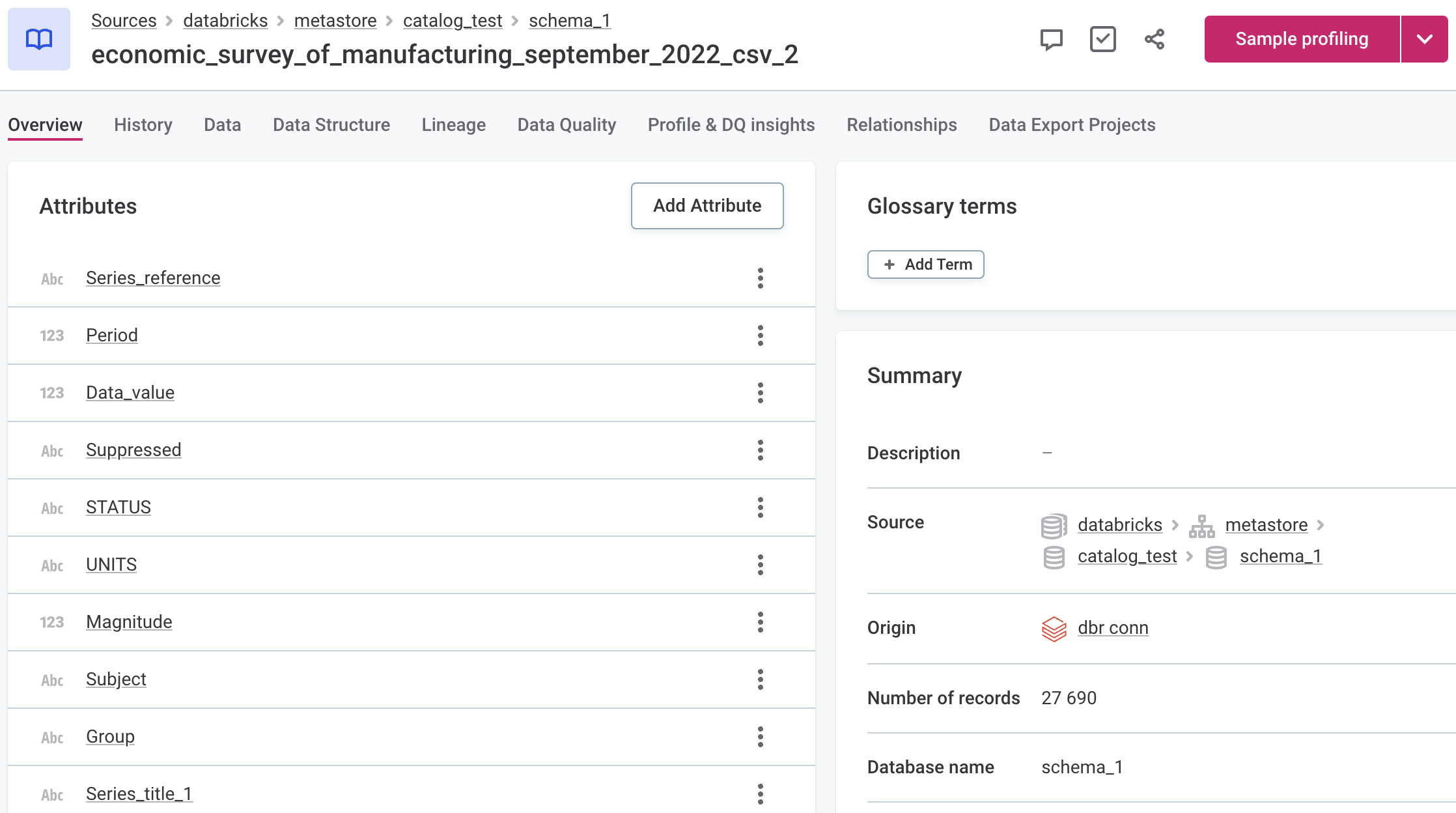

Databricks Unity Catalog provides a unified approach to governing your data and AI assets across Databricks workspaces, along with centralized access control, auditing, lineage, and data discovery capabilities. In Unity Catalog, data assets are organized in three levels, starting from the highest: Catalog > Schema > Table, allowing you to work with multiple catalogs at once.
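As an illustration of the three-level hierarchy, the following minimal sketch splits a fully qualified table name into its catalog, schema, and table parts. The helper name `parse_table_ref` and the sample name `sales_prod.crm.customers` are hypothetical, and the sketch assumes plain dot-separated identifiers (it does not handle backtick-quoted names that contain dots).

```python
from typing import NamedTuple


class TableRef(NamedTuple):
    """One table addressed through the three levels: catalog, schema, table."""
    catalog: str
    schema: str
    table: str


def parse_table_ref(full_name: str) -> TableRef:
    """Split 'catalog.schema.table' into its three levels.

    Assumption: identifiers are plain and separated by dots.
    """
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.table, got {full_name!r}")
    return TableRef(*parts)


# Hypothetical example name, used only for illustration.
ref = parse_table_ref("sales_prod.crm.customers")
print(ref.catalog, ref.schema, ref.table)
```

A name with fewer or more than three parts raises `ValueError`, which makes it easy to spot references that omit the catalog level.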


You can see the three catalog levels in the Source field when viewing catalog item details. Use this in the Location filter to narrow down the catalog items you’re looking for.

Currently, Databricks Unity Catalog is only supported for new data sources and cannot be enabled on existing Databricks connections. In addition, we only support Unity Catalog with Dedicated access mode enabled, not with Standard. For more information about what this means for your Databricks configuration, see the article Create clusters & SQL warehouses with Unity Catalog access in the official Databricks documentation.

Because your Databricks cluster can shut down when idle, it can take some time before it is ready to accept requests again. If you try to browse the cluster during this period, you receive a timeout error.
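If you script requests against a cluster that may still be starting, one common way to cope with this startup window is to retry with an increasing delay. The following is a minimal, generic sketch rather than a feature of ONE: the function `call_with_retry`, the stand-in `browse_cluster`, and the use of Python's built-in `TimeoutError` to represent the timeout are all assumptions made for illustration.

```python
import time


def call_with_retry(action, attempts=5, initial_delay=1.0, factor=2.0, sleep=time.sleep):
    """Call action(); on TimeoutError, wait and retry with a growing delay.

    The last TimeoutError is re-raised once all attempts are used up.
    """
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except TimeoutError:
            if attempt == attempts:
                raise
            sleep(delay)
            delay *= factor


# Demo with a hypothetical stand-in for a browse request that times out
# twice while the cluster is still starting.
state = {"calls": 0}


def browse_cluster():
    state["calls"] += 1
    if state["calls"] < 3:
        raise TimeoutError("cluster is still starting")
    return ["catalog_a", "catalog_b"]


waits = []  # record the delays instead of actually sleeping
result = call_with_retry(browse_cluster, sleep=waits.append)
print(result, waits)
```

Passing `sleep=waits.append` keeps the demo instant; in real use, leave the default so the function actually waits between attempts.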

Prerequisites

Configure a new Unity cluster in Data Processing Engine (DPE).

To do this, add the following set of properties either to dpe/etc/application.properties or in Configuration > DPE Configurations.

Take note of the following:

- The property plugin.metastoredatasource.ataccama.one.cluster.databricks.unity-catalog-enabled must be set to true.

- With Unity clusters, only single user (Dedicated) access mode is supported, so authentication is only possible using an access token. Service principals are therefore not supported.

- You can mark some catalogs as technical and prevent them from being imported to ONE. To do this, use the property plugin.metastoredatasource.ataccama.one.cluster.databricks.catalog-exclude-pattern. The value should be a regular expression matching the catalogs that you don't want to import.
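Before deploying an exclude pattern, it can help to check which catalog names it would match. The sketch below tries the example value from the property listing on this page, ^(SAMPLES)|(samples)|(main)$, against a few hypothetical catalog names. Two assumptions apply: Python's `re` module stands in for the regular expression engine used by ONE (the two agree for a pattern this simple), and because this page does not state whether the pattern must match the whole catalog name or only part of it, the sketch shows both behaviors. `STRICT_PATTERN` is a hypothetical variant, not a documented value.

```python
import re

# Pattern copied from the example property value on this page.
DOC_PATTERN = r"^(SAMPLES)|(samples)|(main)$"
# Hypothetical stricter variant: the anchors wrap the whole alternation.
STRICT_PATTERN = r"^(SAMPLES|samples|main)$"


def excluded(pattern: str, catalogs: list[str], whole_name: bool) -> list[str]:
    """Return the catalog names that the pattern would exclude.

    whole_name=True requires the pattern to match the entire name;
    whole_name=False accepts a match anywhere in the name.
    """
    matcher = re.fullmatch if whole_name else re.search
    return [name for name in catalogs if matcher(pattern, name)]


# Hypothetical catalog names, used only for illustration.
catalogs = ["samples", "main", "SAMPLES", "domain", "my_samples_v2", "sales"]

print(excluded(DOC_PATTERN, catalogs, whole_name=True))
print(excluded(DOC_PATTERN, catalogs, whole_name=False))
print(excluded(STRICT_PATTERN, catalogs, whole_name=False))
```

With partial matching, the example pattern also excludes names such as domain and my_samples_v2, because ^ binds only to the first alternative and $ only to the last. If that is not what you intend, grouping the alternatives inside the anchors, as in the stricter variant, gives the same result under both behaviors.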

Make sure to update the values as needed. For a more detailed description of properties, see Metastore Data Source Configuration.

plugin.metastoredatasource.ataccama.one.cluster.databricks.unity-catalog-enabled=true

plugin.metastoredatasource.ataccama.one.cluster.databricks.name=databricks

plugin.metastoredatasource.ataccama.one.cluster.databricks.url=<JDBC_connection_string>

plugin.metastoredatasource.ataccama.one.cluster.databricks.databricksUrl=<Unity_cluster_URL>

plugin.metastoredatasource.ataccama.one.cluster.databricks.driver-class=com.simba.spark.jdbc.Driver

plugin.metastoredatasource.ataccama.one.cluster.databricks.driver-class-path=${ataccama.path.root}/lib/runtime/jdbc/databricks/*

plugin.metastoredatasource.ataccama.one.cluster.databricks.timeout=15m

plugin.metastoredatasource.ataccama.one.cluster.databricks.authentication=TOKEN

plugin.metastoredatasource.ataccama.one.cluster.databricks.catalog-exclude-pattern=^(SAMPLES)|(samples)|(main)$

plugin.metastoredatasource.ataccama.one.cluster.databricks.full-select-query-pattern=SELECT {columns} FROM {table}

plugin.metastoredatasource.ataccama.one.cluster.databricks.preview-query-pattern=SELECT {columns} FROM {table} LIMIT {previewLimit}

plugin.metastoredatasource.ataccama.one.cluster.databricks.row-count-query-pattern=SELECT COUNT(*) FROM {table}

plugin.metastoredatasource.ataccama.one.cluster.databricks.sampling-query-pattern=SELECT {columns} FROM {table} LIMIT {limit}

plugin.metastoredatasource.ataccama.one.cluster.databricks.dsl-query-preview-query-pattern=SELECT * FROM ({dslQuery}) dslQuery LIMIT {previewLimit}

plugin.metastoredatasource.ataccama.one.cluster.databricks.dsl-query-import-metadata-query-pattern=SELECT * FROM ({dslQuery}) dslQuery LIMIT 0

Create a source

To connect to Databricks Unity Catalog:

- Navigate to Data Catalog > Sources.

- Select Create.

- Provide the following:

  - Name: The source name.

  - Description: A description of the source.

  - Deployment (Optional): Choose the deployment type. You can add new values if needed. See Lists of Values.

  - Stewardship: The source owner and roles. For more information, see Stewardship.

Alternatively, add a connection to an existing data source. See Connect to a Source.

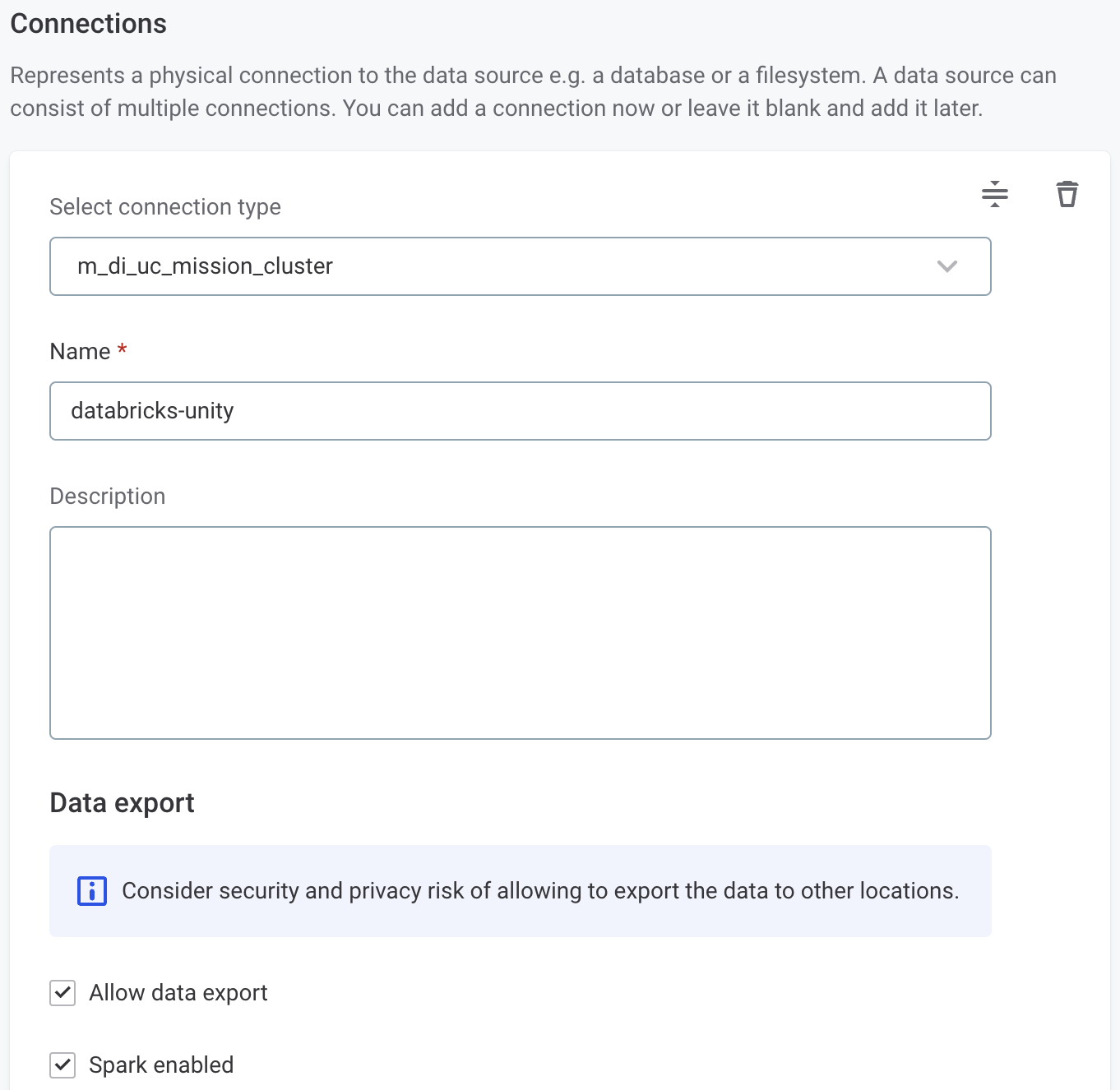

Add a connection

- Select Add Connection.

- In Select connection type, choose Metastore > [your Unity Catalog cluster].

- Provide the following:

  - Name: A meaningful name for your connection. This is used to indicate the location of catalog items.

  - Description (Optional): A short description of the connection.

- Select Spark enabled.

- In Additional settings, select Enable exporting and loading of data if you want to export data from this connection and use it in ONE Data or outside of ONE.

  If you want to export data to this source, you also need to configure write credentials. Consider the security and privacy risks of allowing the export of data to other locations.

Add credentials

- Select Add Credentials.

- In Credential type, select Token credentials.

- Provide the following:

  - Name (Optional): A name for this set of credentials.

  - Description (Optional): A description for this set of credentials.

  - Select a secret management service (Optional): If you want to use a secret management service to provide values for the following fields, specify which service to use. After you select the service, you can enable the Use secret management service toggle and instead provide the names under which the values are stored in your key vault. For more information, see Secret Management Service.

  - Token: The access token for Databricks. For more information, see the official Databricks documentation. Alternatively, enable Use secret management service and provide the name under which this value is stored in your selected secret management service.

- If you want to use this set of credentials by default when connecting to the data source, select Set as default.

  One set of credentials must be set as default for each connection. Otherwise, monitoring and DQ evaluation fail, and previewing data in the catalog is not possible.

Add write credentials

Write credentials are required if you want to export data to this source.

To configure these, in Write credentials, select Add Credentials and follow the corresponding step depending on the chosen authentication method (see Add credentials).

Make sure to set one set of write credentials as default. Otherwise, this connection isn’t shown when configuring data export.

Test the connection

To test and verify whether the data source connection has been correctly configured, select Test Connection.

If the connection is successful, continue with the following step. Otherwise, verify that your configuration is correct and that the data source is running.
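If the test fails and you suspect the access token, you can check the token outside of ONE. The following is a minimal sketch, not part of the ONE configuration: it builds (but does not send) a request to the Databricks REST API endpoint for listing Unity Catalog catalogs, passing the token as a bearer token. The helper name, the workspace URL, and the token value are placeholders, and the endpoint path is an assumption to confirm against the official Databricks REST API reference for your workspace.

```python
import urllib.request


def build_catalog_list_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build a GET request that lists Unity Catalog catalogs.

    Assumption: the workspace exposes /api/2.1/unity-catalog/catalogs.
    """
    url = workspace_url.rstrip("/") + "/api/2.1/unity-catalog/catalogs"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Placeholder values, used only for illustration.
request = build_catalog_list_request(
    "https://example.cloud.databricks.com/", "example-access-token"
)
print(request.get_method(), request.full_url)

# To actually send the request, uncomment the following lines:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(response.status)
```

An authentication error in response to this request would point to a problem with the token itself rather than with the connection settings in ONE.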

Save and publish

Once you have configured your connection, save and publish your changes. If you provided all the required information, the connection is now available for other users in the application.

If your configuration is missing required fields, a list of detected errors is shown instead. Review your configuration and resolve the issues before continuing.

Next steps

You can now browse and profile assets from your Unity Catalog connection.

In Data Catalog > Sources, find and open the source you just configured. Switch to the Connections tab and select Document. Alternatively, opt for the Import or Discover documentation flow.

Or, to import or profile only some assets, select Browse on the Connections tab. Choose the assets you want to analyze and then the appropriate profiling option.
