Run DQ Evaluation

Identify issues in your dataset by running DQ evaluation, which checks the quality of records against the conditions set in your DQ evaluation rules. For example, you can check that all fields are filled, that there are no duplicates, or that values have been entered in the correct format.

Prerequisites

Before running DQ evaluation in Ataccama ONE, run data discovery so that glossary terms are mapped to catalog items and attributes. You also need DQ evaluation rules mapped to those terms. For more information, see Detection and DQ Evaluation Rules.

Alternatively, map DQ rules directly to attributes before running DQ evaluation. For more information, see Add DQ Rules to Attributes.

For in-depth instructions, see the sections below, or head back to Data Quality for a general overview of the data quality evaluation process.

How is data quality calculated?

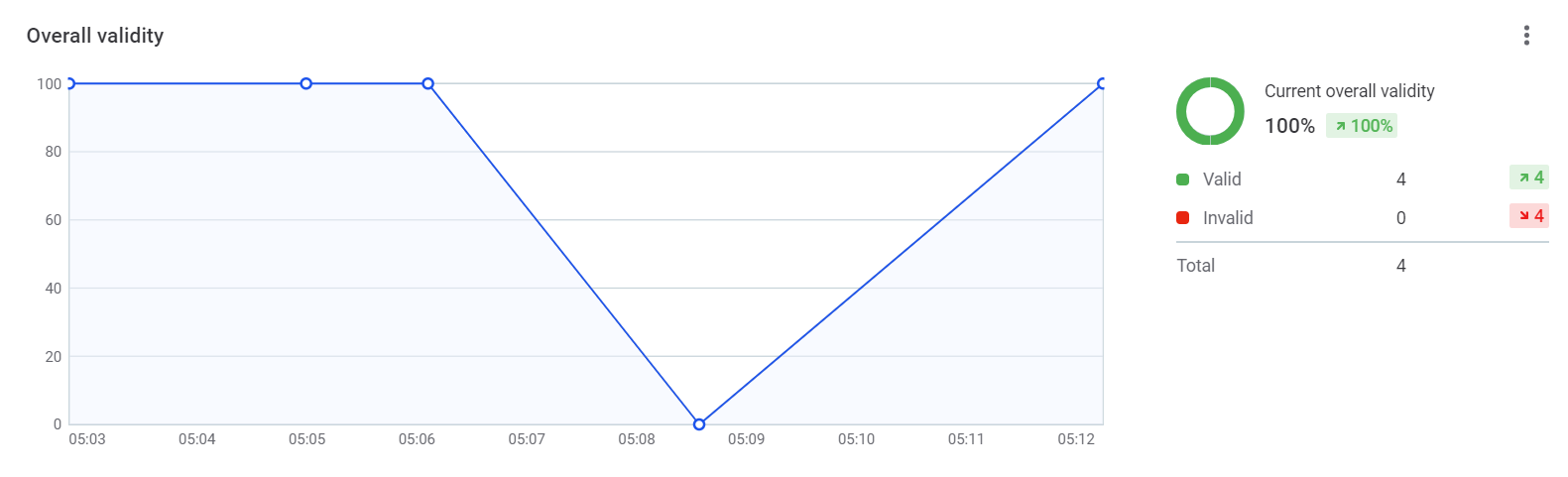

Data quality, represented by the Overall validity metric shown throughout the application, is calculated from the number of values that pass the validity rules applied to them.

Only the results of rules with the dimension Validity (one of the four default dimensions) count towards this metric. If a record has multiple validity rules assigned to it and fails at least one of them, the record is counted as invalid: records are only considered valid if they pass all validity rules assigned to them.
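The counting rule above can be illustrated with a minimal Python sketch. It is not how Ataccama ONE is implemented: the `RuleResult` type, the rule names, and the non-validity dimension name used in the example are hypothetical. It also assumes that records with no validity rule at all are left out of the calculation, which the text above does not specify.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RuleResult:
    rule: str       # hypothetical rule name
    dimension: str  # rule dimension, e.g. "Validity"
    passed: bool    # whether the record passed this rule


def overall_validity(records: list[list[RuleResult]]) -> float | None:
    """Share of records that pass every Validity rule applied to them."""
    valid = 0
    evaluated = 0
    for results in records:
        # Only Validity rules count towards the metric; other dimensions are ignored.
        validity_results = [r for r in results if r.dimension == "Validity"]
        if not validity_results:
            # Assumption: records without any validity rule are not counted at all.
            continue
        evaluated += 1
        # A single failed validity rule makes the whole record invalid.
        if all(r.passed for r in validity_results):
            valid += 1
    return valid / evaluated if evaluated else None


records = [
    [RuleResult("email_format", "Validity", True), RuleResult("not_empty", "Validity", True)],
    [RuleResult("email_format", "Validity", False), RuleResult("not_empty", "Validity", True)],
    [RuleResult("email_format", "Validity", True), RuleResult("is_unique", "Uniqueness", False)],
    [RuleResult("is_unique", "Uniqueness", True)],
]
print(overall_validity(records))  # 2 valid out of 3 evaluated records
```

In this example, the second record is invalid because it fails one of its two validity rules, the third is valid because its failed rule belongs to another dimension, and the fourth has no validity rule and is skipped.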

Evaluate data quality

Data quality evaluation occurs during a number of processes in Ataccama ONE:

Ad hoc DQ evaluation

You can run DQ evaluation on the catalog item, attribute, or term level on an ad hoc basis.

To do this:

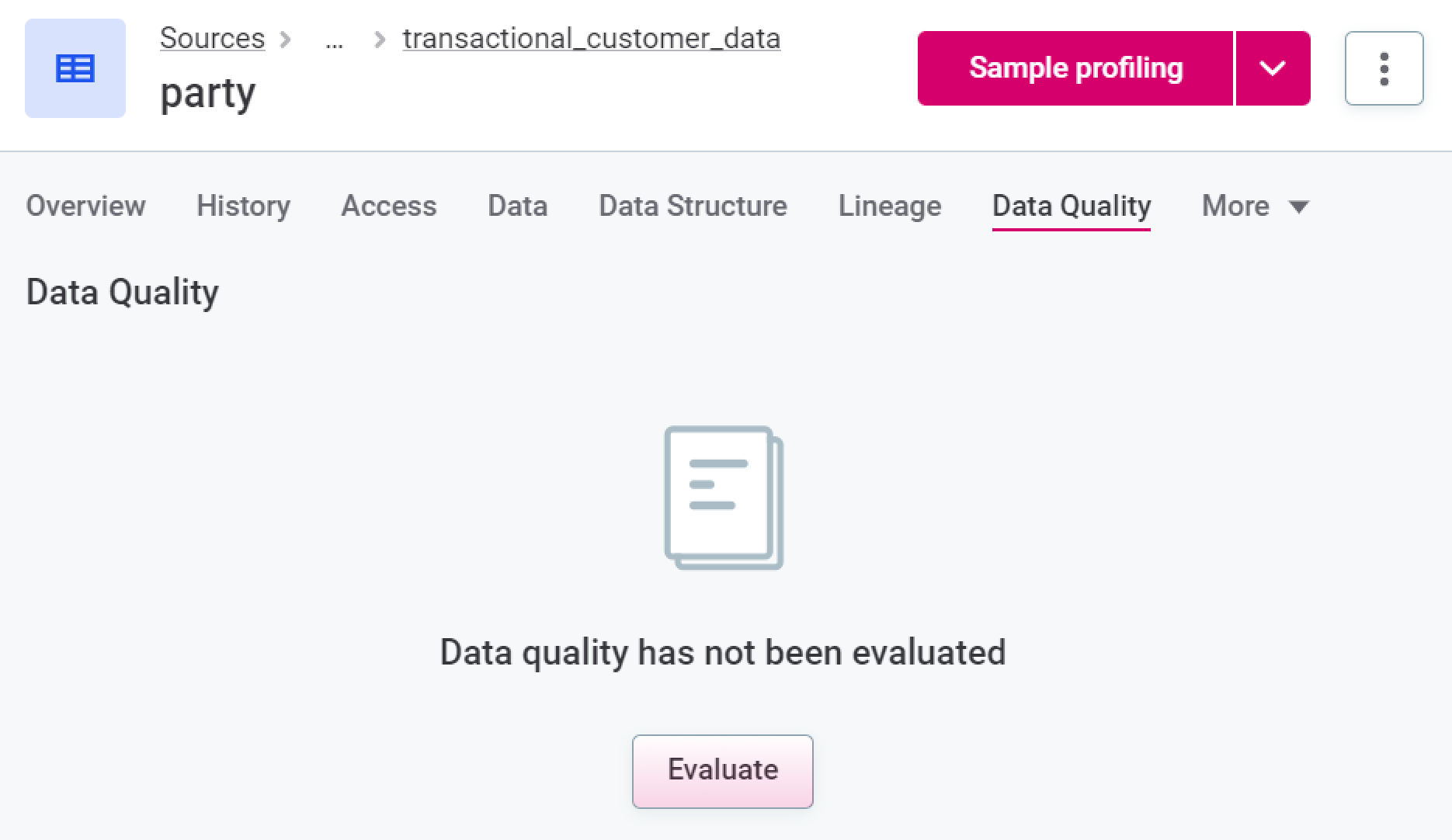

Catalog items and attributes

- In Knowledge Catalog > Catalog Items, select the required catalog item or attribute, and from the Overview or Data Quality tab, select Evaluate.

Terms

-

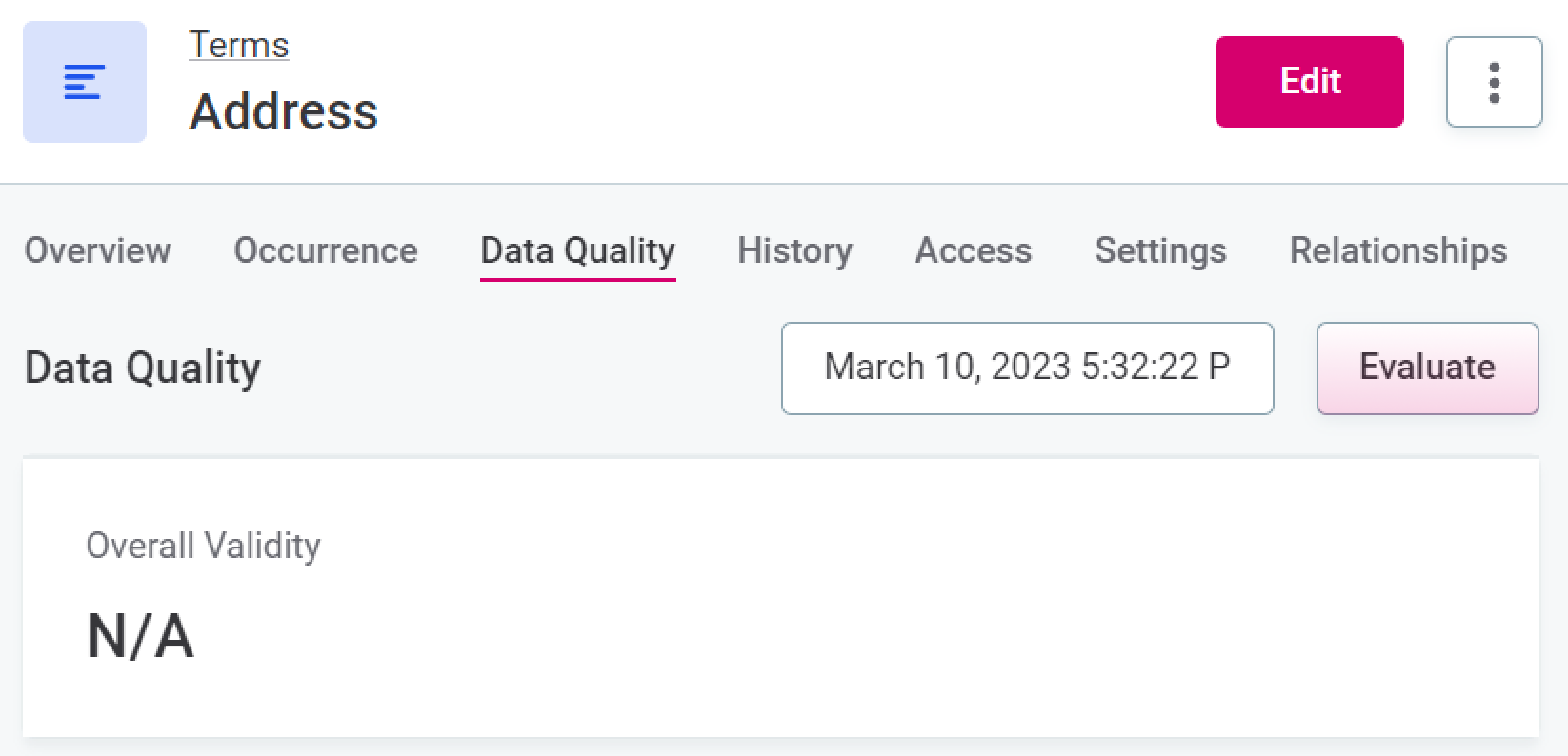

In Business Glossary > Terms, select the required term, and from the Overview or Data Quality tab, select Evaluate.

The data quality of a term is an aggregation of the data quality of the attributes to which the term is assigned.
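The text does not say exactly how attribute results are combined into a term result. As one plausible reading, the following Python sketch pools the counts of valid and evaluated values across all attributes the term is assigned to. The `AttributeResult` type and the attribute names are hypothetical, and Ataccama ONE may weight results differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AttributeResult:
    attribute: str  # hypothetical attribute identifier
    valid: int      # values that passed all validity rules
    evaluated: int  # values checked by at least one validity rule


def term_validity(results: list[AttributeResult]) -> float | None:
    """Pooled validity across every attribute the term is assigned to (assumed method)."""
    total_valid = sum(r.valid for r in results)
    total_evaluated = sum(r.evaluated for r in results)
    return total_valid / total_evaluated if total_evaluated else None


email_term = [
    AttributeResult("customers.email", valid=90, evaluated=100),
    AttributeResult("orders.contact_email", valid=10, evaluated=50),
]
print(term_validity(email_term))  # 100 valid out of 150 evaluated values
```

Pooling weights each attribute by the number of evaluated values, so the larger attribute dominates (about 67% here). A simple average of the per-attribute percentages would give 55% instead.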

Schedule monitoring

You can schedule DQ evaluation via monitoring projects.

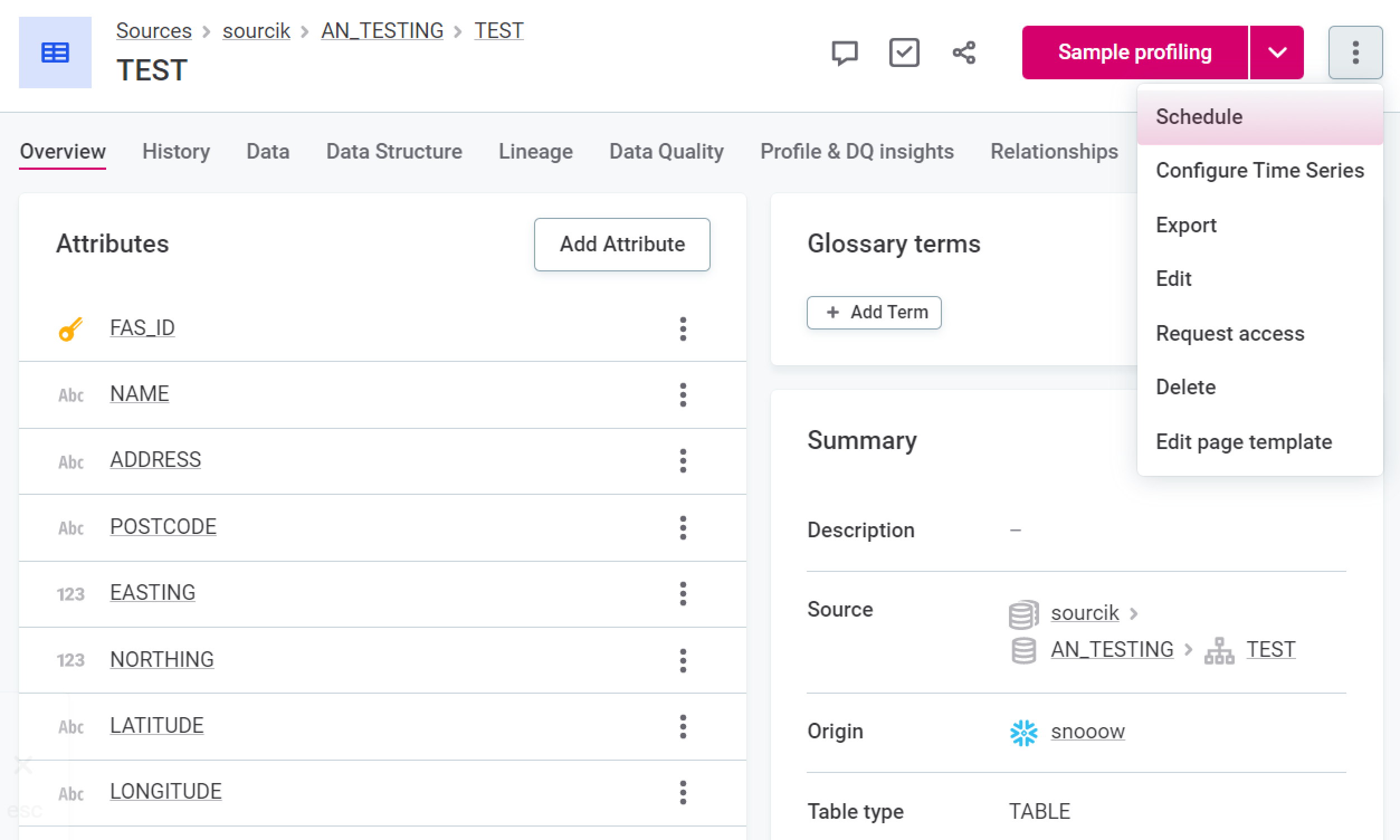

To do this:

-

Select the required project, and in the three dots menu select Schedule.

-

Select Add Scheduled Event.

-

In Type, select DQ eval schedule.

-

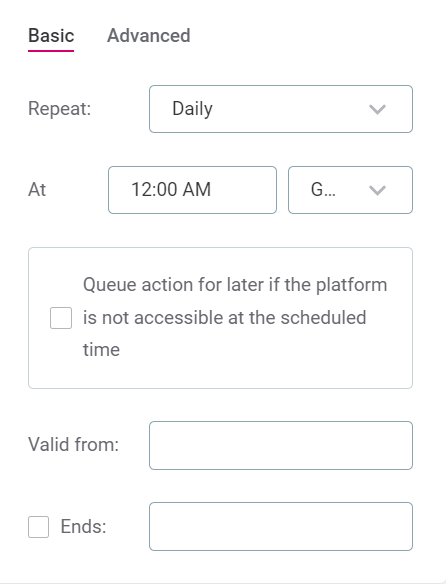

Define the schedule using either Basic or Advanced configuration:

-

For Basic configuration:

-

In Repeat, select from the list of options how often DQ evaluation should run.

-

In At, specify the time (24-hour clock) at which the evaluation should be run, and select from the list of available time zones.

-

If required, select Queue action for later if the platform is not accessible at the scheduled time.

-

In Valid from, define the date from which the schedule should be followed.

-

If the schedule should have a finite end date, select Ends. In this case, you also need to define the date at which the schedule should no longer be effective.

-

-

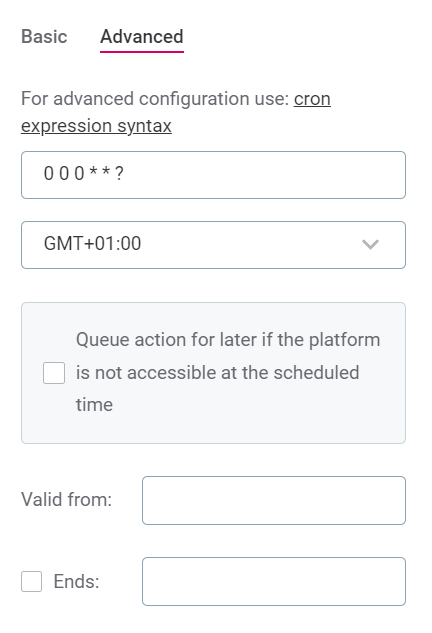

For Advanced configuration:

-

Set the schedule using Cron expression syntax. For more information, see the Cron Expression Generator and Explainer.

-

In Valid from, define the date from which the schedule should be followed.

-

If the schedule should have a finite end date, select Ends. In this case, you also need to define the date at which the schedule should no longer be effective.

-

-

-

Select Save and publish.
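For the Advanced configuration, it can help to check an expression's fields before entering it in the schedule. The following Python sketch assumes the Quartz-style cron format (six or seven space-separated fields, starting with seconds), which is what the linked generator appears to produce; confirm the exact syntax your Ataccama ONE version accepts. The `describe_quartz_cron` function is a hypothetical helper for local checks only, not part of the platform.

```python
from __future__ import annotations

# Field order of a Quartz-style cron expression; the year field is optional.
QUARTZ_FIELDS = ["seconds", "minutes", "hours", "day_of_month", "month", "day_of_week", "year"]


def describe_quartz_cron(expression: str) -> dict[str, str]:
    """Split a Quartz-style cron expression into named fields, with basic shape checks."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}")
    fields = dict(zip(QUARTZ_FIELDS, parts))
    # Quartz expects '?' in exactly one of day-of-month and day-of-week.
    if (fields["day_of_month"] == "?") == (fields["day_of_week"] == "?"):
        raise ValueError("use '?' in exactly one of day_of_month and day_of_week")
    return fields


# Every day at 02:00:00.
print(describe_quartz_cron("0 0 2 * * ?"))
# Every Monday at 06:30:00.
print(describe_quartz_cron("0 30 6 ? * MON"))
```

The check only covers the field count and the `?` rule; it does not validate value ranges or special characters such as `L`, `W`, or `#`.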

Run profiling and DQ evaluation

You can run profiling and DQ evaluation at the catalog item level.

|

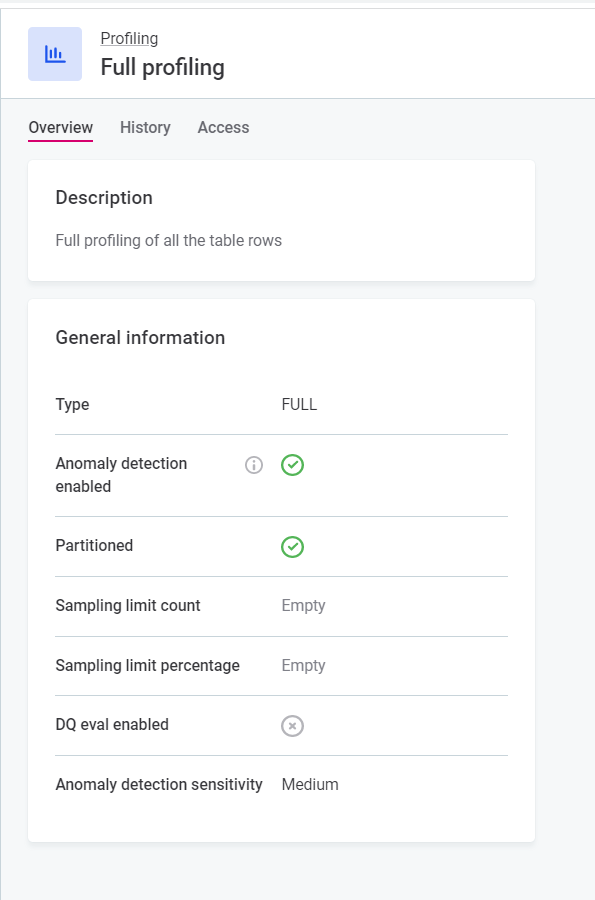

DQ evaluation must first be enabled as part of Full Profiling.

By default it is disabled. To enable it, go to Global Settings > Profiling, and after selecting Full Profiling, select Edit. |

To run profiling and DQ evaluation:

- In Knowledge Catalog > Catalog Items, select the required catalog item, and then start profiling using the dropdown next to Full profiling.

If custom profiling configurations with DQ evaluation enabled have been added, you can also select these from the same dropdown. For more information, see Configure profiling.

Interpret DQ results

Once data quality has been evaluated using one of the methods above, the Overall validity metric is available. It shows an aggregated value that depends on the level at which you view the results and on the DQ checks assigned to attributes:

- When viewing catalog items, the overall validity reflects the quality of all evaluated attributes within the item, as determined by validity rules.
- When viewing attributes, the overall validity reflects results for that attribute only.
- When viewing terms, the overall validity reflects results for all attributes to which that term is assigned.
- In monitoring projects, the overall validity reflects the results of all validity rules applied in the project.
