Run DQC on Cluster

Starts MapReduce or Spark processing on a cluster.

Runs a ONE plan or component in a new Java process: the task waits until the process finishes. This lets you run the ONE plan in a new JVM with a specific Java version and runtime parameters (such as memory settings).

The plan is validated before the run: if the plan is invalid, the task fails with the FINISHED_FAILURE state and the details are written to a log. Logs of the process are stored in the task resources folder. The STDOUT log also contains a copy of the process command.
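The launch, wait, and log behavior described above can be mimicked in shell to make it concrete. This is only an illustration, not how ONE implements the task: the function name `run_and_log` and the log file names `stdout.log` and `stderr.log` are hypothetical.

```shell
# Hypothetical illustration only: run a command as a child process, wait for
# it to finish, and keep its output in log files inside a resources folder.
run_and_log() {
  log_dir=$1
  shift
  mkdir -p "$log_dir" || return 1
  # Write a copy of the command as the first line of the STDOUT log
  {
    printf 'Command:'
    printf ' %s' "$@"
    printf '\n'
  } > "$log_dir/stdout.log"
  # Running the command in the foreground blocks until it exits; its exit
  # status becomes the return status of this function
  "$@" >> "$log_dir/stdout.log" 2> "$log_dir/stderr.log"
}
```

For example, `run_and_log /tmp/task-resources java -version` records the command in `stdout.log`; `java -version` prints its version banner to stderr, so that text ends up in `stderr.log`.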

This task currently requires that the drivers of all database connections created in the IDE are placed in <ATACCAMA_home>/runtime/lib/.
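A minimal sketch of copying driver JARs into that folder. The function name `install_drivers` is hypothetical, and the installation directory (`<ATACCAMA_home>`) is passed explicitly rather than read from an environment variable.

```shell
# Hypothetical helper: copy JDBC driver JARs into <install_dir>/runtime/lib/
install_drivers() {
  install_dir=$1
  shift
  lib_dir="$install_dir/runtime/lib"
  # Fail instead of creating the folder: a missing lib folder usually means
  # the installation path is wrong
  if [ ! -d "$lib_dir" ]; then
    echo "Directory not found: $lib_dir" >&2
    return 1
  fi
  cp -- "$@" "$lib_dir/"
}
```

For example, `install_drivers /opt/ataccama /tmp/drivers/*.jar` (both paths hypothetical) copies every JAR from `/tmp/drivers` into `/opt/ataccama/runtime/lib/`.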

You can set advanced properties for MapReduce and/or Spark processing in the .properties configuration file.


To start Spark processing from ONE Desktop, use the remote executor. The task cannot start Spark processing on a cluster via a native connection. To trigger Spark processing via a native connection, use the …

Properties

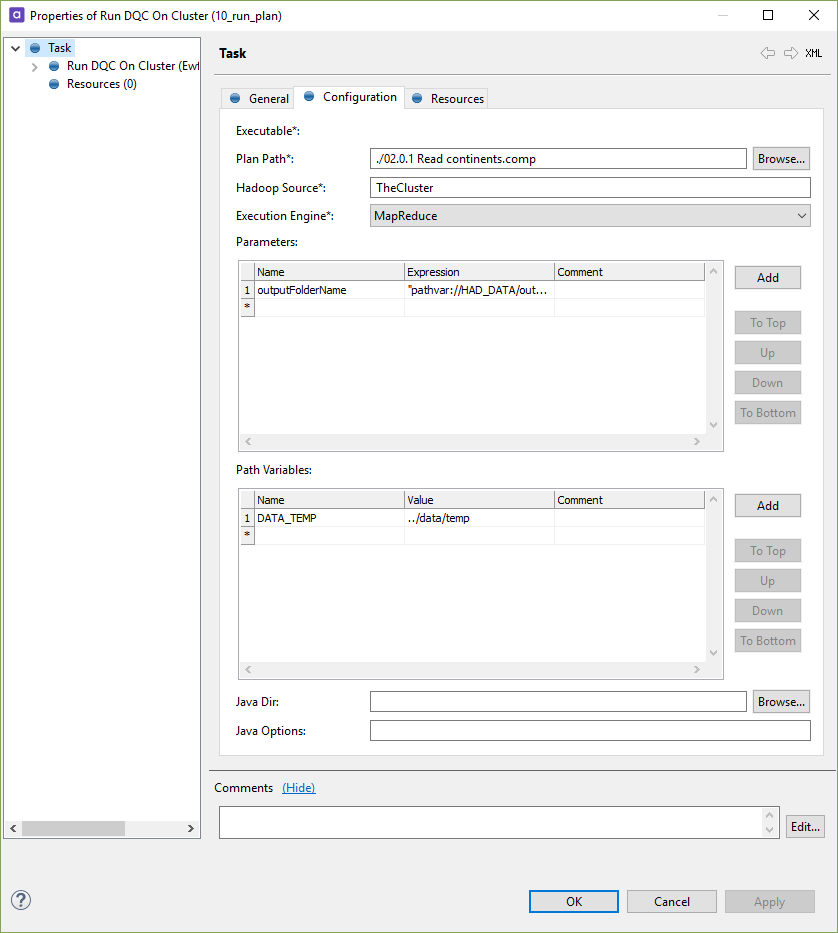

| Name | Type | Description | Expression support |
|---|---|---|---|
| Plan Path | mandatory | Relative (to the workflow file) or absolute path to the plan or component that should be run on a cluster. | semi-expression |
| Hadoop Source | mandatory | Name of an existing cluster connection. | semi-expression |
| Execution Engine | mandatory | Selects the data processing engine (MapReduce or Spark) used to run plans on a cluster. | none |
| Parameters | optional | Set of parameters to pass to the ONE component. | none |
| Path Variables | optional | Set of local path variables to use with the current task. | none |
| Java Dir | optional | Defines the path of the Java JRE or JDK directory to use. The defined directory must contain either … If this attribute is not specified, the JRE is autodetected using Java’s … | none |
| Java Options | optional | Space-separated JVM configuration options to pass to the Java Virtual Machine. | none |
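To show how the Java Dir and Java Options properties relate to the launched process, the following sketch assembles a `java` command line. It assumes the Java executable sits at `bin/java` under Java Dir (the exact requirement is not stated above), and it falls back to `java` on the `PATH`, which is a simplification of the task's autodetection. The function `build_java_cmd` is hypothetical.

```shell
# Hypothetical sketch: combine Java Dir and Java Options into a java command line
build_java_cmd() {
  java_dir=$1
  java_opts=$2
  if [ -n "$java_dir" ]; then
    # Assumption: the executable lives in <Java Dir>/bin/java
    java_bin="$java_dir/bin/java"
  else
    # Simplification: use whatever java is on PATH instead of real autodetection
    java_bin=java
  fi
  # Java Options is space-separated, so split it into separate arguments
  # (globbing is disabled so options containing * are not expanded)
  set -f
  set -- $java_opts
  set +f
  printf '%s' "$java_bin"
  if [ $# -gt 0 ]; then
    printf ' %s' "$@"
  fi
  printf '\n'
}
```

For example, `build_java_cmd /opt/jdk17 '-Xms1g -Xmx4g'` prints `/opt/jdk17/bin/java -Xms1g -Xmx4g`. Because the options are split on whitespace, a single option value cannot contain spaces in this sketch.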

Set ATACCAMA_HOME on Linux

The Run DQC Process task runs as a separate Java process, so it does not inherit the ATACCAMA_HOME variable.

To make ATACCAMA_HOME available to Run DQC Process on Linux, you must set it as a global variable.

Make sure the variable is set for the user that runs the online server.

To set the global variable:

1. Navigate to the user's home directory, for example, `/home/<user_name>`.
2. Open the hidden file `.bash_profile` and add the following parameter: `export ATACCAMA_HOME=/one20/app/server/runtime`. Make sure the parameter points to the correct distribution.
3. Stop the server.
4. Log out from your Linux account and log back in.
5. Start the server.
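Step 2 can also be scripted. The sketch below appends the export line to a profile file only if that exact line is not already present, so running it twice does not duplicate the entry. The function name `add_ataccama_home` is hypothetical; `/one20/app/server/runtime` is the example path from the steps above and must be replaced with your own distribution.

```shell
# Hypothetical helper: add "export ATACCAMA_HOME=<value>" to a profile file once
add_ataccama_home() {
  profile=$1
  value=$2
  line="export ATACCAMA_HOME=$value"
  touch "$profile" || return 1
  # -q: no output, -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF -- "$line" "$profile"; then
    printf '%s\n' "$line" >> "$profile"
  fi
}
```

Run `add_ataccama_home "$HOME/.bash_profile" /one20/app/server/runtime` as the user that runs the online server. After logging back in, `echo "$ATACCAMA_HOME"` should print the configured path, because Bash login shells read `~/.bash_profile`.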
