Features

MDM embodies the best practices adapted toward an MDM project. It follows a model-driven architecture at its core and is essentially a domain-agnostic MDM, which enables developers to define a domain-specific MDM through models.

The following are the high-level features divided by type.

Model-driven architecture

Domain-agnostic MDM supported with vertical models (metadata templates) that are domain-specific and can be customized and extended to fit particular project needs.

For detailed information about the architecture and model-driven nature of Ataccama MDM, see MDM Model.

Internal workflow and processing features

The following are highlights of the MDM features:

-

Out-of-the-box change detection on load. MDM is able to detect changes from source instance records fed to the MDM through a track of their unique source identifiers.

In addition, it can detect updates or differences on the different attributes, triggering the MDM process for that record in case it changed. The delta detection has an attribute-level granularity, hence developers can define a subset of attributes that should not trigger the MDM processing. All of them are considered by default.

-

Entity-by-entity-oriented processing. The processing of the MDM is done on entity level, that is, entities are processed in the MDM in parallel as independent abstractions.

If any dependency between entities needs to be satisfied in order to process a subsequent phase of the MDM, MDM will create them according to the MDM execution plan.

-

Data transfer and linkage between entities. Entities can have their columns copied to other entities through their relationships.

This allows users to transfer values of importance to support MDM processes on a particular entity, for example, copying values from a supporting entity (on a 1:N relationship) to its parent entity, so the data can be used for matching.

-

Consistent MDM processes. This refers to data cleansing, matching and merging plans across all processing modes (batch, online, hybrid) and all source systems (through a common canonical model that accommodates all heterogeneous representations of the same entities).

The following diagram provides a high-level view of the internal workflow, including the MDM processes for master data consolidation.

Integrated internal workflow with logical transaction

The integrated internal workflow of MDM automates and orchestrates the MDM processes transparently, enabling users to focus on the MDM processes themselves and not in the overhead of the integration and execution planning of those tasks.

At the same time, the logical transaction and the integrated workflow serve for the purposes of:

-

Enabling coexistence of batch, stream, and online processes. This allows for a hybrid deployment of MDM, and supports SOA-ready MDM that also interacts with legacy or not SOA-enabled systems through batch interfaces.

-

Parallel batch and online processing. Mainly suitable for online parallel processing, but batch operations can also be processed in parallel.

-

Consistent data providing. Read-Only services are allowed to access actual data as providers, while Read-Write services are within the data scope of the logical transaction.

-

Data consistency. The logical transaction also secures rollback capability.

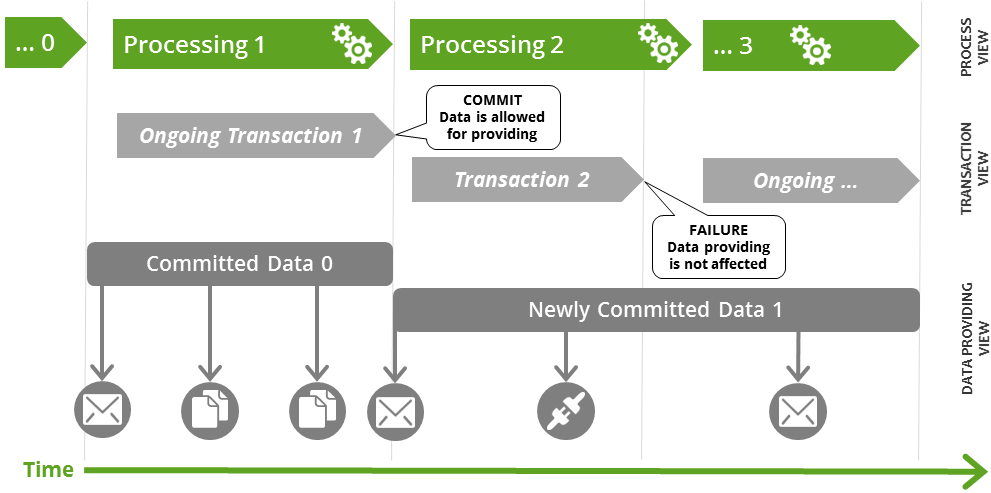

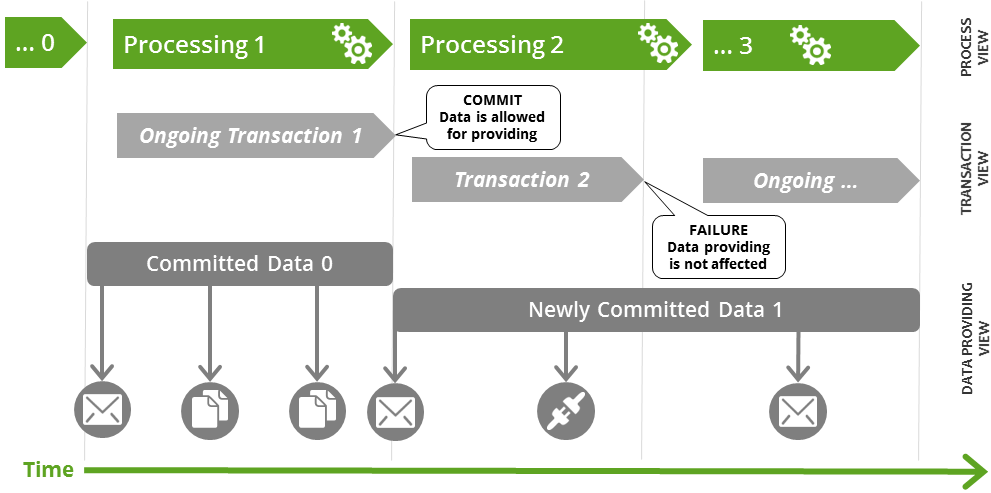

Serial logical transaction

The following diagram shows a set of serialized MDM processing activities, specifically the reading activities while an ongoing transaction takes place, which can either fail or be committed.

It also shows how the data providing is affected by the transaction being successful or being rolled back.

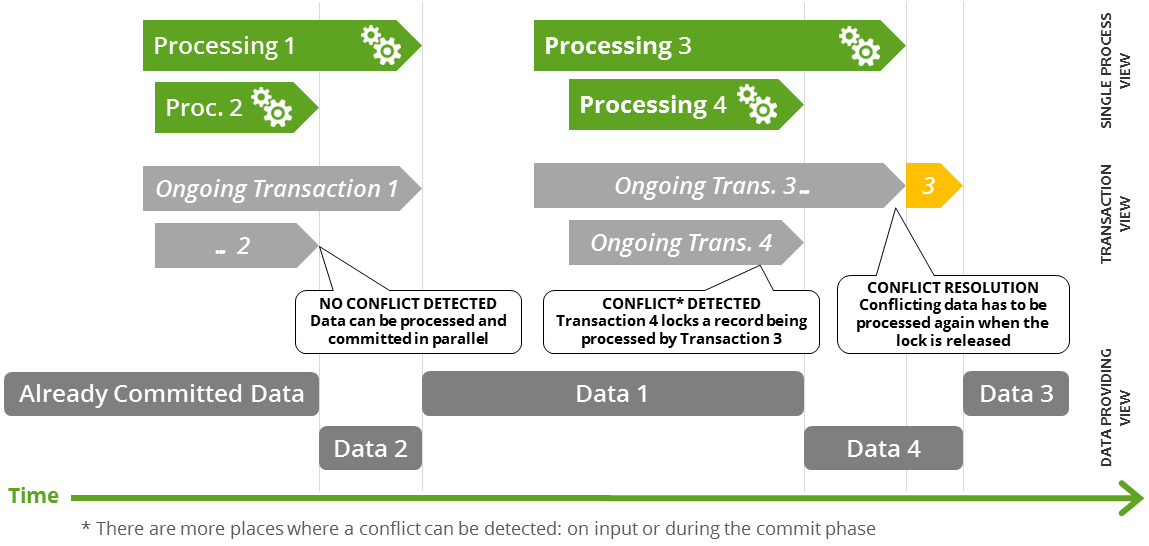

Parallel logical transaction

The following diagram shows a series of MDM processing activities in parallel. Parallel processing detects possible conflicts, for example, the same instance record, with conflict in the committing phase.

If no conflict is detected, transactions are fully parallel. If a conflict occurs, conflicted records are identified and locked.

When a lock is released, the conflicting records are reprocessed in the given order (based on the source timestamp), and the whole transaction is committed when the conflict is resolved.

There are several working modes, but parallelism is suitable mainly for online and/or streaming data processing.

Business lineage and versioning

MDM stores data and supports both logical and physical deletes through housekeeping activities. It provides metadata engine flags to mark different statuses of records and enables activation and deactivation of selected records as per their source system updates. Historical record updates can be also stored if activated (not enabled by default).

Hence, MDM provides the following features out-of-the-box:

-

Ability to maintain inactive records for business lineage through logical deletes. Different system-level handling for the active-inactive records.

-

Ability to capture changes and publish them, creating versioning history. Publishing is done to an external component of the architecture (for example, a data warehouse or database).

MDM is capable of publishing intra-day changes (using Event Publisher) or snapshots (using Batch Export).

-

Versioning records of selected entities and layers. History data can be provided by the native service interface and batch export.

The most common versioning setup would be publishing changes to an external component designated for this role.

Versioning records within MDM by using historical tables is possible, but it requires additional resources and extra database space. Using MDM as a data warehouse replacement is discouraged.

Native MDM services

MDM automatically generates a set of services over the different entities defined in the model. Therefore, the services do not need to be defined or implemented and are instead made available by configuration.

These cover all common services, including providers and setters. A set of supporting services for maintenance is also provided.

These are the most common native services:

-

Get: Retrieves a list of attributes from a record in a given entity. Get services are generated per each entity, and the service name is composed by the Get directive and the name of the entity.

A Get service exists for as every defined layer (the instance and any master layers that can be defined). The Get service takes the identifier as the parameter and retrieves the whole record.

-

List: Produces a list of records and accepts an offset and a number of records to be retrieved. It can be typically used in conjunction with other services for creating composed services that produce multiple options.

-

Search: Retrieves a list of records that match some search criteria, given as a parameter. It also accepts a number of maximum records to be retrieved.

-

Traverse: A complex service that is able to walk through entity relationships to retrieve a record and all related records from other entities as demanded by the query.

The traverse query needs to define the different relationships that should be retrieved, and a composed nested response is taken as the result.

-

Identify: Queries MDM repositories, looking for a record that matches the record passed as a parameter. For that, MDM simulates the MDM processing for the passed record, including a simulation of the matching process, and returns the resulting identified records.

This service can be used in conjunction with other services to create a powerful service, such as identify-then-insert with some criteria depending on the identification results.

-

ProcessDelta: Plays a role of an "upsert" service, functioning both as an Insert or an Update to an existing source record, which is identified by its unique source ID.

The ProcessDelta takes a record as its parameter, and launches the MDM processes as it would do with batch processing. The records need to be mapped directly to the canonical structure.

In other words, there is no loading and mapping phase when using ProcessDelta. Instead, the process directly starts at the cleansing MDM process.

Besides the native MDM services, there are DQ Services, which can be used through online interfaces. Any functionality implemented in MDM, such as other components, or Data Quality processes, can be exposed as online services without any additional configuration.

Online services are tied to the original implementations, so changes in the MDM components exposed as services take an immediate effect on the service as well, reducing the configuration to a minimum.

Reference data and related features

MDM allows defining reference data as part of the mastering process, and within the same domain models. This enables validation against reference data out-of-the-box by mapping attributes to reference data dictionaries, also called lookups.

It also generates validations against those dictionaries automatically, leveraging the model and avoiding manual implementation of reference data checks.

-

Reference Data: Models include reference data models for defining dictionaries and their master versions, providing master reference data across sources, and effectively creating a bridge to master reference values on loading or cleansing processes.

Attributes defined in model entities can then be mapped to reference data dictionaries, with all logic being generated with the MDM process plans.

-

Refreshing: This feature serves for regenerating lookups when reference data is altered but uses a temporary copy of previous lookups until the new ones are made available. As such, it ensures business continuity and no downtime during lookup regeneration.

-

Reprocess: Existing data might need reprocessing after the reference data is changed, even if there are no updates coming from the original source systems.

MDM provides out-of-the-box options to trigger a reprocess, also enabling selective reprocessing based on metadata aspects (IDs, source systems, and so on).

For advanced administration and use of reference data, we recommend using Ataccama Reference Data Manager. This tool integrates seamlessly with Ataccama MDM to provide strong and tightly integrated master and reference data management. For more information about Ataccama RDM, see Welcome to RDM.

Stream Interface

The Stream Interface enables reading messages from a queue using JMS and transforms messages into MDM entries. Messages in the queue are processed after the queue collects the defined maximum number of messages or after the defined maximum waiting time. The processing starts whenever one of the parameters is satisfied.

Event Handler and Event Publisher

Event Handler and Event Publisher capture certain user-defined changes during data processing. Multiple event handlers can be defined.

-

Event handlers are able to function in the asynchronous mode and support filtering to select only certain events. Ultimately, the events handlers can trigger a publishing event.

-

Event publishers can be of different types, for example, publishing to the standard output, to asynchronous services, as an asynchronous message, or even some custom or proprietary-channel publishers.

The EventHandler class persists the events and therefore requires available persistent memory to be used.

High availability

One of the non-functional top features offered by Ataccama is the high availability capability. MDM supports HA in a variety of different modes (Active-Active, Active-Passive) and relies on Zookeeper.

Various services are supported, depending on the mode and the chosen architecture. Balancing can be secured by an external tool, for example, BigIp F5 or similar.

While MDM provides high availability, the fact that the tool remains storage-platform-agnostic means that high availability needs to be supported on the storage side as well (for example, by Oracle RAC). Otherwise, the storage becomes a single point of failure.

For more information, see High Availability Overview.

Was this page useful?