How to Secure Remote Executor with Apache Knox

This section describes the Apache Knox configuration required to integrate with the Remote Executor. Apache Knox is an Apache gateway service that enables secure communication between your Hadoop clusters and external systems, such as Ataccama ONE.

When you use Apache Knox, ONE Runtime Server with the Remote Executor component must be configured on your edge node. In this deployment option, the Data Processing Engine (DPE) is located outside the Hadoop DMZ and communicates with Hadoop and ONE Runtime Server over REST through the Apache Knox API.

DPE Spark jobs, such as profiling, DQ evaluations, and plans, are started on ONE Runtime Server on your edge node instead of on DPE. DPE itself uses two Knox services: Hive JDBC, and ONE Runtime Server through a newly created Ataccama-specific Knox service. To browse a data source such as Hive, DPE sends a JDBC request through Knox, which passes it on to Hive.

| Make sure you have access to Apache Ambari and Apache Knox servers. For more information about Knox configuration, see Apache Knox Gateway User Guide and Adding a server to Apache Knox. |

Authentication

To allow Apache Knox user impersonation by the Remote Executor, the executor requires a "superuser" Kerberos principal and its keytab.

- Issue a Kerberos principal that will be used to authenticate Ataccama Remote Executor to the cluster.

- Issue a Kerberos keytab with access to Hadoop services.

- Specify the path to these files in bde_conf.xml.

For more information about superusers, see Superusers.
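If your cluster uses an MIT Kerberos KDC, you can create and check the principal and keytab from the command line. The following is a minimal sketch, not an Ataccama-provided tool. It assumes you run it as root on the KDC host where kadmin.local is available. The script name, principal, and keytab path in the usage lines are hypothetical. With Active Directory, the procedure differs, so ask your Kerberos administrator to issue the principal and keytab.

```shell
#!/bin/sh
# Sketch: create and check the "superuser" principal and keytab used by the
# Remote Executor. ASSUMPTIONS: MIT Kerberos KDC, run as root on the KDC host
# where kadmin.local is available; all names below are hypothetical.

# Print the realm of a principal (the part after "@"); fail if there is none.
principal_realm() {
    case "$1" in
        *@?*) printf '%s\n' "${1##*@}" ;;
        *) return 1 ;;
    esac
}

# Create the principal with a random key and export its key to a keytab file.
create_keytab() {
    kadmin.local -q "addprinc -randkey $1" || return 1
    kadmin.local -q "ktadd -k $2 $1"
}

# List the keytab entries, then obtain a ticket using only the keytab.
check_keytab() {
    klist -kt "$2" || return 1
    kinit -kt "$2" "$1"
}

# Hypothetical usage:
#   ./executor_keytab.sh create ataccama/edge01.example.com@EXAMPLE.COM /tmp/ataccama.keytab
#   ./executor_keytab.sh check  ataccama/edge01.example.com@EXAMPLE.COM /tmp/ataccama.keytab
if [ "$#" -eq 3 ]; then
    if ! principal_realm "$2" >/dev/null; then
        echo "The principal must include a realm, for example user@EXAMPLE.COM" >&2
        exit 1
    fi
    case "$1" in
        create) create_keytab "$2" "$3" ;;
        check) check_keytab "$2" "$3" ;;
        *) echo "usage: $0 create|check PRINCIPAL KEYTAB" >&2; exit 1 ;;
    esac
fi
```

After the check succeeds, copy the keytab to the edge node and make it readable only by the operating system user that runs the Remote Executor. Then reference it in bde_conf.xml.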

Apache Knox service for Ataccama Remote Executor

- Add a new Apache Knox service for Ataccama Remote Executor that will be used for communication between the Remote Executor and the BDE clients. Define a new BDE service in the Apache Knox topology configuration with the address of the edge node where Ataccama Remote Executor is installed, for example:

Knox topology example:

```xml
<service>
    <role>BDE</role>
    <url>http://executor.ataccama.com:8888</url>
</service>
```

- To configure the Ataccama Remote Executor service, create service.xml and rewrite.xml service definitions for Apache Knox. To store the files, create a bde/0.0.1 folder in /usr/hdp/<version>/knox/data/services/:

rewrite.xml:

```xml
<rules>
    <rule dir="IN" name="BDE/bde/inbound" pattern="*://*:*/**/bde/{path=**}?{**}">
        <rewrite template="{$serviceUrl[BDE]}/{path=**}?{**}"/>
    </rule>
</rules>
```

service.xml:

```xml
<service role="BDE" name="bde" version="x.x.x">
    <routes>
        <route path="/bde/**"/>
    </routes>
</service>
```

- Navigate to Apache Ambari and restart the Knox service to apply the changes.

- To test the BDE service configuration, use the following links. If the service is configured correctly, the content of both pages is the same.

  - https://<knox_server>:8443/gateway/default/bde/executor: Link to the Remote Executor via Knox.
  - http://<executor_host>:8888/executor: Link to the Admin Center Remote Executor web page, served directly from the edge node configured in the Knox topology.
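You can also script the comparison of the two pages. The sketch below is an illustration that relies on several assumptions. It expects curl on the PATH and uses the default topology name and port 8443 from the Knox link. It builds the direct URL over http on port 8888 from the edge node host, as in the topology example, and prompts for the Knox user's password. The script name and host names in the usage line are hypothetical.

```shell
#!/bin/sh
# Sketch: check that Knox forwards /bde requests to the Remote Executor by
# comparing the page served through Knox with the page served directly.
# ASSUMPTIONS: curl is installed, the topology is named "default", Knox
# listens on 8443 and the executor on 8888 (as in the topology example).

# URL of the Remote Executor page through the Knox gateway.
knox_bde_url() {
    printf 'https://%s:8443/gateway/%s/bde/executor\n' "$1" "${2:-default}"
}

# URL of the Remote Executor page directly on the edge node.
direct_executor_url() {
    printf 'http://%s:8888/executor\n' "$1"
}

compare_pages() {
    via_knox=$(mktemp) && direct=$(mktemp) || return 1
    # -k skips certificate verification (for example, for a self-signed Knox
    # certificate); "-u user" makes curl prompt for the password;
    # -f turns HTTP errors into a failure status.
    curl -sfk -u "$3" -o "$via_knox" "$(knox_bde_url "$1")" &&
        curl -sf -o "$direct" "$(direct_executor_url "$2")" &&
        diff -q "$via_knox" "$direct" >/dev/null
    status=$?
    rm -f "$via_knox" "$direct"
    if [ "$status" -eq 0 ]; then
        echo "BDE service OK: both pages have the same content"
    else
        echo "BDE service check failed (status $status)" >&2
    fi
    return "$status"
}

# Hypothetical usage: ./check_bde.sh knox.example.com executor.example.com knoxuser
if [ "$#" -eq 3 ]; then
    compare_pages "$1" "$2" "$3"
fi
```

A byte-for-byte diff can report differences if the executor page contains dynamic content such as timestamps. In that case, compare the two pages visually.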

Troubleshooting

Renew Knox certificate

Move the existing gateway.jks keystore from /var/lib/knox/data/security/keystores to another location, and then restart Knox.

Knox generates a new certificate automatically when it starts.
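You can script the renewal steps. This is a minimal sketch that assumes the script runs as a user that can write to /var/lib/knox/data/security/keystores, for example root. The backup folder and script name are hypothetical. Restarting Knox is left to Ambari.

```shell
#!/bin/sh
# Sketch: move gateway.jks out of the Knox keystore folder so that Knox
# generates a new certificate the next time it starts.
# ASSUMPTION: the backup folder passed as the argument is your own choice.

KEYSTORE_DIR=${KEYSTORE_DIR:-/var/lib/knox/data/security/keystores}

# Time-stamped backup file name inside the backup folder.
backup_path() {
    printf '%s/gateway.jks.%s\n' "$1" "$2"
}

move_gateway_keystore() {
    src="$KEYSTORE_DIR/gateway.jks"
    if [ ! -f "$src" ]; then
        echo "No keystore found at $src" >&2
        return 1
    fi
    mkdir -p "$1" || return 1
    dest=$(backup_path "$1" "$(date +%Y%m%d%H%M%S)")
    mv "$src" "$dest" || return 1
    echo "Moved $src to $dest. Restart Knox (for example, from Ambari)."
}

# Hypothetical usage: ./renew_knox_cert.sh /root/knox-keystore-backup
if [ "$#" -eq 1 ]; then
    move_gateway_keystore "$1"
fi
```

Keeping the old keystore in a time-stamped backup lets you restore it if the regenerated certificate causes problems for existing clients.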

Missing Knox certificate

The error "unable to find valid certification path to requested target" appears when you attempt to start a plan on a cluster and the Knox certificate is missing from the Java truststore (cacerts):

```
javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target
    at sun.security.ssl.Alerts.getSSLException(Alerts.java:192)
    at sun.security.ssl.SSLSocketImpl.fatal(SSLSocketImpl.java:1949)
    at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:302)
    at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:296)
    ...
```

To solve the issue:

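- Add the certificate to the cacerts keystore. On Windows, run the following command from the bin folder of your Java installation:

```
./keytool.exe -importcert -trustcacerts -alias <alias> -keystore ../lib/security/cacerts -storepass changeit -file <path_to_certificate>/<certificate>.cer
```

On Linux, the command is:

```
/usr/<java-path>/bin/keytool -importcert -trustcacerts -alias <alias> -keystore /<path-to-keystore>/cacerts -storepass changeit -file /<path-to-certificate>/<certificate>.cer
```

If the certificate is stored in a PKCS#12 file instead, import it with:

```
/usr/<java-path>/bin/keytool -importkeystore -destkeystore /<path-to-keystore>/cacerts -srckeystore /<path-to-certificate>/certificate.p12 -srcstoretype pkcs12
```

- Information about the certificate appears. When asked whether to trust this certificate, type yes. The following message confirms that the certificate was added:

```
Certificate was added to keystore
```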
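If you do not have the Knox certificate as a file, you can fetch it from the gateway and import it in one step. This minimal sketch relies on several assumptions:

- openssl and keytool are on the PATH.
- JAVA_HOME points to the JVM that starts the plan.
- Knox listens on port 8443.
- The truststore still uses the default password changeit.

The alias knox-gateway and the script name are hypothetical. Writing to cacerts usually requires administrator rights. Before you type yes, compare the fingerprint that keytool prints with the certificate on the Knox server.

```shell
#!/bin/sh
# Sketch: fetch the certificate that Knox presents and import it into the
# cacerts truststore of the JVM that starts the plan.
# ASSUMPTIONS: openssl and keytool are on the PATH, JAVA_HOME is set, Knox
# listens on 8443, the truststore password is the default "changeit", and
# the alias "knox-gateway" is a hypothetical name.

# A full JDK 8 keeps cacerts in jre/lib/security; a JRE or JDK 9+ in lib/security.
cacerts_path() {
    if [ -f "$1/jre/lib/security/cacerts" ]; then
        printf '%s\n' "$1/jre/lib/security/cacerts"
    else
        printf '%s\n' "$1/lib/security/cacerts"
    fi
}

import_knox_cert() {
    cert_file=$(mktemp) || return 1
    # Save the server certificate (the first one in the chain) as PEM.
    if ! openssl s_client -connect "$1:8443" -servername "$1" </dev/null 2>/dev/null |
        openssl x509 -outform PEM >"$cert_file"; then
        echo "Could not read a certificate from $1:8443" >&2
        rm -f "$cert_file"
        return 1
    fi
    # keytool prints the certificate details and asks whether to trust it.
    keytool -importcert -trustcacerts -alias knox-gateway -file "$cert_file" \
        -keystore "$(cacerts_path "$JAVA_HOME")" -storepass changeit
    status=$?
    rm -f "$cert_file"
    return "$status"
}

# Hypothetical usage: ./import_knox_cert.sh knox.example.com
if [ "$#" -eq 1 ]; then
    import_knox_cert "$1"
fi
```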

Access time for HDFS not configured

The error "access time for hdfs not configured" appears when you attempt to start a plan on a cluster where the dfs.namenode.accesstime.precision configuration parameter is not set.

The dfs.namenode.accesstime.precision parameter defines, in milliseconds, how precisely the access time of an HDFS file is recorded. Setting it to 0 disables access times for HDFS.

The parameter is used to remove old files (uploaded during jobs) from the temporary folder: files that have not been accessed on the server for longer than the specified period are removed.

To solve the issue:

-

Open

hdfs-site.xmland set thedfs.namenode.accesstime.precisionparameter to a value between0and36000000:-

1to3600000(recommended): All files that were not used for longer than the specified value (ms) are removed. -

0: If set to this, old files are not removed.hdfs-site.xml<property> <name>dfs.namenode.accesstime.precision</name> <value>3600000</value> </property>Make sure to change hdfs-site.xmlof both ONE Desktop and ONE Runtime Server server (for example,<ONE_build>/runtime/server/executor/client_conf/hdfs-site.xml.

-

-

In case step 1 does not solve the issue, set the parameter in Ambari. Depending on the settings, Ambari is able to overwrite values in

hdfs-site.xml.-

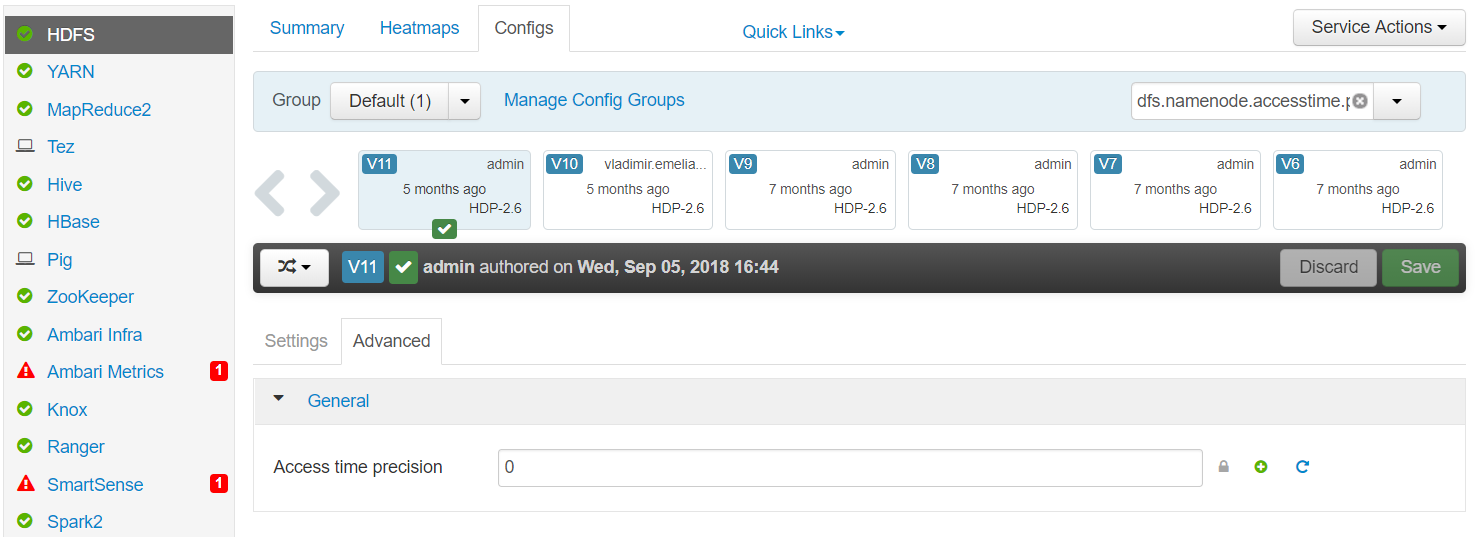

Log in to Ambari as a cluster admin.

-

Navigate to HDFS > Configs > Advanced and set the Access time precision:

-

Restart all required services.

-
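To confirm which value the client configuration actually resolves, read it with hdfs getconf and compare it with the ranges above. This is a minimal sketch that assumes the hdfs client is installed and configured on the host where you run it. The script name, the check argument, and the status labels are hypothetical. hdfs getconf reads the local client configuration, not the running NameNode.

```shell
#!/bin/sh
# Sketch: read dfs.namenode.accesstime.precision with "hdfs getconf" and
# classify it against the documented values.
# ASSUMPTIONS: a configured hdfs client; the status labels are hypothetical.

# Classify a precision value given in milliseconds.
precision_status() {
    case "$1" in
        ''|*[!0-9]*) echo "invalid"; return 1 ;;   # empty or not a number
    esac
    if [ "$1" -eq 0 ]; then
        echo "disabled"       # access times off: old files are not removed
    elif [ "$1" -le 3600000 ]; then
        echo "recommended"    # 1 ms up to 1 hour
    elif [ "$1" -le 36000000 ]; then
        echo "allowed"
    else
        echo "invalid"
        return 1
    fi
}

# Hypothetical usage: ./check_precision.sh check
if [ "${1:-}" = "check" ]; then
    value=$(hdfs getconf -confKey dfs.namenode.accesstime.precision) || exit 1
    echo "dfs.namenode.accesstime.precision=$value ($(precision_status "$value"))"
fi
```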

Knox is not allowed to impersonate <user>

The error "User: knox is not allowed to impersonate <user>" appears when you attempt to start a plan on a cluster as a user who has no access to the Remote Executor.

To solve the issue:

-

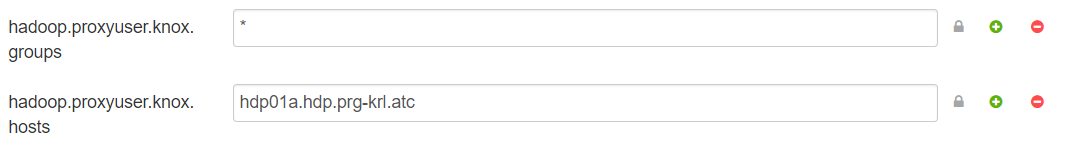

Log in to Ambari as a cluster admin.

-

Navigate to HDFS > Configs > Custom core-site.

-

Give access to the Remote Executor to the users:

-

All users in all groups (according to user permissions): Set the hadoop.proxyuser.knox.groups to

*:

-

Specified users: Add and configure hadoop.proxyuser.knox.users property. The value should be a comma-separated list of users who should have access to the Remote Executor.
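To check whether a particular user is covered, you can print both properties and look the user up in the list. This is a minimal sketch that assumes an hdfs client configured with the cluster's core-site.xml; the script name is hypothetical. The sketch does not resolve the user's groups. Hadoop can also apply further proxy-user restrictions, such as allowed hosts, which this sketch does not check.

```shell
#!/bin/sh
# Sketch: print the Knox proxy-user settings and check whether a user is
# listed. ASSUMPTIONS: an hdfs client configured with the cluster's
# core-site.xml; group membership and host restrictions are not checked.

# Succeed if user $1 is in the comma-separated list $2 or the list is "*".
user_in_list() {
    list=$(printf '%s' "$2" | tr -d ' ')   # tolerate spaces after commas
    [ "$list" = "*" ] && return 0
    case ",$list," in
        *",$1,"*) return 0 ;;
    esac
    return 1
}

# Hypothetical usage: ./check_knox_proxyuser.sh alice
if [ "$#" -eq 1 ]; then
    users=$(hdfs getconf -confKey hadoop.proxyuser.knox.users 2>/dev/null)
    groups=$(hdfs getconf -confKey hadoop.proxyuser.knox.groups 2>/dev/null)
    echo "hadoop.proxyuser.knox.users=${users:-<not set>}"
    echo "hadoop.proxyuser.knox.groups=${groups:-<not set>}"
    if [ "$groups" = "*" ] || user_in_list "$1" "$users"; then
        echo "Knox is configured to impersonate $1"
    else
        echo "$1 is not listed; Knox can impersonate it only through one of its groups" >&2
    fi
fi
```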
