Troubleshooting Hybrid Deployment

This guide describes the most common errors you might encounter while installing Ataccama client-side components in hybrid mode, along with their causes and solutions.

Install and configure Ansible

No such file or directory error

Problem: When copying the Ansible configuration to the home directory, the following error occurs:

(venv) user@dpe-testing-1:~/one/ansible$ cp ansible/ansible-example.cfg ~/.ansible.cfg

cp: cannot stat 'ansible/ansible-example.cfg': No such file or directory

Solution: Check which directory your terminal is currently in (for example, using pwd) and make sure that the paths in the command are correct relative to that directory.

In this example, the command is run from ~/one/ansible, so the source file path should be ansible-example.cfg instead of ansible/ansible-example.cfg:

cp ansible-example.cfg ~/.ansible.cfg
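If you are not sure where the example configuration file is located, you can search for it below the current directory and get the path to use in the cp command. The following is a minimal sketch; the helper name find_relative is hypothetical, and the file name ansible-example.cfg is taken from the example above.

```python
from pathlib import Path
from typing import List, Optional


def find_relative(filename: str, start: Optional[Path] = None) -> List[str]:
    """Return every path to `filename` below `start`, relative to `start`.

    `start` defaults to the current working directory, which is the directory
    that relative paths in shell commands such as `cp` are resolved against.
    """
    base = (start if start is not None else Path.cwd()).resolve()
    return sorted(
        str(path.relative_to(base))
        for path in base.rglob(filename)
        if path.is_file()
    )


# Example (run from the directory where you executed the cp command):
# for candidate in find_relative("ansible-example.cfg"):
#     print(f"cp {candidate} ~/.ansible.cfg")
```

Run from ~/one/ansible, the helper would return ansible-example.cfg, which is the source path the corrected command uses.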

Install client-side components

No hosts matched parsing error

Problem: When running the hybrid-dpe.yml playbook, the following warnings are displayed, stating that the hosts.yml file cannot be parsed and that no hosts were matched:

(venv) <user>@dpe-testing-1:~/one/ansible$ ansible-playbook -i inventories/customer/ -u admin --private-key ~/.ssh/authorized_keys -b hybrid-dpe.yml

[WARNING]: * Failed to parse /home/<user>/one/ansible/inventories/customer/hosts.yml with auto plugin: no root 'plugin' key found, '/home/<user>/one/ansible/inventories/customer/hosts.yml' is not a valid YAML inventory plugin config file

[WARNING]: * Failed to parse /home/<user>/one/ansible/inventories/customer/hosts.yml with yaml plugin: Invalid data from file, expected dictionary and got: localhost

[WARNING]: * Failed to parse /home/<user>/one/ansible/inventories/customer/hosts.yml with ini plugin: Invalid host pattern '---' supplied, '---' is normally a sign this is a YAML file.

[WARNING]: Unable to parse /home/<user>/one/ansible/inventories/customer/hosts.yml as an inventory source

[WARNING]: Unable to parse /home/<user>/one/ansible/inventories/customer as an inventory source

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [processing] ************************************************************************************************************************************************

skipping: no hosts matched

PLAY RECAP *******************************************************************************************************************************************************

Solution: Make sure that the hosts.yml file, located in ~/one/ansible/inventories/<inventory>, is correctly configured and formatted.

Each host name must be listed under hosts and followed by a colon (:), as in the following example:

all:
  children:
    processing:
      hosts:
        localhost:
        <hostname>:

Failed to connect to host via SSH

Problem: When running the hybrid-dpe.yml playbook, a fatal error reports that the host is unreachable through SSH. The permissions of the private key file (0664) are too open, so SSH ignores the key and authentication is denied.

TASK [Gathering Facts gather_subset=['distribution'], gather_timeout=10] *****************************************************************************************

fatal: [localhost]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@\r\n@ WARNING: UNPROTECTED PRIVATE KEY FILE! @\r\n@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@\r\nPermissions 0664 for '/home/<user>/.ssh/id_rsa' are too open.\r\nIt is required that your private key files are NOT accessible by others.\r\nThis private key will be ignored.\r\nLoad key \"/home/<user>/.ssh/id_rsa\": bad permissions\r\n<user>@localhost: Permission denied (publickey,password).", "unreachable": true}

NO MORE HOSTS LEFT ***********************************************************************************************************************************************

PLAY RECAP *******************************************************************************************************************************************************

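localhost : ok=0 changed=0 unreachable=1 failed=0 skipped=0 rescued=0 ignored=0

Solution: Restrict the permissions of the private key file so that only its owner can read and write it. To do this, execute the following command (using the key path reported in the error), then run the playbook again:

chmod 600 ~/.ssh/id_rsa

Keycloak host unavailable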

Problem: When running the hybrid-dpe.yml playbook, the pre-installation task that verifies connectivity to Keycloak fails with an error similar to the following:

TASK [system : Check connectivity to Keycloak url={{ keycloak_url }}] ********************************************************************************************

fatal: [localhost]: FAILED! => {"changed": false, "elapsed": 0, "msg": "Status code was -1 and not [200]: Request failed: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131)>", "redirected": false, "status": -1, "url": "https://[customer].[env].ataccama.online/auth"}

Possible solution: Check whether the Keycloak URL is correctly configured and whether Keycloak can be reached from your machine.

1. Start by checking the vars.yml configuration.
   a. Open the file ~/one/ansible/inventories/<inventory>/group_vars/all/vars.yml.
   b. In the vars.yml file, locate the keycloak_url variable and make sure that it is set to the correct URL:
      keycloak_url: https://[customer].[env].ataccama.online/auth
   c. Save your changes and proceed to step d. If no changes were made, proceed to step 2.
   d. Rerun the hybrid-dpe.yml playbook.
2. Once you have verified that there are no issues with the configuration, test the connection to Keycloak manually, for example, using the following command:
      curl -v https://[customer].[env].ataccama.online/auth
   In addition to confirming that the connection has been established (* Connected to [customer].[env].ataccama.online (10.47.251.31) port 443 (0)), the console output should not contain any timeout or certificate errors.
3. Rerun the hybrid-dpe.yml playbook.
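A status of -1 in the failing task indicates that no HTTP response was received at all, and the urlopen error in the message explains why. If curl is not available on the Ansible controller, the following sketch repeats the request with the Python standard library and classifies the failure. The function names classify_failure and check_url are hypothetical. It uses the system's default CA certificates, so an untrusted issuer is likely, though not guaranteed, to fail here in the same way as in the Ansible task.

```python
import socket
import ssl
import urllib.error
import urllib.request


def classify_failure(exc: BaseException) -> str:
    """Map an exception raised by urlopen() to a short diagnosis."""
    # HTTPError means the server answered, so the host itself is reachable.
    if isinstance(exc, urllib.error.HTTPError):
        return f"reachable (HTTP {exc.code})"
    # URLError wraps the low-level cause in its .reason attribute.
    reason = exc.reason if isinstance(exc, urllib.error.URLError) else exc
    if isinstance(reason, ssl.SSLCertVerificationError):
        return "certificate not trusted (CERTIFICATE_VERIFY_FAILED)"
    if isinstance(reason, (socket.timeout, TimeoutError)):
        return "timeout"
    if isinstance(reason, ConnectionRefusedError):
        return "connection refused"
    if isinstance(reason, socket.gaierror):
        return "host name could not be resolved"
    return f"other error: {reason}"


def check_url(url: str, timeout: float = 10.0) -> str:
    """Request `url` once and return a diagnosis string."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return f"reachable (HTTP {resp.status})"
    except OSError as exc:  # URLError, HTTPError and socket errors are OSError subclasses
        return classify_failure(exc)


# Example (replace the placeholders with the value of keycloak_url):
# print(check_url("https://[customer].[env].ataccama.online/auth"))
```

A result of "certificate not trusted" means that the connection works but the certificate issuer is not trusted on the machine, whereas "timeout" or "connection refused" points to a network or firewall problem.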

MinIO host unavailable

Problem: When running the hybrid-dpe.yml playbook, the pre-installation task that verifies connectivity to MinIO fails with an error similar to the following:

TASK [system : Check connectivity to MinIO url={{ minio_url }}, status_code={{ range(200,499) | list }}] *********************************************************

fatal: [localhost]: FAILED! => {"changed": false, "elapsed": 0, "msg": "Status code was -1 and not [200, 201, 202, ..., 497, 498]: Request failed: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1131)>", "redirected": false, "status": -1, "url": "https://minio.[customer].[env].ataccama.online"}

Possible solution: Check whether the MinIO URL is correctly configured and whether MinIO can be reached from your machine.

1. Start by checking the vars.yml configuration.
   a. Open the file ~/one/ansible/inventories/<inventory>/group_vars/all/vars.yml.
   b. In the vars.yml file, locate the minio_url variable and make sure that it is set to the correct URL:
      minio_url: https://minio.[customer].[env].ataccama.online
   c. Save your changes and proceed to step d. If no changes were made, proceed to step 2.
   d. Rerun the hybrid-dpe.yml playbook.
2. Once you have verified that there are no issues with the configuration, test the connection to MinIO manually, for example, using the following command:
      curl -v https://minio.[customer].[env].ataccama.online
   In addition to confirming that the connection has been established (* Connected to minio.[customer].[env].ataccama.online (10.47.246.36) port 443 (0)), the console output should not contain any timeout or certificate errors.
3. Rerun the hybrid-dpe.yml playbook.
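Unlike the Keycloak check, the MinIO task accepts any status code from range(200, 499), which in Python excludes the upper bound, so the accepted codes are 200 through 498. An error response such as 403 therefore still counts as reachable; the task fails only when no HTTP response arrives (reported as status -1) or when MinIO answers with 499 or higher. The following sketch mirrors that rule to help interpret a status code you observe, for example, with curl; the names are hypothetical.

```python
# Accepted status codes as listed in the task: range(200, 499) -> 200..498.
ACCEPTED_MINIO_STATUSES = range(200, 499)


def minio_check_passes(status: int) -> bool:
    """Return True if the connectivity task would accept `status`.

    A status of -1 means that no HTTP response was received (for example,
    because the TLS handshake failed), so it is never accepted.
    """
    return status in ACCEPTED_MINIO_STATUSES


def describe(status: int) -> str:
    """Turn a status code into a short troubleshooting hint."""
    if status == -1:
        return "no HTTP response: check DNS, the network path, and TLS certificates"
    if minio_check_passes(status):
        return f"MinIO answered with HTTP {status}: host is reachable"
    return f"MinIO answered with HTTP {status}: outside the accepted range"
```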

DPM host unavailable

Problem: When running the hybrid-dpe.yml playbook, the post-installation task that verifies the availability of DPE fails with an error similar to the following:

TASK [dpe : Wait for dpe to come up (check monitoring endpoint ready) url=http://{{ ansible_host }}:{{ dpe.http_port }}/actuator/health, status_code=200] ********

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (30 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (29 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (28 retries left).

...

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (3 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (2 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (1 retries left).

fatal: [localhost]: FAILED! => {"attempts": 30, "changed": false, "connection": "close", "content_type": "application/vnd.spring-boot.actuator.v3+json", "date": "Wed, 27 Oct 2021 15:14:23 GMT", "elapsed": 0, "json": {"components": {"db": {"details": {"database": "H2", "validationQuery": "isValid()"}, "status": "UP"}, "diskSpace": {"details": {"exists": true, "free": 185962528768, "threshold": 10485760, "total": 210182905856}, "status": "UP"}, "dpm": {"details": {"state": "CONNECTING"}, "status": "DOWN"}, "livenessState": {"status": "UP"}, "ping": {"status": "UP"}, "readinessState": {"status": "UP"}}, "groups": ["liveness", "readiness"], "status": "DOWN"}, "msg": "Status code was 503 and not [200]: HTTP Error 503: ", "redirected": false, "status": 503, "transfer_encoding": "chunked", "url": "http://localhost:8034/actuator/health", "x_correlation_id": "f6793e"}

Solution: Check whether the DPM host and gRPC port are correctly configured and whether the DPM gRPC port can be reached from your machine. In the health check response above, the dpm component reports the status DOWN with the state CONNECTING, which indicates that DPE cannot establish a connection to DPM.

1. Start by checking the vars.yml configuration.
   a. Open the file ~/one/ansible/inventories/<inventory>/group_vars/all/vars.yml.
   b. In the vars.yml file, locate the dpm variable and make sure that host and grpc_port are correctly set:
      dpm:
        host: dpm-grpc.[customer].[env].ataccama.online
        grpc_port: 443
   c. Save your changes and proceed to step d. If no changes were made, proceed to step 2.
   d. Rerun the hybrid-dpe.yml playbook.
2. Once you have verified that there are no issues with the configuration, test the connection to the DPM gRPC port manually. For example, you can use Telnet, a utility for testing connectivity to remote servers. Depending on your OS, execute the following commands:
   - Red Hat Enterprise Linux (RHEL):
      # Install the Telnet client
      sudo dnf install telnet
      # Connect to the DPM gRPC port
      telnet dpm-grpc.[customer].[env].ataccama.online 443
   - Ubuntu:
      # Install the Telnet client
      sudo apt install telnet -y
      # Connect to the DPM gRPC port
      telnet dpm-grpc.[customer].[env].ataccama.online 443
   The expected output is as follows:
      Trying 10.47.251.31...
      Connected to dpm-grpc.[customer].[env].ataccama.online.
      Escape character is '^]'.
   To close the connection, press Ctrl+] and enter quit.
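If Telnet cannot be installed, the same TCP check can be done with the Python standard library. The sketch below also includes a helper for reading the /actuator/health response returned by the failing task: down_components lists every component that does not report UP, which for the response shown above is only dpm. Both function names are hypothetical, and the host and port are the placeholders from vars.yml. A successful TCP connection only shows that the port is reachable; it does not verify TLS or the gRPC service itself.

```python
import socket


def port_open(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds (like `telnet host port`)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name not resolved
        return False


def down_components(health: dict) -> dict:
    """Return {component: details} for every health component that is not UP."""
    return {
        name: component.get("details", {})
        for name, component in health.get("components", {}).items()
        if component.get("status") != "UP"
    }


# Example (replace the placeholders with the values from vars.yml):
# print(port_open("dpm-grpc.[customer].[env].ataccama.online", 443))
```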

License file not found or missing

Problem: When running the hybrid-dpe.yml playbook, the DPE installation task fails with an error similar to the following:

An exception occurred during task execution.

To see the full traceback, use -vvv.

The error was: If you are using a module and expect the file to exist on the remote, see the remote_src option

failed: [localhost] (item=src: /path/to/license.plf -> dest: /opt/ataccama/one/dpe/license/license.plf) => {"ansible_loop_var": "file", "changed": false, "file": {"dest": "/opt/ataccama/one/dpe/license/license.plf", "src": "/path/to/license.plf"}, "msg": "Could not find or access '/path/to/license.plf' on the Ansible Controller.\nIf you are using a module and expect the file to exist on the remote, see the remote_src option"}

An exception occurred during task execution.

To see the full traceback, use -vvv.

The error was: If you are using a module and expect the file to exist on the remote, see the remote_src option

failed: [localhost] (item=src: /path/to/license.plf -> dest: /opt/ataccama/one/dpe/license.plf) => {"ansible_loop_var": "file", "changed": false, "file": {"dest": "/opt/ataccama/one/dpe/license.plf", "src": "/path/to/license.plf"}, "msg": "Could not find or access '/path/to/license.plf' on the Ansible Controller.\nIf you are using a module and expect the file to exist on the remote, see the remote_src option"}

An exception occurred during task execution.

To see the full traceback, use -vvv.

The error was: If you are using a module and expect the file to exist on the remote, see the remote_src option

failed: [localhost] (item=src: /path/to/license.plf -> dest: /home/dpe) => {"ansible_loop_var": "file", "changed": false, "file": {"dest": "/home/dpe", "mode": "0755", "src": "/path/to/license.plf"}, "msg": "Could not find or access '/path/to/license.plf' on the Ansible Controller.\nIf you are using a module and expect the file to exist on the remote, see the remote_src option"}

Solution: Check that the license file path is correctly set and that the license file exists at that location on the Ansible controller, that is, the machine from which you run the playbook.

To do this, follow these steps:

1. Open the file ~/one/ansible/inventories/<inventory>/group_vars/all/vars.yml.
2. In the vars.yml file, locate the dpe_license_file variable and make sure that the path is correctly defined:
      dpe_license_file: /home/<user>/license_v13.plf
3. Save your changes.
4. Rerun the hybrid-dpe.yml playbook.
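Because Ansible looks for the license file on the controller, you can check the value of dpe_license_file there before rerunning the playbook. The following is a minimal sketch: the function name check_license_file is hypothetical, the readability check applies to the user running the script (run it as the user that runs ansible-playbook), and the .plf extension check is only a sanity check based on the file names used in this guide.

```python
import os
from pathlib import Path
from typing import List


def check_license_file(path: str) -> List[str]:
    """Return a list of problems with the license path (empty if none were found)."""
    problems: List[str] = []
    p = Path(path)
    if not p.exists():
        problems.append("file does not exist")
    elif not p.is_file():
        problems.append("path is not a regular file")
    elif not os.access(p, os.R_OK):
        problems.append("file is not readable by the current user")
    if p.suffix != ".plf":
        problems.append("unexpected extension (the license files in this guide use .plf)")
    return problems


# Example (use the value of dpe_license_file from vars.yml):
# print(check_license_file("/home/<user>/license_v13.plf"))
```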

Application package cannot be downloaded

Problem: When running the hybrid-dpe.yml playbook, the DPE installation task fails with an error similar to the following:

TASK [one_module : Download application package from remote location | module: dpe url={{ package_url }}, dest={{ package_download_directory }}/{{ module_name }}-{{ module_version }}.zip, owner={{ service_user }}, group={{ service_group }}, mode=420] ***

fatal: [localhost]: FAILED! => {"changed": false, "dest": "/var/tmp/dpe-13.3.1-final.zip", "elapsed": 0, "gid": 998, "group": "dpe", "mode": "0644", "msg": "Request failed", "owner": "dpe", "response": "HTTP Error 403: Forbidden", "size": 524327921, "state": "file", "status_code": 403, "uid": 997, "url": "https://ataccama.s3.amazonaws.com/products/releases/dpe-assembly-13.3.1-final-linuxs.zip"}

Solution: Check that the URL of the installation package is correctly set and that the package can be accessed from the DPE server. (In this example, the file name in the URL ends in -linuxs.zip instead of -linux.zip.) To do this:

1. Open the file ~/one/ansible/inventories/<inventory>/group_vars/all/vars.yml.
2. In the vars.yml file, locate the package_url variable (under packages > dpe) and make sure that the URL is correctly defined:
      packages:
        dpe:
          version: 13.3.1-final
          package_url: "https://ataccama.s3.amazonaws.com/products/releases/dpe-assembly-13.3.1-final-linux.zip"
3. Save your changes.
4. Rerun the hybrid-dpe.yml playbook.
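A typo in the package file name is enough to cause this error: S3 typically returns 403 Forbidden rather than 404 when the requested object does not exist and the caller is not allowed to list the bucket. A quick consistency check compares the file name in package_url with the version configured next to it. The sketch below assumes the naming pattern dpe-assembly-<version>-linux.zip seen in this guide; treat that pattern as an assumption and adjust it if your package is named differently. The function name is hypothetical.

```python
import posixpath
from typing import List
from urllib.parse import urlparse


def package_url_problems(version: str, package_url: str) -> List[str]:
    """Compare the file name in package_url with the configured version.

    Assumes the pattern dpe-assembly-<version>-linux.zip used in this guide.
    """
    expected = f"dpe-assembly-{version}-linux.zip"
    filename = posixpath.basename(urlparse(package_url).path)
    if filename != expected:
        return [f"file name is {filename!r}, expected {expected!r}"]
    return []
```

Applied to the URL from the error message, the check reports the -linuxs.zip typo, while the corrected value from vars.yml passes.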

DPE does not start

Problem: When running the hybrid-dpe.yml playbook, the task that waits for DPE to start fails with an error similar to the following:

TASK [dpe : Wait for dpe to come up (check monitoring endpoint ready) url=http://{{ ansible_host }}:{{ dpe.http_port }}/actuator/health, status_code=200] ********

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (30 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (29 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (28 retries left).

...

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (3 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (2 retries left).

FAILED - RETRYING: Wait for dpe to come up (check monitoring endpoint ready) (1 retries left).

fatal: [localhost]: FAILED! => {"attempts": 30, "changed": false, "elapsed": 0, "msg": "Status code was -1 and not [200]: Request failed: <urlopen error [Errno 111] Connection refused>", "redirected": false, "status": -1, "url": "http://localhost:8034/actuator/health"}

Possible solution: Connection refused means that nothing is listening on the DPE HTTP port, that is, DPE is not running. The underlying cause needs to be diagnosed from the DPE logs before it can be resolved. To do so:

1. Query the DPE service logs using the following command:
      journalctl -u dpe -f
2. In the output, look for the Application run failed message or for messages with the severity level ERROR, as shown in the following example. In this example, the exception was caused by a corrupt JWT key in the DPE properties, which needs to be replaced with a valid DPE private key before you rerun the hybrid-dpe.yml playbook. The necessary JWT keys are provided by Ataccama along with the other credentials.
   On the client side, these keys are defined in the vars.yml file located in one/ansible/inventories/<inventory>/group_vars/all (variables name, content, and fp under dpm_jwt_key, and variable private under dpe_jwt_key):
      dpm_jwt_key:
        name: dpm-prod-key
        # jwt key content
        content: '{"kty":"EC","crv":"P-256","kid":"rsS16kdWaPWHmQysa6kC4lL1xqYWJXfB-Uydd6SQjLc","x":"NQtIiwPXdYyvhXGxtoOBPn9zztHNO8dU8TQUc-S7IlU","y":"-2RFU45NJNSCDiRG6yEEsP8WTPt_6Mgnb6UIujA4H7I","alg":"ES256"}'
        # jwt key fingerprint
        fp: rsS16kdWaPWHmQysa6kC4lL1xqYWJXfB-Uydd6SQjLc
      ...
      # private_key of the DPE module. Change accordingly
      dpe_jwt_key:
        private: <replace with DPE private key>
   For more information, see Hybrid DPE Installation Guide, section Build the Ansible inventory.
   DPE logs output example:

Oct 27 16:17:16 dpe-testing-1 dpe[1270788]: {"@timestamp":"2021-10-27T16:17:16.695Z","@version":"1","message":"Application run failed","logger_name":"org.springframework.boot.SpringApplication","thread_name":"restartedMain","severity":"ERROR","level_value":40000,"stack_trace":"org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'gRpcJwtCredentialsProvider' defined in class path resource [com/ataccama/lib/authentication/grpc/client/spring/GRpcAuthClientConfiguration.class]: Unsatisfied dependency expressed through method 'gRpcJwtCredentialsProvider' parameter 0; nested exception is org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'internalJwtCallCredentialsProvider' defined in class path resource [com/ataccama/lib/authentication/grpc/client/spring/GRpcAuthClientConfiguration.class]: Unsatisfied dependency expressed through method 'internalJwtCallCredentialsProvider' parameter 0; nested exception is org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'internalJwtGenerator' defined in class path resource [com/ataccama/lib/authentication/internal/jwt/starter/InternalJwtGeneratorAutoConfiguration.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator]: Factory method 'internalJwtGenerator' threw exception; nested exception is java.lang.IllegalArgumentException: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:800)\n\tat ... 
org.springframework.boot.SpringApplication.run(SpringApplication.java:1332)\n\tat com.ataccama.dpe.application.Application.main(Application.java:32)\n\tat java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\n\tat java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\n\tat java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\n\tat java.base/java.lang.reflect.Method.invoke(Method.java:566)\n\tat org.springframework.boot.devtools.restart.RestartLauncher.run(RestartLauncher.java:49)\nCaused by: org.springframework.beans.factory.UnsatisfiedDependencyException: Error creating bean with name 'internalJwtCallCredentialsProvider' defined in class path resource [com/ataccama/lib/authentication/grpc/client/spring/GRpcAuthClientConfiguration.class]: Unsatisfied dependency expressed through method 'internalJwtCallCredentialsProvider' parameter 0; nested exception is org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'internalJwtGenerator' defined in class path resource [com/ataccama/lib/authentication/internal/jwt/starter/InternalJwtGeneratorAutoConfiguration.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator]: Factory method 'internalJwtGenerator' threw exception; nested exception is java.lang.IllegalArgumentException: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:800)\n\tat ... org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:887)\n\tat org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:791)\n\t... 
43 common frames omitted\nCaused by: org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'internalJwtGenerator' defined in class path resource [com/ataccama/lib/authentication/internal/jwt/starter/InternalJwtGeneratorAutoConfiguration.class]: Bean instantiation via factory method failed; nested exception is org.springframework.beans.BeanInstantiationException: Failed to instantiate [com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator]: Factory method 'internalJwtGenerator' threw exception; nested exception is java.lang.IllegalArgumentException: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat org.springframework.beans.factory.support.ConstructorResolver.instantiate(ConstructorResolver.java:658)\n\tat ... org.springframework.beans.factory.support.ConstructorResolver.resolveAutowiredArgument(ConstructorResolver.java:887)\n\tat org.springframework.beans.factory.support.ConstructorResolver.createArgumentArray(ConstructorResolver.java:791)\n\t... 57 common frames omitted\nCaused by: org.springframework.beans.BeanInstantiationException: Failed to instantiate [com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator]: Factory method 'internalJwtGenerator' threw exception; nested exception is java.lang.IllegalArgumentException: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat org.springframework.beans.factory.support.SimpleInstantiationStrategy.instantiate(SimpleInstantiationStrategy.java:185)\n\tat org.springframework.beans.factory.support.ConstructorResolver.instantiate(ConstructorResolver.java:653)\n\t... 
71 common frames omitted\nCaused by: java.lang.IllegalArgumentException: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator.fromJWK(InternalJwtGenerator.java:93)\n\tat com.ataccama.lib.authentication.internal.jwt.starter.InternalJwtGeneratorAutoConfiguration.internalJwtGenerator(InternalJwtGeneratorAutoConfiguration.java:57)\n\tat java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\n\tat java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\n\tat java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\n\tat java.base/java.lang.reflect.Method.invoke(Method.java:566)\n\tat org.springframework.beans.factory.support.SimpleInstantiationStrategy.instantiate(SimpleInstantiationStrategy.java:154)\n\t... 72 common frames omitted\nCaused by: java.text.ParseException: Invalid JSON: Unexpected token &kty\" at position 6.\n\tat com.nimbusds.jose.util.JSONObjectUtils.parse(JSONObjectUtils.java:111)\n\tat com.nimbusds.jose.util.JSONObjectUtils.parse(JSONObjectUtils.java:69)\n\tat com.nimbusds.jose.jwk.JWK.parse(JWK.java:575)\n\tat com.ataccama.lib.authentication.internal.jwt.InternalJwtGenerator.fromJWK(InternalJwtGenerator.java:69)\n\t... 78 common frames omitted\n","application":"oneApplication"} -

3. After making the necessary configuration changes, rerun the hybrid-dpe.yml playbook.
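On a busy host, the relevant entry can be hard to spot in the journalctl output. Because DPE writes its log messages as JSON objects (see the example above), the failing entries can be filtered programmatically. The sketch below assumes that each log entry is a single line in which the JSON object starts at the first {, and it reports the timestamp, the message, and the last Caused by: line of the stack trace, which is usually the root cause (in the example above, the Invalid JSON parse error). The function and script names are hypothetical.

```python
import json
import sys
from typing import Iterable, Iterator, Optional, Tuple


def error_entries(lines: Iterable[str]) -> Iterator[Tuple[Optional[str], Optional[str], str]]:
    """Yield (timestamp, message, root cause) for ERROR and 'Application run failed' entries."""
    for line in lines:
        start = line.find("{")
        if start == -1:
            continue  # not a JSON log entry
        try:
            entry = json.loads(line[start:])
        except json.JSONDecodeError:
            continue  # truncated or non-JSON payload
        if not isinstance(entry, dict):
            continue
        if entry.get("severity") != "ERROR" and entry.get("message") != "Application run failed":
            continue
        trace_lines = entry.get("stack_trace", "").splitlines()
        causes = [ln for ln in trace_lines if ln.startswith("Caused by:")]
        root_cause = causes[-1] if causes else (trace_lines[0] if trace_lines else "")
        yield entry.get("@timestamp"), entry.get("message"), root_cause


def main() -> None:
    # When saving this as a script (for example, dpe_errors.py), add a call to main()
    # at the end and run: journalctl -u dpe --no-pager | python3 dpe_errors.py
    for timestamp, message, root_cause in error_entries(sys.stdin):
        print(timestamp, message, root_cause, sep=" | ")
```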

Troubleshoot DPE

DPE failing to register with DPM

Problem: DPE fails to register with DPM and logs the following exception message: "eventId=failedToRegisterDueToStatus dpmUrl=dpm-grpc.teranet.test.ataccama.online:443, statusCode=INTERNAL, statusDescription=http2 exception".

Possible solution: The gRPC client that DPE uses to connect to DPM does not have TLS enabled or trusted certificates configured. Enable TLS and configure which certificates to trust using one of the following methods:

#Set to true to enable TLS communication

ataccama.client.connection.dpm.grpc.tls.enabled=true

#Select one of the following methods:

#Set to true to trust all TLS certificates

#This is not recommended as a permanent solution

#ataccama.client.connection.dpm.grpc.tls.trust-all=false

#Or set the path to certificate collection

#ataccama.client.grpc.connection.dpm.tls.trust-cert-collection=./path/to/trust/cert/chain.crt

#Or configure these properties to access a certificate trust store

#ataccama.client.grpc.connection.dpm.tls.trust-store=file:path/to/trust/cert/trust-store.pfx

#ataccama.client.grpc.connection.dpm.tls.trust-store-password=pswd

#ataccama.client.grpc.connection.dpm.tls.trust-store-type=PKCS12|JCEKS

DPE not running

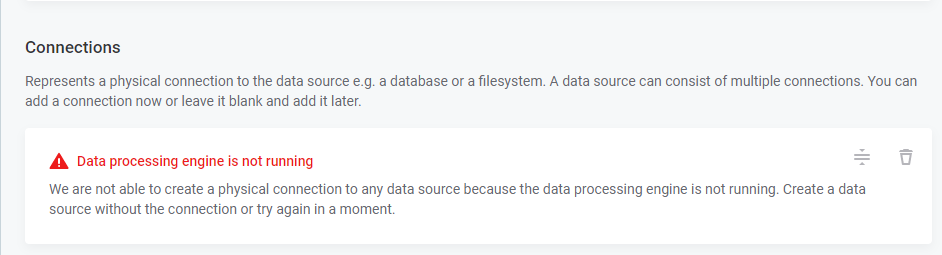

Problem: After completing the installation procedure and trying to create a new data source connection in ONE (Knowledge Catalog > Sources > Create), a message is displayed stating that DPE is not running.

Possible solutions: As there are several possible underlying causes, we recommend trying the following solutions in the order listed. After completing each step, check whether the issue is resolved. If not, continue to the next step.

1. Refresh the web application in the browser (F5).
2. Verify whether the Data Processing Module (DPM) is running. The quickest way to do this is typically to open the DPM Admin Console in a browser: https://dpm.[customer].[env].ataccama.online.
   If the DPM Admin Console cannot be accessed, try to connect to the DPM HTTP endpoint using the following command:
      curl -v https://dpm.[customer].[env].ataccama.online
   If the connection cannot be established, contact Ataccama Support.
3. If the DPM Admin Console can be accessed as described in step 2, check whether DPE is running. To do this, in the Admin Console (https://dpm.[customer].[env].ataccama.online), go to the Engines tab.
   a. If there are no DPEs listed, check the DPE service status using the following command:
         sudo systemctl status dpe
      If the service is not running, investigate the issue further by checking the DPE logs:
         journalctl -u dpe -f
      For more information, see sections DPE does not start and DPE registered but disconnected.
   b. If at least one DPE is listed, check whether its status is READY. All other statuses indicate an issue with DPE. In that case, the DPE logs should provide more information about the underlying cause. To access the logs, execute the following command:
         journalctl -u dpe -f
      It is also possible that the issue is caused by a communication problem between the Metadata Management Module (MMM) and DPM. In that case, contact Ataccama Support.
   See also DPE registered but disconnected.

DPE registered but disconnected

Problem: DPE is registered with DPM but is disconnected. This section summarizes typical connection issues between DPE and DPM: how to detect them and how to fix them.

Possible solutions: Start by checking the DPE logs using the following command:

journalctl -u dpe -f

Depending on the exception that DPE logged, there are several possibilities:

- "code":"UNAUTHENTICATED","message":"Unable to register." or UNAUTHENTICATED: Public key not found: This typically indicates an issue with the DPM and DPE JWT keys. Double-check that the DPM and DPE JWT keys have been correctly provided, as explained in DPE does not start. If the issue persists, contact Ataccama Support.
- "code":"UNAVAILABLE","message":"Unable to register.": This exception usually occurs when the DPM gRPC port has been incorrectly configured. For more instructions, see DPM host unavailable.

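When DPE reports UNAUTHENTICATED, a quick first check is whether the key values in vars.yml are well-formed JSON Web Keys; the startup failure described in DPE does not start was exactly such a parse error (Invalid JSON). The following sketch parses a key string and checks the members carried by an EC key such as the dpm_jwt_key example (kty, crv, x, y), plus d, which an EC private key must contain according to RFC 7518. It assumes that dpe_jwt_key.private is also a JWK in JSON form, and the function name is hypothetical. It can only detect structurally invalid keys, not keys that are valid but belong to the wrong environment.

```python
import json
from typing import List

# Members present in the EC public key example (dpm_jwt_key.content).
REQUIRED_EC_MEMBERS = ("kty", "crv", "x", "y")


def jwk_problems(raw: str, private: bool = False) -> List[str]:
    """Return structural problems with an EC JSON Web Key string (empty if none were found)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} at position {exc.pos}"]
    if not isinstance(key, dict):
        return ["JSON value is not an object"]
    problems = [f"missing member {m!r}" for m in REQUIRED_EC_MEMBERS if m not in key]
    if key.get("kty", "EC") != "EC":
        problems.append(f"unexpected kty {key['kty']!r} (the example key uses 'EC')")
    if private and "d" not in key:
        problems.append("missing private member 'd'")
    return problems
```

For example, the dpm_jwt_key content shown in DPE does not start passes as a public key, but would be reported as missing d if it were used as the DPE private key.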
All DPE jobs failing

Problem: After completing the installation procedure and trying to run any job on the data source in ONE (metadata import, sample or full profiling), all initiated jobs finish with an error.

Possible solution: Typically, this issue occurs due to incorrect or missing credentials for ONE Object Storage (MinIO).

1. Start by investigating the issue further. In the DPM Admin Console (https://dpm.[customer].[env].ataccama.online), on the Jobs tab, locate and select the failed job. This opens the job details screen.
2. On the job details screen, under General Information > Events, select Open related events and look for events with the status FAILURE. The event description contains more information about the issue, for example:
      Failed to process job in DPE. Reason: Failed to save results for a673241d-2d55-4186-8a86-acdb4bb6b3d4.
   Alternatively, you can find this information in the DPE logs using the following command: journalctl -u dpe -f.
3. To fix the issue, open the vars.yml file located in ~/one/ansible/inventories/<inventory>/group_vars/all.
4. In the file, locate the access_key and secret_key variables under minio and make sure that the correct values are provided:
      minio:
        access_key: minio
        secret_key: minio-secret
5. Rerun the hybrid-dpe.yml playbook.
