Remote Plan Execution

Remote plan execution lets you run any ONE plan on the Ataccama ONE Platform. Plans are typically developed and tested on small data sets or in dedicated environments. This lets you validate a plan and assess how well it performs, so that you can make the necessary adjustments before applying it to your actual data. Once the plan works as expected, you can run it remotely on production data in ONE.

Jobs are first sent to the Data Processing Manager (DPM) module, which forwards the job information to a suitable Data Processing Engine (DPE). Actual data processing takes place only in DPE instances.

Configure remote plan execution

Remote plan execution leverages existing controls and concepts of ONE Desktop. Once you connect to the Ataccama ONE Platform, you can choose between the following launch options:

- Local: A suitable DPE is selected automatically depending on the plan. The plan is first preprocessed to detect any steps that require ONE Spark DPE.

- Spark: All jobs are sent only to ONE Spark DPE.
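The difference between the two launch options can be pictured as a routing decision. The following Python sketch is illustrative only. `LaunchOption`, `Step`, the `requires_spark` flag, and `select_engine` are hypothetical names, and the sketch assumes that in Local mode a plan containing any Spark-only step is sent to ONE Spark DPE while all other plans go to a standard DPE. It does not describe how DPM actually selects an engine.

```python
from dataclasses import dataclass
from enum import Enum


class LaunchOption(Enum):
    LOCAL = "Local"
    SPARK = "Spark"


@dataclass
class Step:
    name: str
    # Hypothetical flag: True if this step can only run on ONE Spark DPE.
    requires_spark: bool = False


def select_engine(steps: list[Step], option: LaunchOption) -> str:
    """Return the kind of DPE a plan's jobs would be sent to (illustrative only)."""
    if option is LaunchOption.SPARK:
        # Spark launch option: every job goes to ONE Spark DPE.
        return "ONE Spark DPE"
    # Local launch option: preprocess the plan and look for Spark-only steps.
    if any(step.requires_spark for step in steps):
        return "ONE Spark DPE"
    # Assumption: plans without Spark-only steps go to a standard DPE.
    return "DPE"
```

For example, a plan made only of ordinary steps is routed to a standard DPE under Local and to ONE Spark DPE under Spark.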

To create a new environment for the Ataccama ONE Platform, follow the instructions in Environments, section Add a new environment. Configure the new environment to use your Ataccama ONE Platform server and set Launch type to ONE Platform launch.

Drivers used for remote execution

For security reasons, database drivers are not transferred to DPE when plans are executed remotely. Make sure the file name of each database driver (case-insensitive) is the same in both your DPE instance and ONE Desktop.
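Because drivers are matched by file name, ignoring case, you can compare the two sides before running a plan. The following Python sketch assumes you have already collected the driver file names used by ONE Desktop and by the DPE instance (how you obtain them depends on your installation). `missing_drivers` is a hypothetical helper, not part of ONE.

```python
def missing_drivers(desktop_drivers: list[str], dpe_drivers: list[str]) -> list[str]:
    """Return ONE Desktop driver file names that have no case-insensitive match on the DPE."""
    # casefold() gives a case-insensitive comparison key for each file name.
    available = {name.casefold() for name in dpe_drivers}
    return [name for name in desktop_drivers if name.casefold() not in available]
```

For example, `missing_drivers(["postgresql-42.7.3.jar", "ojdbc11.jar"], ["PostgreSQL-42.7.3.JAR"])` returns `["ojdbc11.jar"]`: the PostgreSQL driver matches despite the different case, while the Oracle driver is absent on the DPE.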

Remote execution on ONE Spark DPE

The ONE Metadata Reader and ONE Metadata Writer steps cannot run on Spark.
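Before switching a plan to Spark, it can help to check it for these steps. The following Python sketch assumes you already have the plan's step types as a list of names (extracting them from a plan is not covered here). `SPARK_UNSUPPORTED` and `spark_blockers` are hypothetical names; only the two step types come from this page.

```python
# Step types that this page lists as unsupported on Spark.
SPARK_UNSUPPORTED = {"ONE Metadata Reader", "ONE Metadata Writer"}


def spark_blockers(step_types: list[str]) -> list[str]:
    """Return the step types in a plan that cannot run on ONE Spark DPE."""
    return [step_type for step_type in step_types if step_type in SPARK_UNSUPPORTED]
```

An empty result means none of the listed unsupported steps appear in the plan.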

If you want all plans to be executed on the Spark engine, set the run configuration as follows:

-

In ONE Desktop, select the environment that you want to use from the toolbar. The environment must be configured for Ataccama ONE Platform.

-

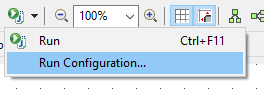

Open your plan and in the toolbar, select Run configuration.

-

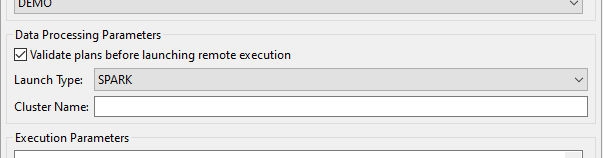

In Data Processing Parameters, select SPARK and provide the name of your cluster.

-

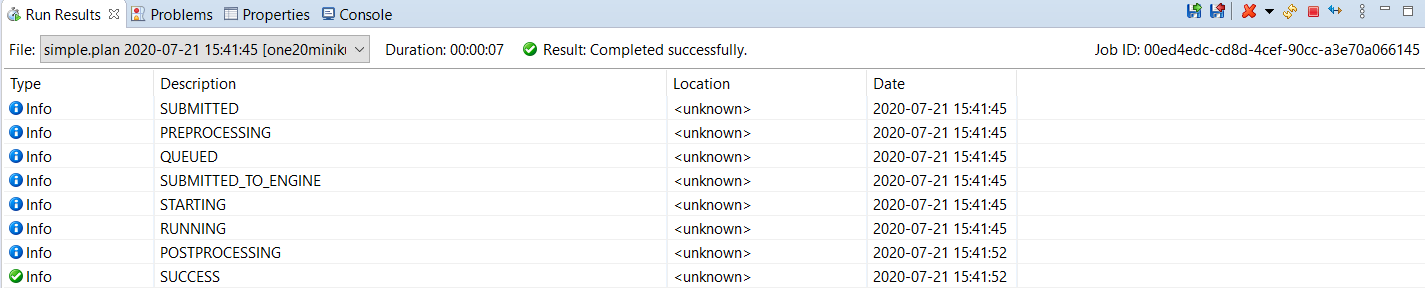

Select Run to execute your plan on ONE Spark DPE. You can track the progress and the outcome of the run on the Run Results tab.
